Today I read an article in The Industrial-Organizational Psychologist (the less formal journal published by the Society for Industrial and Organizational Psychology, or SIOP) that really resonated with me.

Has Industrial-Organizational Psychology Lost Its Way?

-Deniz S. Ones, Robert B. Kaiser, Tomas Chamorro-Premuzic, Cicek Svensson

Why? Because I think many of their points also apply to the field of Psychometrics and the way we innovate. They summarize their argument in six bullet points describing what they see as a troubling direction for their field. Honestly, I suppose much of Academia fits these descriptions, while some great innovation is happening in free MOOCs and the like, because those efforts aren’t fettered by the chains of the purely or partially academic world.

- an overemphasis on theory

- a proliferation of, and fixation on, trivial methodological minutiae

- a suppression of exploration and a repression of innovation

- an unhealthy obsession with publication while ignoring practical issues

- a tendency to be distracted by fads

- a growing habit of losing real-world influence to other fields.

So what is psychometrics supposed to be doing?

What has irked me most about Psychometrics over the years is the overemphasis on theory and minutiae rather than on solving practical problems. It is the main reason I stopped attending the NCME conference and now attend practice-oriented conferences like ATP. My frustration stems from my desire to improve the quality of assessment throughout the world. Developing esoteric DIF methodology, new multidimensional IRT models, or yet another CAT sub-algorithm that offers a 0.5% gain in efficiency over the dozens that already exist… work like that isn’t going to touch all the terrible assessment being done in the world, or the terrible decisions being made about people based on those assessments. Don’t get me wrong, there is a place for substantive research, but I feel that improving everyday assessment practice is underserved.

The Goal: Quality Assessment

And it’s that goal that drives the work I do. There is a lot of mediocre or downright bad assessment out there in the world. I once talked to a pre-employment testing company and asked if I could help them implement strong psychometrics to improve their tests, as well as their validity documentation. Their answer? Essentially, “No thanks, we’ve never been sued, so we’re OK where we are.” Thankfully, they fell in the mediocre category rather than the downright bad category.

Of course, in many cases there is simply a lack of incentive to produce quality assessment. Higher Education is a classic case. Professional schools (e.g., Medicine) often have accreditation that depends, at least in part, on demonstrating quality assessment of their students. There is typically no such constraint on undergraduate education, so your Intro to Psychology and freshman English Comp classes still do assessment the same way they did 40 years ago… with no psychometrics whatsoever. Many small credentialing organizations lack incentive too, until they decide to pursue accreditation.

I like to describe the situation this way: take all the assessments in the world and assign each one a percentile rank for psychometric quality. The top 5% belong to big organizations, such as Nursing licensure in the US, that have in-house psychometricians, large volumes, and huge budgets. We don’t have to worry about them, as they will be doing good assessment (and that substantive research I mentioned might be of use to them!). The bottom 50% or more are things like university classroom assessments, which will probably never use real psychometrics. The group I’m concerned about sits between the 50th and 95th percentiles.

Example: Credentialing

A great example of this middle tier is the world of Credentialing. There are a TON of poorly constructed licensure and certification tests being used to make incredibly important decisions about people’s lives. Some are poor simply because the organization is for-profit and doesn’t care. Others are the result of external constraints. I once worked with the Department of Agriculture of a western US state, where the legislature mandated licensure tests for certain professions even though some of those tests were taken by only about 3 people per year.

So how do we get groups like that to follow best practices in assessment? In the past, the only way to get psychometrics done was to pay a consultant a ton of money that they don’t have. Why spend $5k on an Angoff study or classical test report for 3 people per year? I don’t blame them. The field of Psychometrics needs to find a way to help such groups. Otherwise, their tests stay low quality and they keep granting licenses to unqualified practitioners.

There are some bogus providers out there, for sure. I’ve seen Certification delivery platforms that don’t even store examinee responses, which are necessary for any psychometric analysis whatsoever. Obviously, they aren’t doing much to help the situation. Software platforms that focus on things like tracking payments and prerequisites miss the boat too; by ignoring test quality, they are effectively condoning bad assessment.

Similarly, mathematically complex advances such as multidimensional IRT are of no use to this type of organization, so they aren’t helping the situation either.

An Opportunity for Innovation

I think there is still a decent amount of innovation in our field, and some organizations are doing great work developing innovative items, psychometrics, and assessments. However, large corporations often snap up fresh PhDs in Psychometrics and then lock them in a back room doing uninnovative work, like running SAS scripts or conducting Angoff studies over and over and over. This happened to me, and after only 18 months I was ready for more.

Unfortunately, I have found that a lot of innovation is not driven by producing good measurement. I was in a discussion on LinkedIn where someone was pushing gamification for assessments and declared that measurement precision was of no interest. This, of course, is ludicrous. It’s OK to produce random numbers as long as the UI looks cool for students?

Innovation in Psychometrics at ASC

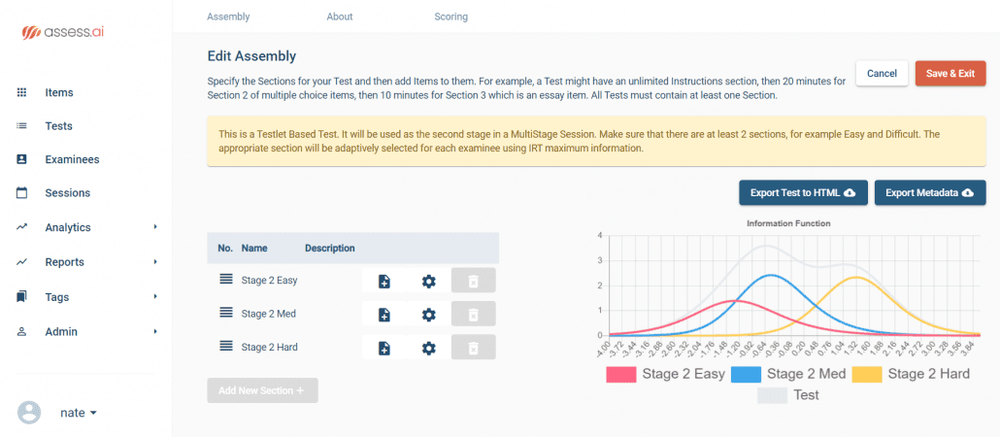

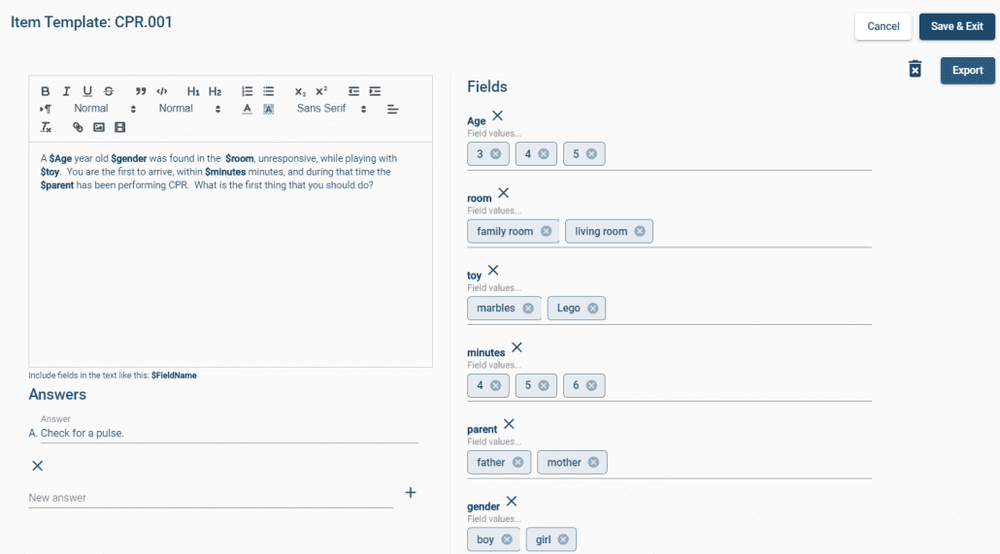

Much of the innovation at ASC targets the issue I have presented here. I originally developed Iteman 4 and Xcalibre 4 for exactly this kind of organization. I wanted to enable an organization to produce professional psychometric analysis reports on its assessments without paying massive amounts of money to a consultant. I also wanted to save time: other software programs can produce similar results, but they dump them into text files or Excel spreadsheets instead of Microsoft Word, which is of course what everyone uses to draft a report.
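As a rough illustration of what a classical item analysis report summarizes, the sketch below computes two statistics such reports commonly include: item difficulty (the P value, or proportion of examinees answering correctly) and a corrected point-biserial correlation between the item score and the total score on the remaining items. This is a generic textbook version under the assumption of dichotomously scored (0/1) items; it is not a description of how Iteman 4 computes or formats its output, and the function names and tiny response matrix are hypothetical.

```python
import math


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation; returns nan if either variable has zero variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")
    return sxy / math.sqrt(sxx * syy)


def item_statistics(responses: list[list[int]]) -> list[tuple[float, float]]:
    """Classical P value and corrected point-biserial for each 0/1-scored item.

    responses[p][i] is 1 if examinee p answered item i correctly, else 0.
    """
    n_items = len(responses[0])
    totals = [sum(row) for row in responses]
    results = []
    for i in range(n_items):
        item = [row[i] for row in responses]
        p_value = sum(item) / len(item)
        # Correlate with the total on the *other* items so the item
        # is not correlated with itself (the "corrected" version).
        rest = [t - x for t, x in zip(totals, item)]
        results.append((p_value, pearson(item, rest)))
    return results


# Hypothetical responses: 4 examinees x 3 items.
responses = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
]
for i, (p, rpbis) in enumerate(item_statistics(responses), start=1):
    print(f"Item {i}: P = {p:.2f}, corrected r_pbis = {rpbis:.2f}")
```

The corrected correlation is a design choice: with very short tests, including the item in its own total noticeably inflates the point-biserial.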

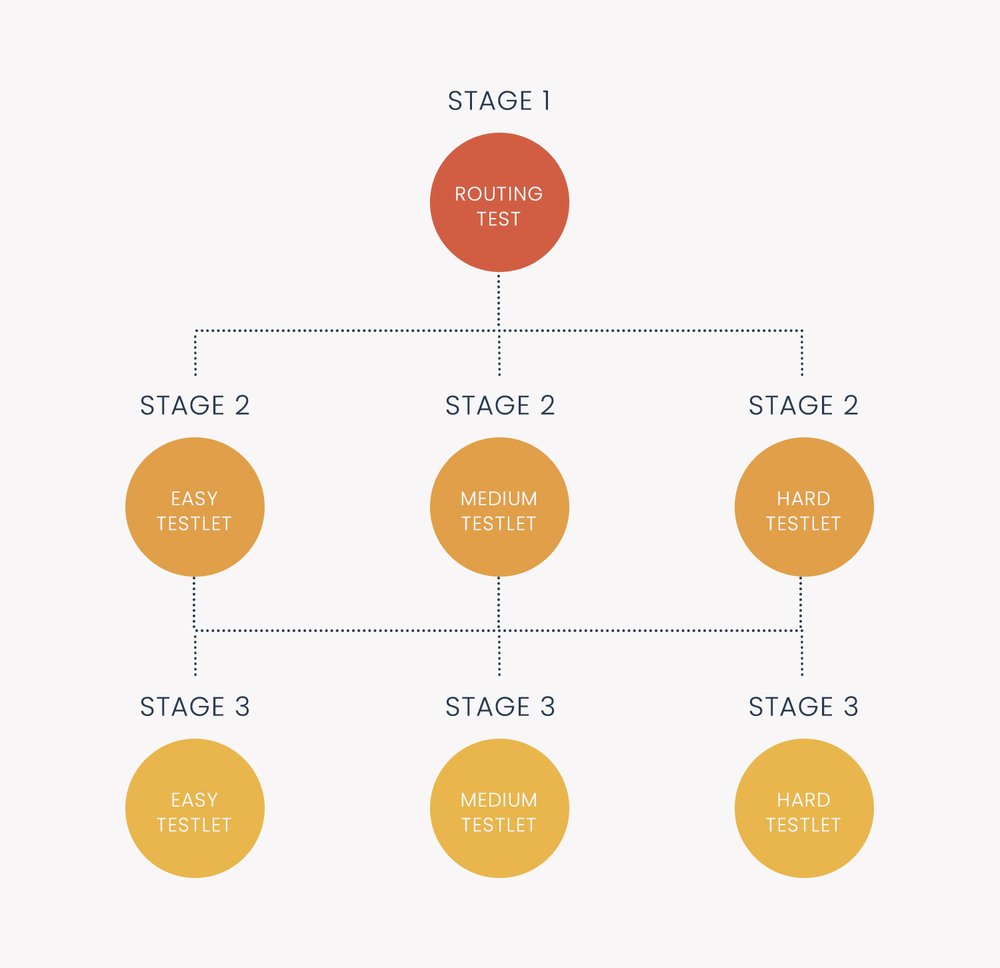

Much of our FastTest platform is designed with a similar bent. Tired of running an Angoff study with items on a projector and SMEs writing their ratings with pencil and paper, only to have them transcribed later? You can do this online. Moreover, because it is online, you can work with SMEs remotely rather than paying to fly them to a central office. Want to publish an adaptive (CAT) exam without writing code? We have it built directly into our test publishing interface.
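For context on what “adaptive” means here, one common way a CAT chooses the next item is maximum Fisher information: among the items not yet administered, pick the one that is most informative at the examinee’s current ability estimate. The sketch below assumes a three-parameter logistic (3PL) IRT model on the logistic metric (no 1.7 scaling constant) and a hypothetical three-item bank. It covers only item selection, not ability estimation, exposure control, or stopping rules, and it is not a description of FastTest’s internal algorithm.

```python
import math


def p_3pl(theta: float, a: float, b: float, c: float) -> float:
    """3PL probability of a correct response (logistic metric, no D = 1.7)."""
    return c + (1 - c) / (1 + math.exp(-a * (theta - b)))


def info_3pl(theta: float, a: float, b: float, c: float) -> float:
    """Fisher information of a 3PL item at ability theta."""
    p = p_3pl(theta, a, b, c)
    q = 1 - p
    return a ** 2 * (q / p) * ((p - c) / (1 - c)) ** 2


def select_next_item(theta: float, bank: dict, administered: set):
    """Return the id of the unadministered item with maximum information at theta."""
    candidates = [item_id for item_id in bank if item_id not in administered]
    if not candidates:
        return None
    return max(candidates, key=lambda item_id: info_3pl(theta, *bank[item_id]))


# Hypothetical bank: item id -> (a, b, c).
bank = {
    "easy": (1.0, -2.0, 0.2),
    "medium": (1.0, 0.0, 0.2),
    "hard": (1.0, 2.0, 0.2),
}
print(select_next_item(0.0, bank, administered=set()))       # medium
print(select_next_item(0.0, bank, administered={"medium"}))  # easy
```

In a full CAT loop, the ability estimate would be updated after each response and the selection repeated until a stopping rule is met.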

Back to My Original Point

So the title asks “What is Psychometrics Supposed to be Doing?” with regard to psychometrics innovation. My answer, of course, is improving assessment. The issue I take with mathematically advanced research is that it is relevant only to the top 5% of organizations I mentioned. As psychometricians, it is also our duty to find better ways to help the other 95%.

What else can we be doing? I think the future here is automation. Iteman 4 and Xcalibre 4, as well as FastTest, were really machine learning and automation platforms before those things were so in vogue. As the SIOP article I mentioned at the beginning points out, other scholarly areas like Big Data are gaining more real-world influence, even when they are doing things Psychometrics has done for a long time. Item Response Theory is a form of machine learning, and it’s been around for 50 years!
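To make the machine learning comparison concrete: under the Rasch model, the probability of a correct response is a logistic function of ability minus item difficulty, so calibrating an item is essentially fitting a one-parameter logistic regression by maximum likelihood. The sketch below estimates a single item’s difficulty with Newton-Raphson, under the simplifying assumption that examinee abilities are already known. Real calibration programs such as Xcalibre estimate item and person parameters with more elaborate methods, and nothing here describes their internals; the function names and data are hypothetical.

```python
import math


def rasch_p(theta: float, b: float) -> float:
    """Rasch probability of a correct response."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def estimate_difficulty(thetas, responses, tol=1e-10, max_iter=50) -> float:
    """Maximum likelihood difficulty for one item, treating abilities as known."""
    if all(u == 1 for u in responses) or all(u == 0 for u in responses):
        raise ValueError("No finite MLE for an all-correct or all-incorrect item")
    b = 0.0
    for _ in range(max_iter):
        probs = [rasch_p(t, b) for t in thetas]
        # Gradient of the log-likelihood with respect to b: sum(P - u).
        grad = sum(p - u for p, u in zip(probs, responses))
        # Second derivative: -sum(P(1 - P)), always negative (concave likelihood).
        hess = -sum(p * (1 - p) for p in probs)
        step = grad / hess
        b -= step  # Newton-Raphson update
        if abs(step) < tol:
            break
    return b


thetas = [-1.0, 0.0, 1.0, 2.0]
responses = [0, 1, 0, 1]
b_hat = estimate_difficulty(thetas, responses)
print(round(b_hat, 3))  # 0.5
```

At the solution, the model’s expected number correct equals the observed number correct, which is the same score equation a logistic regression solves.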