Want to get a graduate degree in psychometrics, measurement, and assessment? This field is definitely a small niche in the academic world, despite being an integral part of everyone’s life. When I’m trying to explain what I do to people from outside the field, I’m often asked something like, “Where do you even go to study something like that?” I’m also frequently asked by people already in the field where they can go to get a graduate degree, especially one covering advanced topics like item response theory or adaptive testing.

Well, there are indeed a good number of Ph.D. programs, though they have a range of titles, as you can see below. This can make them tough to find even if you are specifically looking for them.

Note: This list is not intended to be comprehensive, but rather a sampling of the most well-known or unique programs.

If you want to do deeper research and are actually shopping for a grad school, I highly recommend you check out a comprehensive list of programs on the NCME website. I also recommend the SIOP list of grad programs; they are for I/O psychology but many of them have professors with expertise in things like assessment validation or item response theory.

How to choose a graduate degree in psychometrics?

Here’s an oversimplification of how I see the selection of education…

- When you are in high school and selecting a university or college, you are selecting a school.

- When you are 18-20 and selecting a major, you are selecting a department.

- When you are selecting where to pursue a Master’s, you are selecting a program.

- When you are selecting where to pursue a Ph.D., you are selecting an advisor.

The key point: When you do a Ph.D., you are going to spend a lot of time working one on one with your advisor, both on your dissertation and, likely, on other research projects. It is therefore vital that you select someone who not only aligns with your interests (otherwise you’ll be bored and disengaged) but whom you also simply like enough on a personal level to work with one on one for several years! This is arguably the most important thing to consider when choosing where to pursue your graduate degree.

University of Minnesota: Quantitative/Psychometrics Program (Psychology) and Quantitative Foundations of Educational Research (Education)

I’m partial to this one since it is where I completed my Ph.D., with Prof. David J. Weiss in the Psychology Department. UMN is unusual in that it actually has two separate graduate programs in psychometrics: one in Psychology, which has since shifted its focus toward quantitative psychology, and one in the Education department.

Website (Psychology): https://cla.umn.edu/psychology/graduate/areas-specialization/quantitativepsychometric-methods-qpm

Website (Education): https://edpsych.umn.edu/academics/quantitative-methods

University of Massachusetts: Research, Educational Measurement, and Psychometrics (REMP)

For many years, if you wanted to learn item response theory, you read Item Response Theory: Principles and Applications by Hambleton and Swaminathan (1985). Both authors were longtime professors at UMass, which speaks to the quality of the program. Both have since retired, but the faculty remains excellent. Also note that the program website has a helpful page on psychometric resources and software.

Website: https://www.umass.edu/remp/

University of Iowa: Center for Advanced Studies in Measurement and Assessment

This program is in the Education department and has the advantage of being in one of the epicenters of the industry: the testing giant ACT is headquartered only a few miles away, Pearson has an office in town, and the Iowa Tests of Basic Skills are an offshoot of the university itself. Like UMass, Iowa has a website with educational materials and useful software.

Website: https://education.uiowa.edu/casma

University of Wisconsin-Madison

UW has well-known professors like Daniel Bolt and James Wollack. Plus, Madison has a reputation as a fun city for its size. The large K-12 testing company Renaissance Learning is headquartered only a few miles away.

Website: https://edpsych.education.wisc.edu/category/quantitative-methods/

University of Nebraska – Lincoln: Quantitative, Qualitative & Psychometric Methods

For many years, the cornerstones of this program were the husband-and-wife duo of James Impara and Barbara Plake. They’ve now retired, but excellent new professors have joined. In addition, UNL is the home of the Buros Institute.

Website: https://cehs.unl.edu/edpsych/quantitative-qualitative-psychometric-methods/

University of Kansas: Research, Evaluation, Measurement, and Statistics

Not far from Lincoln, NE is Lawrence, Kansas. The program here has been around a long time, with excellent faculty. Students have an option for practical experience working at the Achievement and Assessment Institute.

Website: https://epsy.ku.edu/academics/educational-psychology-research/phd

Michigan State University: Measurement and Quantitative Methods

Like most of the other programs on this list, MSU is in a vibrant college town. Its focus is more on quantitative methods than on assessment.

Website: https://education.msu.edu/

UNC-Greensboro: Educational Research, Measurement, and Evaluation

While most programs listed here are in the northern USA, this one is in the South, where such programs are smaller and fewer. UNCG’s program, however, is quite strong.

Website: https://www.uncg.edu/degrees/educational-research-measurement-and-evaluation-ph-d/

University of Texas: Quantitative Methods

UT, like some of the other programs, benefits from nearby industry: Pearson’s educational assessment arm is located in Austin.

Website: https://education.utexas.edu/departments/educational-psychology/edp-programs/quantitative-methods/

Boston College: Measurement, Evaluation, Statistics, and Assessment (MESA)

This program is involved in international research such as TIMSS & PIRLS.

Website: https://www.bc.edu/bc-web/schools/lynch-school/academics/departments/mesa.html

Morgan State University: Graduate Program in Psychometrics

Morgan State is unique in that it is a historically Black university with an excellent program dedicated to psychometrics.

Website: https://www.morgan.edu/psychometrics

Fordham University: Psychometrics and Quantitative Psychology

Fordham has an excellent program, located in New York City.

James Madison University: Assessment and Measurement

While not as large as the major public universities on this list, JMU has a strong, practically focused program in psychometrics.

Website: https://www.jmu.edu/grad/programs/snapshots/psychology-assessment-and-measurement.shtml

Outside the US

University of Alberta: Measurement, Evaluation, and Data Science

This is arguably the leading program in all of Canada.

University of British Columbia: Measurement, Evaluation, and Research Methodology

UBC is home to Bruno Zumbo, one of the most prolific researchers in the field.

Website: http://ecps.educ.ubc.ca/program/measurement-evaluation-and-research-methodology/

University of Twente: Research Methodology, Measurement, and Data Analysis

For decades, Twente has been the center of psychometrics in Europe, with professors like Wim van der Linden, Theo Eggen, Cees Glas, and Bernard Veldkamp. It’s also linked with Cito, the premier testing company in Europe, which provides excellent opportunities to apply your skills.

Website: https://www.utwente.nl/en/bms/omd/

University of Amsterdam: Psychological Methods

This program has a number of well-known professors, with expertise in both psychometrics and quantitative psychology.

University of Cambridge: The Psychometrics Centre

The Psychometrics Centre at Cambridge includes professors John Rust and David Stillwell. It hosted the 2015 IACAT conference and is home to the open-source CAT platform Concerto.

Website: https://www.psychometrics.cam.ac.uk/

KU Leuven: Research Group of Quantitative Psychology and Individual Differences

This is home to well-known researchers such as Paul De Boeck.

Website: https://ppw.kuleuven.be/okp/home/

University of Western Australia: Pearson Psychometrics Laboratory

This is home to David Andrich, best known for the Rasch Rating Scale Model.

Website: https://www.uwa.edu.au/schools/medicine/psychometric-laboratory

University of Oslo: Assessment, Measurement, and Evaluation

This program offers an opportunity to study assessment and psychometrics in the Nordic countries.

Website: https://www.uio.no/english/studies/programmes/assessment-evaluation-master

Online

Very few programs offer graduate training in psychometrics that is 100% online. Here’s the only one I know of. If you know of another, please get in touch with me.

University of Illinois at Chicago: Measurement, Evaluation, Statistics, and Assessment

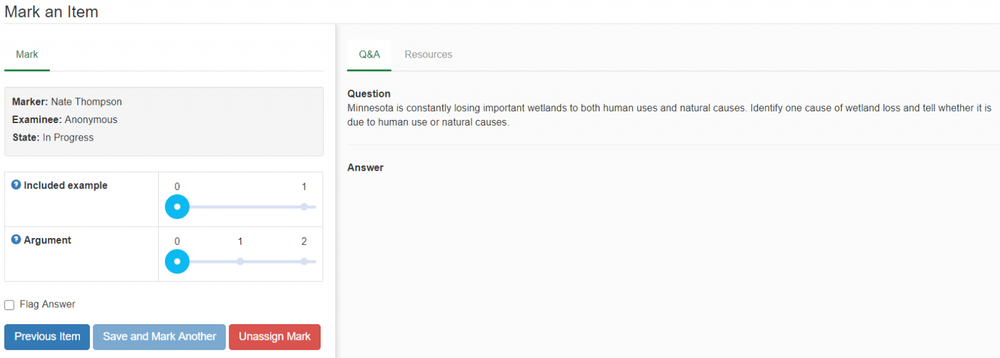

This program is of particular interest because it has an online Master’s program, which allows you to get a high-quality graduate degree in psychometrics from just about anywhere in the world. One of my colleagues here at ASC has recently enrolled in this program.

We hope this article helps you find the best institution for your graduate degree in psychometrics.