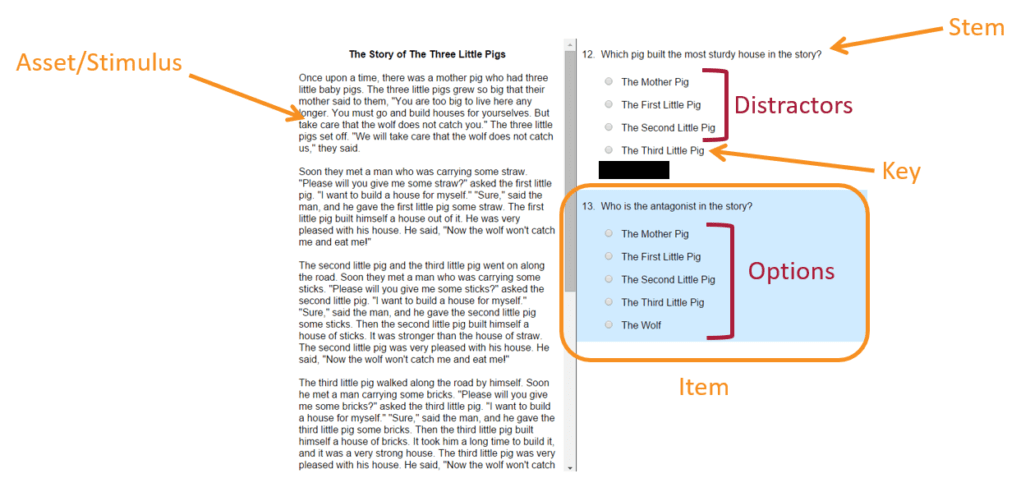

Item banking is the purposeful creation of a database of assessment items that serves as a central repository for all test content, improving efficiency and quality. The term item refers to what many call questions, though items are not restricted to questions and can include problems to solve or situations to evaluate. As a critical part of the test development cycle, item banking is the foundation for developing valid, reliable content and defensible test forms.

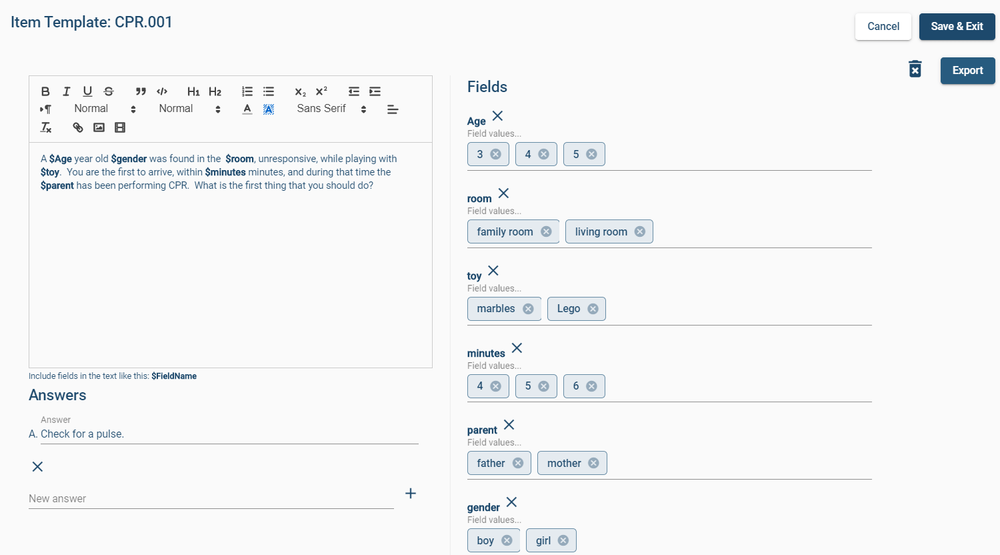

Automated item banking systems, such as Assess.ai or FastTest, significantly reduce the administrative time needed to develop and review items and to assemble and publish tests, while producing exams with greater reliability and validity. Contact us to request a free account.

What is Item Banking?

While there are no absolute standards for creating and managing item banks, best practice guidelines are emerging. Here are the essentials you should be looking for:

Items are reusable objects; when selecting an item banking platform, ensure that items can be used more than once. Ideally, item performance should be tracked not only within a test form but across test forms as well.

Item history and usage are tracked; the usage of a given item, whether it is actively on a test form or dormant waiting to be assigned, should be easily accessible for test developers to assess, as the over-exposure of items can reduce the validity of a test form. As you deliver your items, their content is exposed to examinees. Upon exposure to many examinees, items can then be flagged for retirement or revision to reduce cheating or teaching to the test.

Items can be sorted; as test developers select items for a test form, it is imperative that they can sort items based on their content area or other categorization methods, so as to select a sample of items that is representative of the full breadth of constructs we intend to measure.

Item versions are tracked; as items appear on test forms, their content may be revised for clarity. Any such changes should be tracked and versions of the same item should have some link between them so that we can easily review the performance of earlier versions in conjunction with current versions.

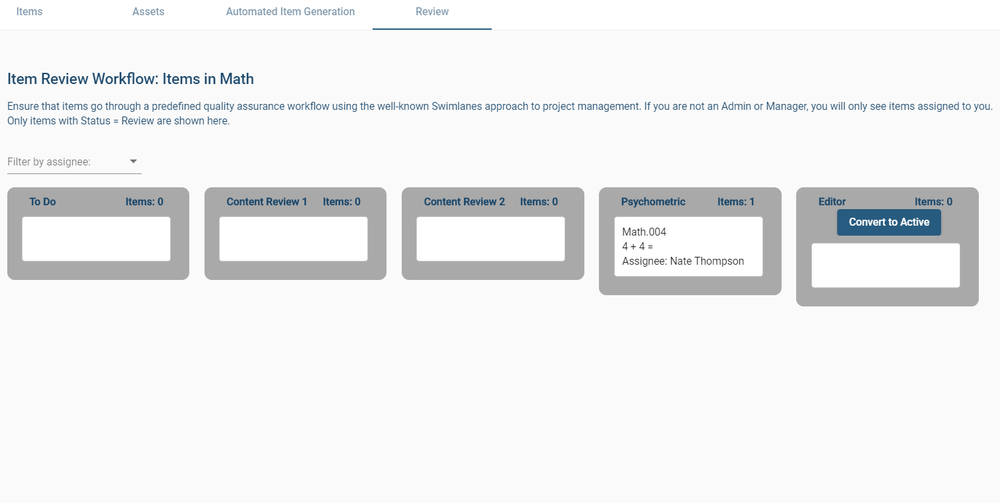

Review process workflow is tracked; as items are revised and versioned, it is imperative that the changes in content and the users who made these changes are tracked. In post-test assessment, there may be a need for further clarification, and the ability to pinpoint who took part in reviewing an item can expedite that process.

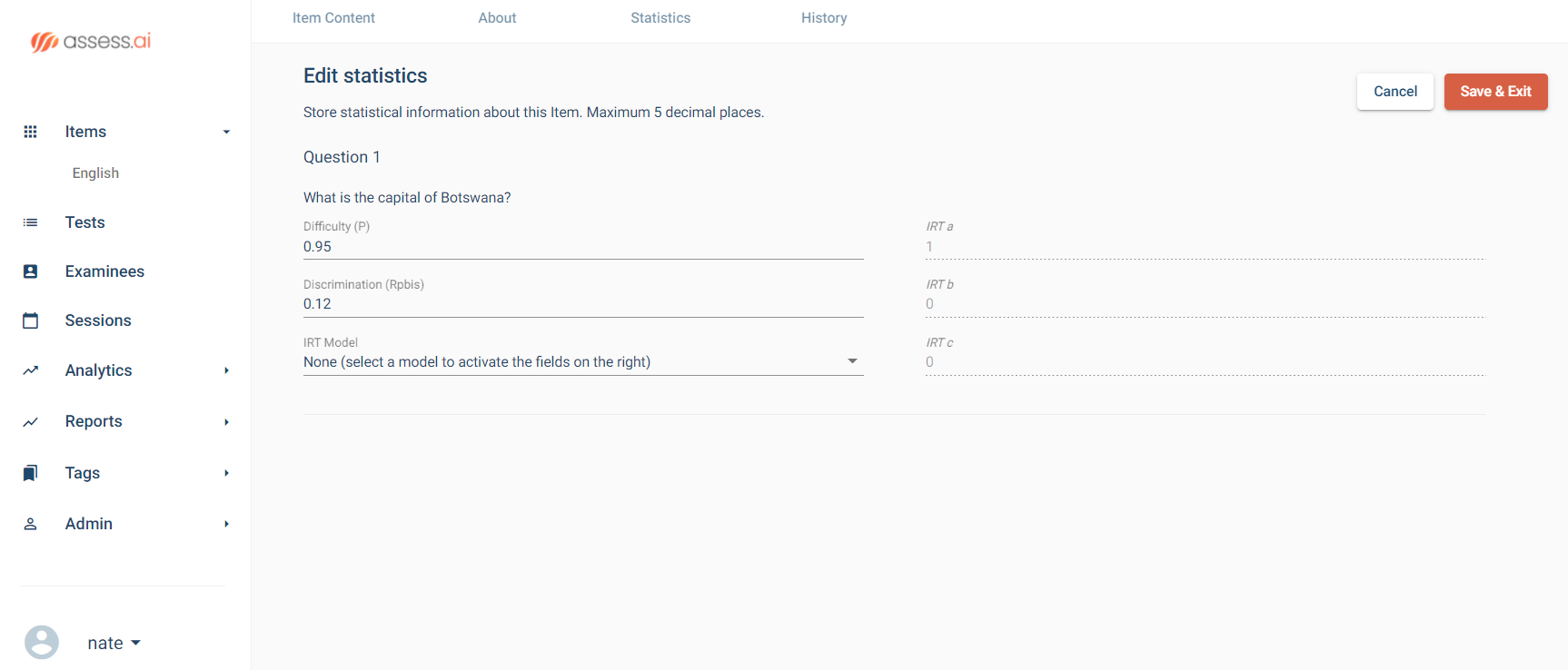

Metadata is recorded; any relevant information about an item should be recorded and stored with the item. The most common applications for metadata that we see are author, source, description, content area, depth of knowledge, IRT parameters, and CTT statistics, but there are likely many data points specific to your organization that are worth storing.
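As one illustration of the usage-tracking essential above, the following minimal sketch records which forms an item has appeared on and how many examinees have seen it, then flags items past an exposure limit. The `ItemUsage` class, its field names, and the limit of 1000 exposures are hypothetical choices made for this example, not features of any particular platform.

```python
from dataclasses import dataclass, field


@dataclass
class ItemUsage:
    """Hypothetical usage record for one item in the bank."""
    item_id: str
    forms: set = field(default_factory=set)  # forms the item has appeared on
    exposures: int = 0                       # total examinees who saw the item

    def record_administration(self, form_id: str, examinees: int) -> None:
        """Log one delivery of the item on a given form."""
        self.forms.add(form_id)
        self.exposures += examinees


def flag_overexposed(usages, max_exposures):
    """Return IDs of items seen by more examinees than the chosen limit."""
    return [u.item_id for u in usages if u.exposures > max_exposures]


algebra = ItemUsage("MATH-ALG-0001")
algebra.record_administration("FORM-A", 600)
algebra.record_administration("FORM-B", 550)

geometry = ItemUsage("MATH-GEO-0001")
geometry.record_administration("FORM-A", 600)

# The limit of 1000 is an assumed policy value, not a standard.
print(flag_overexposed([algebra, geometry], max_exposures=1000))
```

An appropriate exposure limit depends on the stakes of the exam and the size of the bank, so it would normally be set by policy rather than hard-coded.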

Managing an Item Bank

Names are important. As you create or import your item banks, it is important to identify each item with a unique but recognizable name. Naming conventions should reflect your bank’s structure and should include numbers with leading zeros to support true numerical sorting. You might also want to add other pieces of information to the name. If importing, the system should be smart enough to recognize duplicates.
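To see why leading zeros matter, here is a small sketch. The `BANK-AREA-NUMBER` convention and the helper names are assumptions chosen for illustration; any convention that mirrors your bank's structure would work the same way.

```python
from collections import Counter


def make_item_name(bank: str, area: str, sequence: int, width: int = 4) -> str:
    """Build a zero-padded name such as 'MATH-ALG-0007' (hypothetical convention)."""
    return f"{bank}-{area}-{sequence:0{width}d}"


def find_duplicate_names(names):
    """Return names that occur more than once, e.g. in an import file."""
    counts = Counter(names)
    return sorted(name for name, n in counts.items() if n > 1)


unpadded = [f"MATH-ALG-{n}" for n in (1, 2, 10, 100)]
padded = [make_item_name("MATH", "ALG", n) for n in (1, 2, 10, 100)]

# Plain text sorting places 10 and 100 before 2 when names are not padded.
print(sorted(unpadded))
# With leading zeros, text order matches numeric order.
print(sorted(padded))

incoming = padded + ["MATH-ALG-0002"]
print(find_duplicate_names(incoming))
```

The padding width limits how many items a category can hold before sorting breaks again, so it is worth choosing a width with room to grow.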

Search and filter. The system should also have a reliable sorting mechanism.

Prepare for the Future: Store Extensive Metadata

Metadata is valuable. As you create items, take the time to record simple metadata like author and source. Having this information can prove very useful once the original item writer has moved to another department or left the organization. Later in your test development life cycle, as you deliver items, you can aggregate and record item statistics. Values like discrimination and difficulty are fundamental to creating better tests, driving reliability and validity.
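As a rough sketch of how difficulty and discrimination can be derived from delivery data, the example below assumes dichotomously scored responses (1 = correct, 0 = incorrect) with one row per examinee. Difficulty is taken as the proportion correct, and discrimination as the correlation between the item score and the total score on the remaining items; other definitions exist, and the data and function names here are made up for illustration.

```python
from statistics import mean, pstdev


def pearson(x, y):
    """Pearson correlation; returns NaN when either variable has no variance."""
    sx, sy = pstdev(x), pstdev(y)
    if sx == 0 or sy == 0:
        return float("nan")
    mx, my = mean(x), mean(y)
    cov = mean([(a - mx) * (b - my) for a, b in zip(x, y)])
    return cov / (sx * sy)


def item_statistics(responses):
    """Compute classical difficulty and discrimination for each item."""
    totals = [sum(row) for row in responses]
    stats = []
    for j in range(len(responses[0])):
        scores = [row[j] for row in responses]
        # Rest score: total score with the item itself removed.
        rest = [t - s for t, s in zip(totals, scores)]
        stats.append({
            "difficulty": mean(scores),               # proportion correct
            "discrimination": pearson(scores, rest),  # item-rest correlation
        })
    return stats


# Illustrative data: 6 examinees (rows) by 4 items (columns).
responses = [
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 1, 1],
    [0, 0, 0, 1],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
]

for index, item in enumerate(item_statistics(responses), start=1):
    print(index, round(item["difficulty"], 3), round(item["discrimination"], 3))
```

Real samples would be far larger than six examinees; statistics from small samples are unstable and are best stored alongside the sample size.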

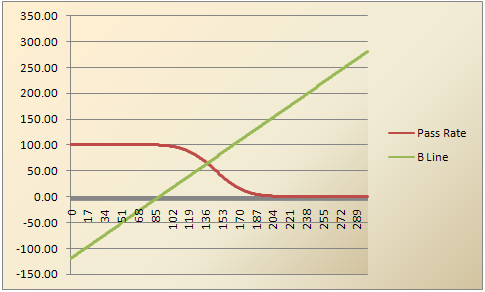

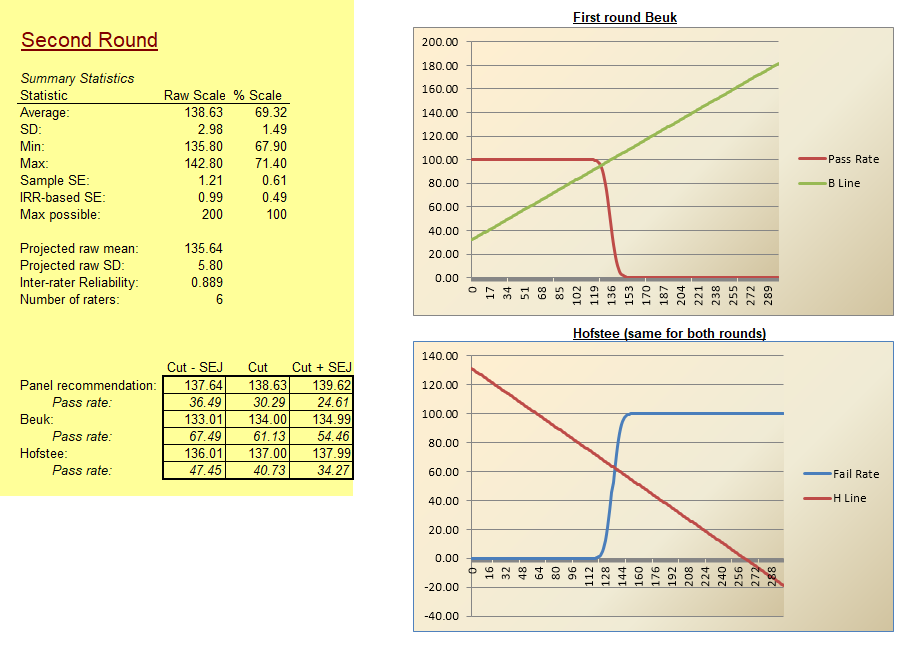

Item statistics are used in the assembly of test forms. Classical statistics can be used to estimate the mean, standard deviation, reliability, standard error, and pass rate of a form.
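The form-level statistics named above can be sketched as follows, again assuming a dichotomously scored response matrix. Reliability is computed here as coefficient alpha (equivalent to KR-20 for 0/1 items) and the standard error of measurement as SD × √(1 − reliability); the cut score of 2 and the data are hypothetical.

```python
from math import sqrt
from statistics import mean, pvariance


def form_summary(responses, cut_score):
    """Summarize a form from a 0/1 response matrix (rows = examinees)."""
    k = len(responses[0])
    totals = [sum(row) for row in responses]
    total_var = pvariance(totals)
    item_vars = [pvariance([row[j] for row in responses]) for j in range(k)]
    # Coefficient alpha; equals KR-20 when items are scored 0/1.
    alpha = (k / (k - 1)) * (1 - sum(item_vars) / total_var)
    sd = sqrt(total_var)
    return {
        "mean": mean(totals),
        "sd": sd,
        "reliability": alpha,
        "sem": sd * sqrt(1 - alpha),  # standard error of measurement
        "pass_rate": mean([1 if t >= cut_score else 0 for t in totals]),
    }


# Illustrative data: 6 examinees (rows) by 4 items (columns).
responses = [
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 1, 1],
    [0, 0, 0, 1],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
]

summary = form_summary(responses, cut_score=2)  # cut score is an assumed value
for name, value in summary.items():
    print(name, round(value, 3))
```

This sketch assumes at least two items and some variance in total scores; a production version would need to guard against both edge cases.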

Item response theory parameters can come in handy when calculating test information and standard error functions. Data from both psychometric theories can be used to pre-equate multiple forms.

In the event that your organization decides to publish an adaptive test, using computerized adaptive testing (CAT) delivery, item parameters for each item will be essential, because they are used for intelligent selection of items and scoring of examinees. Additionally, in the event that the integrity of your test or scoring mechanism is ever challenged, documentation of validity is essential to defensibility, and the storage of metadata is one such vital piece of documentation.
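To illustrate how stored item parameters drive item selection, here is a sketch of one common rule: administer the unused item with the most information at the examinee's current ability estimate. It assumes a two-parameter logistic model and a hypothetical three-item bank; operational CAT engines typically add exposure control and content constraints, and nothing here describes how a specific platform selects items.

```python
from math import exp


def information_2pl(theta, a, b):
    """Item information under an assumed 2PL model."""
    p = 1 / (1 + exp(-a * (theta - b)))
    return a * a * p * (1 - p)


def select_next_item(theta, bank, administered):
    """Pick the unused item with maximum information at theta, or None."""
    candidates = {name: params for name, params in bank.items()
                  if name not in administered}
    if not candidates:
        return None
    return max(candidates,
               key=lambda name: information_2pl(theta, *candidates[name]))


# Hypothetical bank: item name -> (a, b) parameters.
bank = {
    "ITEM-0001": (1.0, -1.0),
    "ITEM-0002": (1.2, 0.0),
    "ITEM-0003": (0.8, 1.5),
}

print(select_next_item(0.0, bank, administered=set()))
print(select_next_item(0.0, bank, administered={"ITEM-0002"}))
print(select_next_item(2.0, bank, administered=set()))
```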

Increase Content Quality: Track Workflow

Utilize a review workflow to increase quality. Using a standardized review process will ensure that all items are vetted in a similar manner. Have a step in the process for grammar, spelling, and syntax review, as well as content review by a subject matter expert. As an item progresses through the workflow, its development should be tracked, as workflow results also serve as validity documentation.

Accept comments and suggestions from a variety of sources. It is not uncommon for each item reviewer to view an item through their distinctive lens. Having a diverse group of item reviewers stands to benefit your test-takers, as they are likely to be diverse as well!

Keep Your Items Organized: Categorize Them

Identify items by content area. Creating a content hierarchy can also help you to organize your item bank and ensure that your test covers the relevant topics. Most often, we see content areas defined first by an analysis of the construct(s) being tested. In the event of a high school science test, this may include the evaluation of the content taught in class. For a high-stakes certification exam, it almost always includes a job-task analysis. Both methods produce what is called a test blueprint, indicating how important various content areas are to the demonstration of knowledge in the areas being assessed.

Once content areas are defined, we can assign items to levels or categories based on their content. As you are developing your test, and invariably referring back to your test blueprint, you can use this categorization to determine which items from each content area to select.
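The selection step described above can be sketched as follows. The blueprint is expressed here as a required number of items per content area, and items are drawn at random within each area; both are simplifying assumptions, since real assembly usually also weighs item statistics. All names and data are hypothetical.

```python
import random
from collections import defaultdict


def assemble_form(bank, blueprint, seed=0):
    """Draw the blueprint's required number of items from each content area."""
    by_area = defaultdict(list)
    for item in bank:
        by_area[item["content_area"]].append(item["name"])

    rng = random.Random(seed)  # seeded so the draw is repeatable
    form = []
    for area, needed in blueprint.items():
        pool = by_area[area]
        if len(pool) < needed:
            raise ValueError(f"Not enough items in {area}: need {needed}, have {len(pool)}")
        form.extend(rng.sample(pool, needed))
    return form


# Hypothetical bank: 5 biology, 4 chemistry, and 3 physics items.
bank = (
    [{"name": f"SCI-BIO-{n:04d}", "content_area": "Biology"} for n in range(1, 6)]
    + [{"name": f"SCI-CHE-{n:04d}", "content_area": "Chemistry"} for n in range(1, 5)]
    + [{"name": f"SCI-PHY-{n:04d}", "content_area": "Physics"} for n in range(1, 4)]
)

# Hypothetical blueprint: number of items required per content area.
blueprint = {"Biology": 3, "Chemistry": 2, "Physics": 1}

print(assemble_form(bank, blueprint))
```

Raising an error when an area has too few items makes gaps in the bank visible early, which is usually preferable to silently publishing a form that does not match the blueprint.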

Why Item Banking?

There is no doubt that item banking is a key aspect of developing and maintaining quality assessments. Utilizing best practices and caring for your items throughout the test development life cycle will pay great dividends, as it increases the reliability, validity, and defensibility of your assessment. Moreover, good item banking will make the job easier and more efficient, thus reducing the cost of item development and test publishing.

Ready to improve assessment quality through item banking?

Visit our Contact Us page, where you can request a demonstration or a free account (up to 500 items).