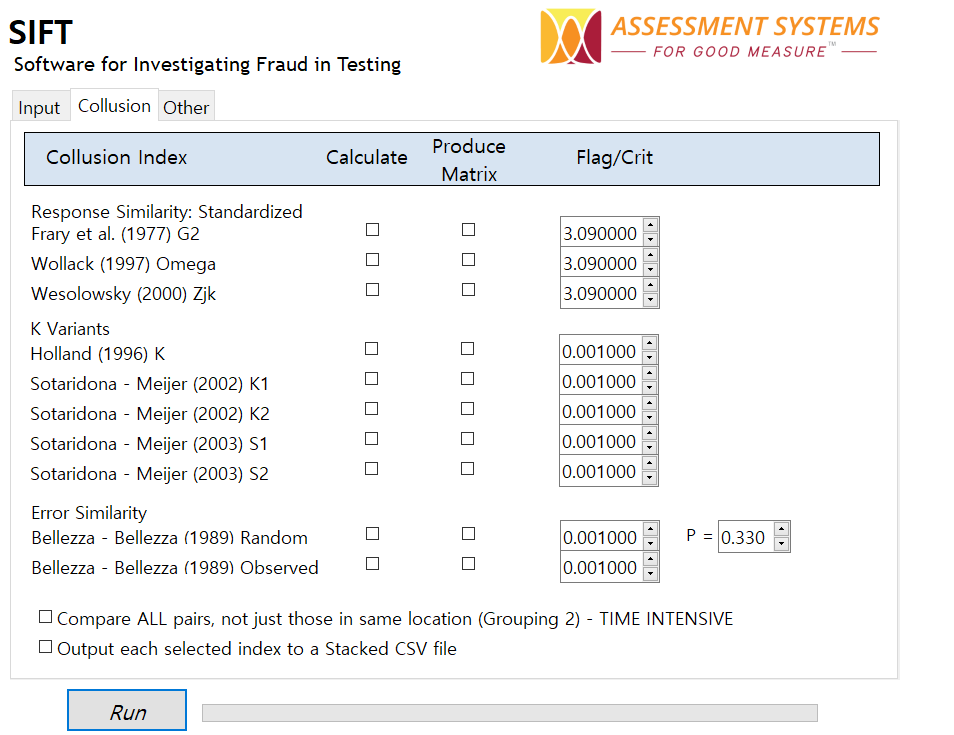

SIFT: Software for Investigating Fraud in Testing

Are you concerned about test cheating and other suspicious behavior? SIFT is free software that implements modern psychometric data forensics to help you find evidence of test fraud, including:

- Item harvesting

- Collusion (students cheating off each other)

- Undue influence (e.g., teacher help)

- Brain dump sites

- Pre-knowledge

- Low motivation

SIFT is designed to bring advanced psychometric forensics to any testing organization. There is extensive scientific research on the topic, but historically, anyone who wanted to run those analyses had to write the code themselves or hire an expensive consultant. Until now!

Need an expert psychometrician to run SIFT, interpret the results, and deliver a professional report? We do that too. Get in touch; we’d love to hear about your exams.

Download for free

SIFT is available at no cost. We believe that test integrity is a vital component of validity, and we encourage you to check your data. Fill out the form and you will receive an automated email with a link to download SIFT. The download includes example files and a detailed manual that also explains the various analyses.

Because SIFT is free, it is provided without any support, training, or warranties. Prefer to hire a consultant? Contact us and schedule a time to chat with an expert.

Types of psychometric data forensics

Intra-examinee

Is there anything unusual about this examinee on their own, without comparing them to anyone else? Did they answer “C” to every item? Did they spend 10 minutes on each of the first 5 items and skip the rest? Did they get a high score in a suspiciously short time?
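As a rough illustration of two of these intra-examinee checks, here is a minimal Python sketch. It is not SIFT's implementation: the `Examinee` record, the helper names `longest_run` and `intra_examinee_flags`, and the thresholds (`min_run`, `high_score`, `fast_minutes`) are hypothetical choices made for this example. It flags a long streak of the same answer and a high score earned in a short time.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Examinee:
    examinee_id: str
    responses: list[str]  # selected option per item, e.g. ["A", "C", ...]
    score: float          # proportion correct, 0.0 to 1.0
    minutes: float        # total testing time in minutes


def longest_run(responses: list[str]) -> int:
    """Length of the longest streak of identical consecutive answers."""
    longest = current = 1 if responses else 0
    for prev, cur in zip(responses, responses[1:]):
        current = current + 1 if cur == prev else 1
        longest = max(longest, current)
    return longest


def intra_examinee_flags(e: Examinee, min_run: int = 10,
                         high_score: float = 0.9,
                         fast_minutes: float = 15.0) -> list[str]:
    """Return reasons this examinee looks unusual on their own (hypothetical cutoffs)."""
    flags = []
    run = longest_run(e.responses)
    if run >= min_run:
        flags.append(f"same answer {run} times in a row")
    # High score combined with a short total testing time
    if e.score >= high_score and e.minutes <= fast_minutes:
        flags.append("high score in a short time")
    return flags


print(intra_examinee_flags(Examinee("S001", ["C"] * 20, 0.25, 12.0)))
# ['same answer 20 times in a row']
```

In practice, cutoffs like these would be chosen from the distribution of your own examinee data rather than fixed in advance.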

Inter-examinee

Is there anything unusual about this examinee compared to others? Do nearby examinees have almost the same response vector? It’s not just whether other students got the same items wrong, but whether they chose the same wrong answers to those items.
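The idea of matching wrong answers can be sketched as a count of identical incorrect responses for each pair of examinees. This Python example is a simplified stand-in, not SIFT's method: published collusion indices also estimate how likely such matches are by chance. The names `identical_incorrect` and `flag_pairs`, the `threshold` parameter, and the sample data are hypothetical.

```python
from __future__ import annotations

from itertools import combinations


def identical_incorrect(a: list[str], b: list[str], key: list[str]) -> int:
    """Count items where both examinees chose the same wrong option.

    Omitted responses (empty strings) are not counted as matches.
    """
    return sum(1 for x, y, k in zip(a, b, key) if x and x == y and x != k)


def flag_pairs(responses: dict[str, list[str]], key: list[str],
               threshold: int) -> list[tuple[str, str, int]]:
    """Return (id_a, id_b, count) for every pair at or above the threshold."""
    flagged = []
    # Compare every unordered pair of examinees once, in sorted ID order
    for (id_a, a), (id_b, b) in combinations(sorted(responses.items()), 2):
        count = identical_incorrect(a, b, key)
        if count >= threshold:
            flagged.append((id_a, id_b, count))
    return flagged


key = list("ABCDABCD")
responses = {
    "S1": list("ABDDCBCA"),
    "S2": list("ABDDCBCA"),  # same three wrong answers as S1
    "S3": list("ABCDABCD"),  # all correct
}
print(flag_pairs(responses, key, threshold=3))  # [('S1', 'S2', 3)]
```

Comparing every pair grows quadratically with the number of examinees, so restricting comparisons to "nearby" examinees (for example, the same room or test center) is one way to keep the check focused.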

Group-level

Group-level analysis provides additional insight. Do certain schools or teachers do unusually well? Do certain test centers have crazy-high pass rates but short test times on average?
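A group-level screen can be sketched by comparing each test center's pass rate and average testing time with the overall figures. The function names, the `(center_id, passed, minutes)` record layout, and the cutoffs (`rate_margin`, `time_fraction`, `min_n`) below are illustrative assumptions, not SIFT's settings; a real analysis would use a proper statistical test rather than fixed margins.

```python
from collections import defaultdict


def center_summary(records):
    """Map center_id -> (pass_rate, mean_minutes, n) from (center_id, passed, minutes) rows."""
    by_center = defaultdict(list)
    for center, passed, minutes in records:
        by_center[center].append((passed, minutes))
    summary = {}
    for center, rows in by_center.items():
        n = len(rows)
        pass_rate = sum(1 for passed, _ in rows if passed) / n
        mean_minutes = sum(m for _, m in rows) / n
        summary[center] = (pass_rate, mean_minutes, n)
    return summary


def flag_centers(records, rate_margin=0.2, time_fraction=0.7, min_n=20):
    """Flag centers whose pass rate is well above AND mean time well below overall."""
    records = list(records)
    overall_rate = sum(1 for _, passed, _ in records if passed) / len(records)
    overall_minutes = sum(m for _, _, m in records) / len(records)
    flagged = []
    for center, (rate, minutes, n) in sorted(center_summary(records).items()):
        # Skip small centers, whose rates are too noisy to interpret
        if n < min_n:
            continue
        if rate >= overall_rate + rate_margin and minutes <= time_fraction * overall_minutes:
            flagged.append(center)
    return flagged


records = (
    [("A", True, 20.0)] * 20                        # everyone passes, quickly
    + [("B", i % 2 == 0, 60.0) for i in range(20)]  # half pass, typical time
    + [("C", i % 2 == 0, 60.0) for i in range(20)]
)
print(flag_centers(records))  # ['A']
```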