Discover how proven healthcare quality management models can improve fairness and reliability in assessments.

I spent the first decade of my career in healthcare, specifically in healthcare quality management. Healthcare is ‘high stakes’: medical errors can have severe consequences. In the world of assessment, we likewise speak of high-stakes assessments, which also carry significant consequences. Developing reliable and valid assessments is therefore crucial, because the quality of an assessment directly affects its effectiveness and fairness. By borrowing concepts from quality management, particularly from fields like healthcare, we can strengthen the processes behind assessment design, delivery, and evaluation.

What is the Donabedian Model and how can it be applied to assessment?

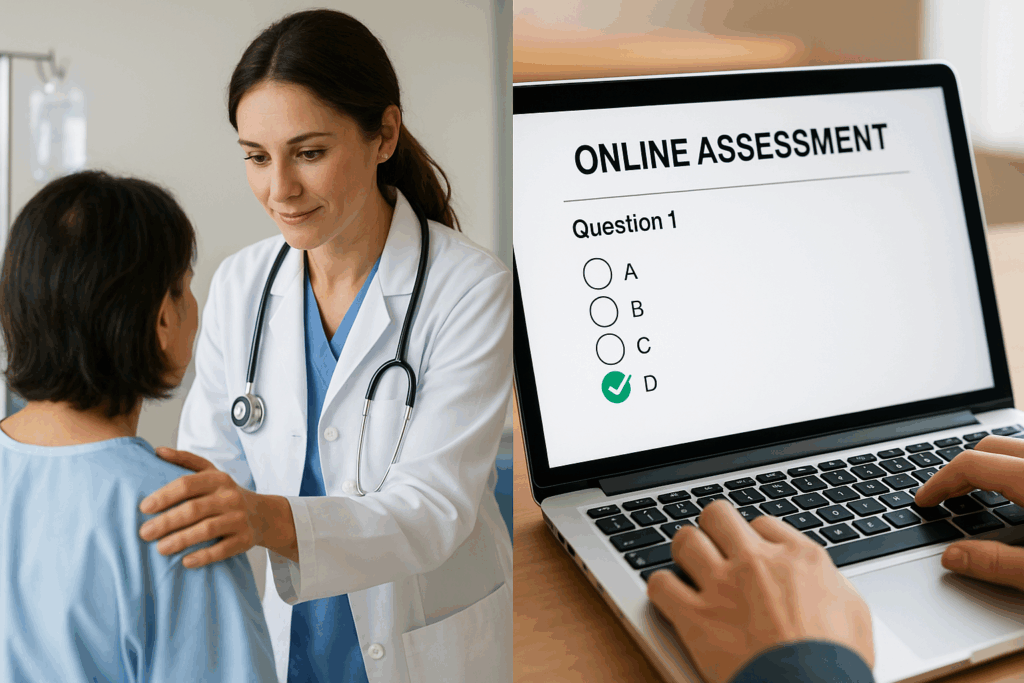

The Donabedian Model, developed by Dr. Avedis Donabedian, is based upon the concept that information about healthcare quality can be drawn from three categories of evidence: the structure of care, the process of care, and the outcome of care.

Application of the Donabedian Model to Assessment

Why It Matters: Using the Donabedian model facilitates a holistic and systematic approach to assessment development. It encourages consideration of the structural components that support the assessment program, the processes involved in creating and administering assessments, and the outcomes the assessments produce. By applying this model, assessment developers can more effectively identify areas of strength and weakness, ensure alignment with intended goals, and continuously improve both the reliability and fairness of the testing experience.

The PDCA cycle: Continuous improvement

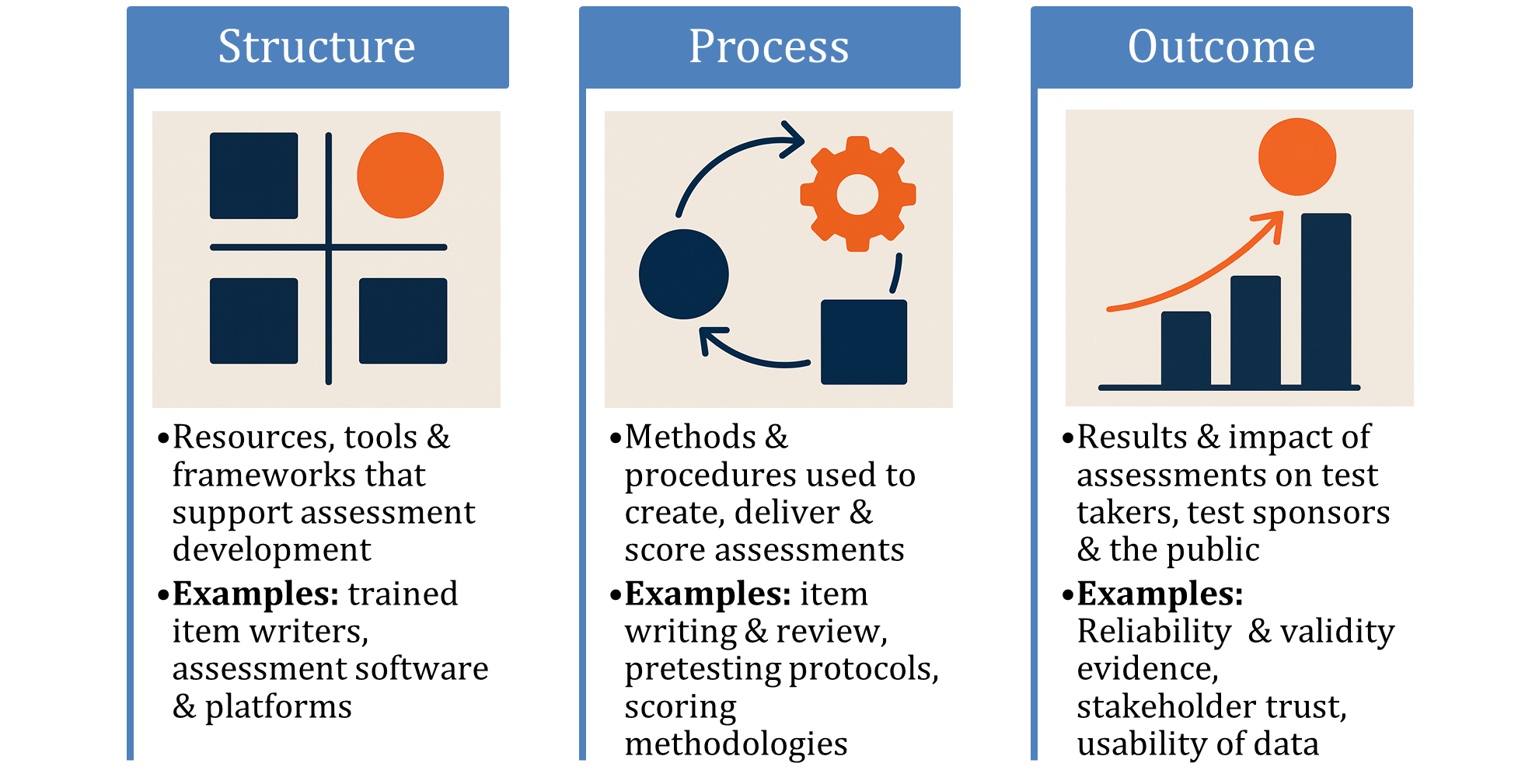

The Plan-Do-Check-Act (PDCA) cycle is an iterative design and management method used for the control and continual improvement of processes and products.

The PDCA Cycle of Assessment Development

Why It Matters: The PDCA Cycle embeds data-informed decision-making into every stage of assessment development, from initial planning and blueprint design through implementation, analysis, and iterative refinement. By systematically planning assessments based on clear learning objectives and stakeholder needs, executing with fidelity, analyzing outcomes through data and feedback, and acting on those insights to make targeted improvements, the PDCA Cycle fosters a culture of continuous improvement. This approach helps assessments remain valid, reliable, and responsive to evolving stakeholder needs and environmental changes.

Statistical process control: Managing variation

Statistical process control (SPC) is a quality control methodology used to monitor, control, and improve processes through statistical analysis. A fundamental concept in SPC is the difference between common cause and special cause variation. Common cause variation refers to natural, inherent fluctuations that are always present in a process and affect all of its outcomes. Special cause variation (also called assignable cause variation) is caused by specific, identifiable events that are not normally part of the process. Special causes require immediate investigation and corrective action.

In assessments, some degree of measurement error is inherent in scores because no test can perfectly capture a student’s true ability, knowledge, or performance. This ever-present measurement error is common cause variation. Special cause variation arises from incidents such as cheating, item miskeys, and disturbances in the testing environment. Simply stated, common cause variation is the expected, random variability in scores, while special cause variation reflects unexpected deviations that point to problems.

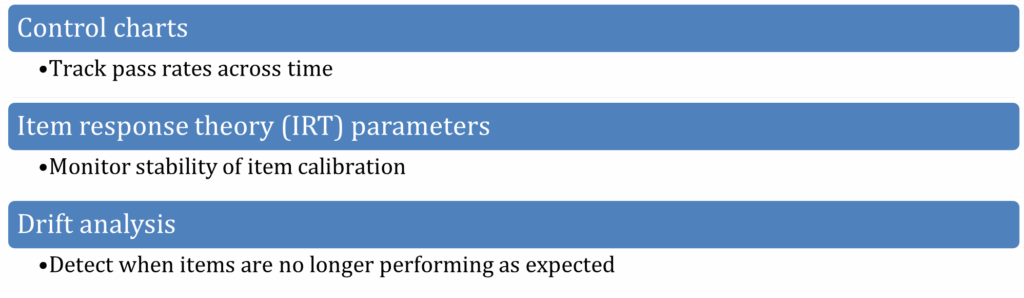

Examples of SPC Tools in Assessments

Why It Matters: SPC allows assessment teams to distinguish between natural score variation and meaningful anomalies that need intervention. This distinction is critical for maintaining the integrity and fairness of assessments. By using SPC concepts, teams can monitor trends in scoring data over time, detect irregularities such as sudden score spikes, drops, or patterns that fall outside of what’s expected, and investigate potential causes like compromised test security, technical issues, or item errors. As a result, both the reliability of score interpretations and the fairness of the assessment process across diverse test-taker populations are enhanced.
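
To make the idea of separating common cause from special cause variation concrete, here is a minimal Python sketch of one common SPC tool, an individuals control chart with three-sigma limits. It is only an illustration under stated assumptions: the function names (`control_limits`, `flag_special_cause`) and the mean scaled scores per testing window are hypothetical, not data or tooling from any real program. Sigma is estimated from the average moving range divided by 1.128, the standard constant for this type of chart.

```python
from statistics import mean


def control_limits(baseline):
    """Return (lower, center, upper) three-sigma limits for an individuals chart."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline points")
    center = mean(baseline)
    # Average absolute difference between consecutive baseline points.
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    # 1.128 is the d2 constant for moving ranges of two observations.
    sigma = mean(moving_ranges) / 1.128
    return center - 3 * sigma, center, center + 3 * sigma


def flag_special_cause(values, lower, upper):
    """Return the indices of points that fall outside the control limits."""
    return [i for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical mean scaled scores per testing window (illustrative only).
baseline_means = [500, 503, 498, 501, 499, 502, 497, 500]
new_means = [501, 499, 518, 500]

lower, center, upper = control_limits(baseline_means)
flagged = flag_special_cause(new_means, lower, upper)
print(f"limits: {lower:.1f} to {upper:.1f}; flagged windows: {flagged}")
# prints: limits: 490.9 to 509.1; flagged windows: [2]
```

In this sketch, windows whose mean stays inside the limits are treated as common cause variation, while the third new window (518) falls above the upper limit and would be a candidate for investigation, for example for a security breach or a scoring error. A flag is a prompt to investigate, not proof that something went wrong.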

Conclusion: A Quality-Centric Approach to Assessment

Integrating Donabedian’s framework, PDCA cycles, and SPC into assessment development transforms a traditionally linear process into a dynamic, quality-driven system. Together, these models introduce a structured yet flexible approach that emphasizes continuous improvement at every stage—from planning and design through administration and evaluation.

Donabedian’s model provides a conceptual lens to examine the assessment infrastructure (structure), development and delivery mechanisms (process), and outcomes (results). The PDCA cycle operationalizes this by embedding real-time, data-informed decision-making. SPC complements both by introducing statistical rigor, enabling teams to monitor performance metrics, detect anomalies, and reduce variation that could compromise test fairness or validity. This integrated approach fosters a culture of quality, ensuring rigorous process control, ongoing feedback loops, sustained validity and reliability of results, and ultimately, greater confidence among stakeholders.

Learn more about how ASC experts can assist your organization with assessment development.