Test Security

How Students Cheat in Online Exams and How to Catch Them

Cheating in exams has always been a major concern, even before the major shift to online proctored exams. According to a study done in 2007, 60.8% of the college students involved admitted to cheating, while a whopping 95% of them were never caught! Heartbreaking, right? Another article also revealed that even renowned institutions such as Harvard and Yale turned out to be fragile when it came to academic integrity. This is just the tip of the iceberg

Proxy Test Takers: What are they, and how do we prevent them?

A proxy test taker is someone who takes a test on behalf of someone else, with the goal of doing better than the original examinee would. This is a critical threat to test security, integrity, and validity.

Addressing Pre-Knowledge in Exam Cheating

In the realm of academic dishonesty and high-stakes exams such as certification, “pre-knowledge” is an important concern for test security and validity. Understanding what pre-knowledge entails and its implications in exam cheating can

Best Online Proctoring Providers: The Alternative List You Need

There are many online proctoring providers on the market today, so how can you strategically shop for one? This post provides some considerations, and a list of most vendors, to help you with the process.

How do I develop a test security plan?

A test security plan (TSP) is a document that lays out how an assessment organization addresses the security of its intellectual property, to protect the validity of the exam scores. If a test is compromised, the

Item Parameter Drift

Item parameter drift (IPD) refers to the phenomenon in which the parameter values of a given test item change over multiple testing occasions within the item response theory (IRT) framework. This phenomenon is often relevant

HR Assessment for Pre-Employment: Approaches and Solutions

HR assessment is a critical part of the HR ecosystem, used to select the best candidates with pre-employment testing, assess training, certify skills, and more. But there is a huge range in quality, as well

AI Remote Proctoring: How To Choose A Solution

AI remote proctoring has seen an incredible increase in usage during the COVID pandemic. ASC works with a very wide range of clients, with a wide range of remote proctoring needs, and therefore we partner

Paper-and-Pencil Testing: Still Around?

Paper-and-pencil testing used to be the only way to deliver assessments at scale. The introduction of computer-based testing (CBT) in the 1980s was a revelation – higher fidelity item types, immediate scoring & feedback, and

Seven Technology Hacks to Deliver Assessments More Securely

So, yeah, the use of “hacks” in the title is definitely on the ironic and gratuitous side, but there is still a point to be made: are you making full use of current technology to

Guttman Errors: Additional Insight into Examinees

Guttman errors are a concept derived from the Guttman Scaling approach to evaluating assessments. There are a number of ways that they can be used. Meijer (1994) suggests an evaluation of Guttman errors as a

Identifying Threats To Test Security

Test security is an increasingly important topic. There are several causes, including globalization, technological enhancements, and the move to a gig-based economy driven by credentials. Any organization that sponsors assessments that have any stakes tied

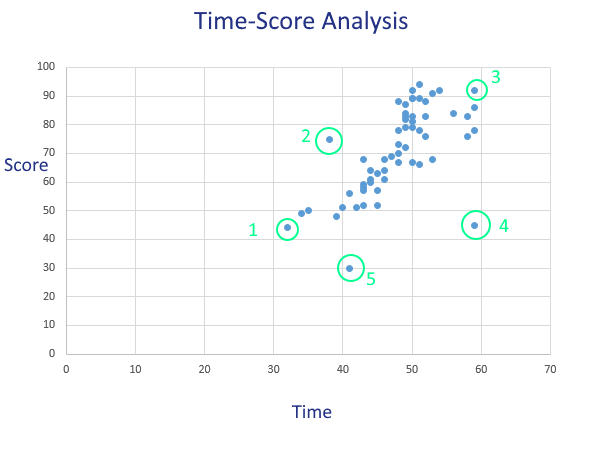

Flag Exam Cheating with Time-Score Analysis

Psychometric forensics is a surprisingly deep and complex field. Many of the indices are incredibly sophisticated, but a good high-level and simple analysis to start with is overall time vs. scores, which I call Time-Score Analysis.
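
To make the idea of comparing overall time against scores concrete, here is a minimal sketch in Python. It is not the method from the post itself: the `Result` record, the `flag_fast_high_scorers` function, and the z-score cutoffs are all hypothetical choices made for illustration. It simply standardizes total time and total score across examinees and flags anyone who finished unusually fast while scoring unusually high.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Result:
    """One examinee's overall result (hypothetical record layout)."""
    examinee_id: str
    minutes: float  # total time spent on the exam
    score: float    # total score


def z_scores(values):
    """Standardize values; return all zeros if there is no variation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def flag_fast_high_scorers(results, time_z_cut=-1.0, score_z_cut=1.0):
    """Return IDs of examinees with very low time AND very high score.

    The cutoffs are illustrative assumptions, not established standards.
    """
    if not results:
        return []
    time_z = z_scores([r.minutes for r in results])
    score_z = z_scores([r.score for r in results])
    return [
        r.examinee_id
        for r, tz, sz in zip(results, time_z, score_z)
        if tz <= time_z_cut and sz >= score_z_cut
    ]


# Made-up data: examinee F finishes in 15 minutes with a score of 95.
sample = [
    Result("A", 60, 70),
    Result("B", 55, 65),
    Result("C", 65, 75),
    Result("D", 58, 68),
    Result("E", 62, 72),
    Result("F", 15, 95),
]
print(flag_fast_high_scorers(sample))  # ['F']
```

A flag from a screen like this is only a prompt for closer review, not evidence of cheating on its own; a well-prepared examinee can legitimately be both fast and accurate.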