IRT Scoring Spreadsheet

Use this free spreadsheet to learn how IRT scoring, also known as ability estimation or theta estimation, works.

Change item parameters or student responses and watch the graphs change in real time.

What is the IRT Scoring Spreadsheet?

Item Response Theory (IRT) is the measurement paradigm used by most high-stakes testing programs around the world. Because it is mathematically and conceptually complex, however, it remains underutilized.

If you are new to IRT, one of the most important concepts to learn is how scoring algorithms work. The IRT Scoring Spreadsheet helps you learn this process interactively:

- Paste in item parameters

- Paste in student responses (or type them one at a time)

- Watch the likelihood functions and theta estimates change in real time!

Best of all, the spreadsheet is available for free: simply fill out the download form to get it.

Our goal at Assessment Systems is to improve assessment by providing powerful, user-friendly software. That goal led to the development of systems like FastTest for item banking and adaptive testing and Xcalibre for IRT calibration. If you would like to apply the many advantages of IRT, check out those platforms or, better yet, contact us.


What are item response theory and IRT scoring?

IRT works by fitting machine learning models, based on logistic regression, that estimate parameters for each item. There are various models to choose from, and they differ in the parameters they produce. Some work better for multiple-choice items, some for rating scale (Likert) items, some for essay questions, and so on.
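For right/wrong (dichotomously scored) items, one widely used model is the three-parameter logistic (3PL) model, shown here as an illustration rather than as the model this spreadsheet necessarily uses. In it, a_i is the item's discrimination, b_i its difficulty, c_i its lower asymptote (pseudo-guessing parameter), and D a scaling constant, often set to 1.702 or 1. The simpler 2PL and 1PL models are special cases; rating scale and essay items use polytomous models, which are not shown.

```latex
\begin{aligned}
\text{3PL:}\quad & P_i(\theta) = c_i + \frac{1 - c_i}{1 + \exp\left[-D\,a_i(\theta - b_i)\right]} \\
\text{2PL:}\quad & c_i = 0 \\
\text{1PL:}\quad & c_i = 0,\; a_i = a \text{ (one slope shared by all items)}
\end{aligned}
```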

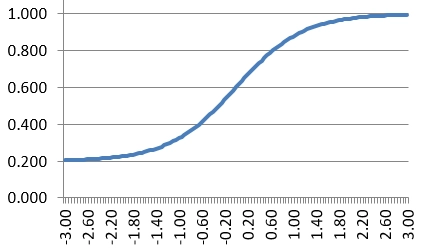

The graph above shows the item response function (IRF), also called the item characteristic curve. You can see its relationship to the well-known logistic function. What does it tell us? Some fairly obvious things, if you look closely. First, note that the x-axis is ability and the y-axis is the probability of answering the question correctly. Low-ability examinees have a low probability, and high-ability examinees have a high probability. This means that the item measures what we want it to measure; in other words, it has discrimination. This particular item also has a lower asymptote, which models the possibility that examinees can guess: on a 4-option multiple-choice item, even the lowest-ability examinees arguably have a 25% chance of answering correctly!
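A minimal Python sketch of an item response function like the one in the graph, assuming the three-parameter logistic (3PL) model with discrimination a, difficulty b, lower asymptote c, and the conventional scaling constant D = 1.702. The page does not say which model the graph uses, so treat the model choice, the function name `irf_3pl`, and the item values below as hypothetical illustrations.

```python
import math


def irf_3pl(theta: float, a: float, b: float, c: float, D: float = 1.702) -> float:
    """Probability of a correct response under the (assumed) 3PL model.

    a: discrimination (slope), b: difficulty (location),
    c: lower asymptote (pseudo-guessing), D: scaling constant.
    """
    return c + (1.0 - c) / (1.0 + math.exp(-D * a * (theta - b)))


if __name__ == "__main__":
    # Hypothetical 4-option multiple-choice item: c = 0.25 models guessing.
    a, b, c = 1.0, 0.0, 0.25
    for theta in (-3.0, -1.0, 0.0, 1.0, 3.0):
        print(f"theta={theta:+.1f}  P(correct)={irf_3pl(theta, a, b, c):.3f}")
```

At θ = b the probability sits exactly halfway between c and 1 (0.625 for this item), and no matter how low θ goes it never falls below c = 0.25, the guessing floor described above.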

IRT also provides even more important advantages over its predecessor, Classical Test Theory (CTT). It handles important tasks such as linking/equating and adaptive testing far more effectively than CTT does. For a free introductory textbook, check out the one by Frank Baker.

How does IRT scoring work?

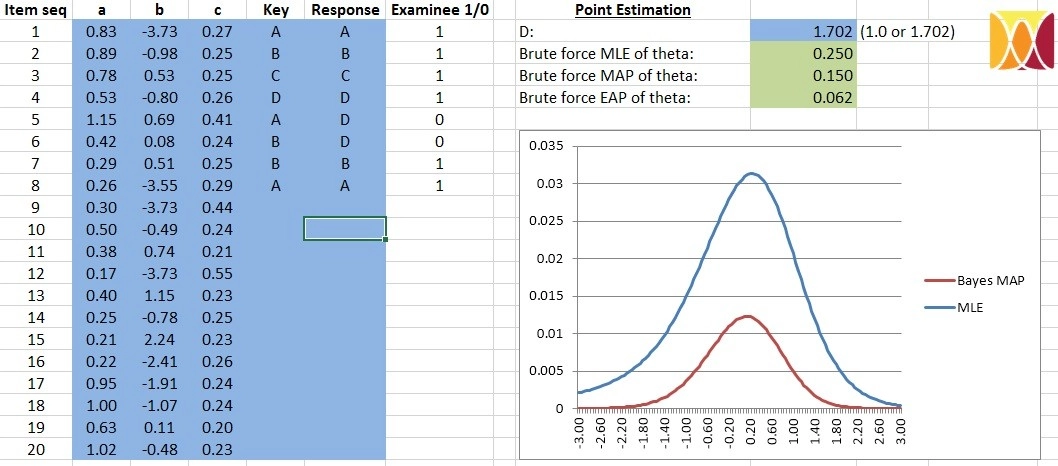

IRT uses these item parameters for many important tasks in assessment, from evaluating item performance to test assembly. One important task is scoring examinees. Instead of producing a score tied to one particular test (such as the number of items answered correctly), IRT scores examinees directly on the latent trait or ability being measured, which psychometricians call theta (θ). The IRT Scoring Spreadsheet provides an interactive way to learn how this process works.

If an examinee answers an item correctly, they “get” the item response function. If they answer it incorrectly, they “get” 1 minus the IRF (take the IRF curve from the previous section and flip it so that it slopes downward). You then multiply these curves together for every item the examinee saw. This produces a bell-shaped curve like the one shown above. The examinee's score is the x-axis (theta) value at the highest point of this curve. Because the product of these probabilities is a likelihood, and we are finding its highest point, this method is called maximum likelihood estimation.
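The scoring steps above can be sketched in Python, again assuming the 3PL model. This is an illustration, not the spreadsheet's actual formulas: the function names, the item parameters, and the theta range of -4 to 4 are hypothetical. Two choices differ from the description in the text. First, the sketch adds up log-probabilities instead of multiplying probabilities; because the logarithm is increasing, the same theta maximizes both, and sums avoid numerical underflow on long tests. Second, it finds the highest point with a simple grid search, whereas operational software typically uses faster iterative methods such as Newton-Raphson.

```python
import math


def irf_3pl(theta, a, b, c, D=1.702):
    # Probability of a correct response under the (assumed) 3PL model.
    return c + (1.0 - c) / (1.0 + math.exp(-D * a * (theta - b)))


def log_likelihood(theta, items, responses):
    """Sum log P for correct answers and log(1 - P) for incorrect ones.

    items: list of (a, b, c) tuples; responses: list of 1 (correct) / 0 (incorrect).
    """
    total = 0.0
    for (a, b, c), u in zip(items, responses):
        p = irf_3pl(theta, a, b, c)
        total += math.log(p) if u == 1 else math.log(1.0 - p)
    return total


def mle_theta(items, responses, lo=-4.0, hi=4.0, step=0.01):
    """Return the grid value of theta where the likelihood is highest."""
    n = int(round((hi - lo) / step))
    grid = [lo + i * step for i in range(n + 1)]
    return max(grid, key=lambda t: log_likelihood(t, items, responses))


if __name__ == "__main__":
    # Hypothetical three-item test: (a, b, c) for each item.
    items = [(1.0, -1.0, 0.2), (1.2, 0.0, 0.2), (0.8, 1.0, 0.25)]
    responses = [1, 1, 0]  # correct, correct, incorrect
    print(f"theta estimate: {mle_theta(items, responses):.2f}")
```

For an all-correct or all-incorrect response pattern the likelihood keeps rising toward the edge of the range, so no maximum likelihood estimate exists. In that case this sketch simply returns the grid boundary (4.0 or -4.0).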

Get in touch with one of our psychometricians to learn more.
