Predictive validity is a type of test score validity that evaluates how well a test predicts a future variable. Strong predictive validity serves as evidence that the test is useful for that purpose.

For instance, it is often used in the world of pre-employment testing, where we want a test to predict things like job performance or tenure, so that a company can hire people that do a good job and stay a long time – a very good result for the company, and worth the investment. It is also important in higher education, where admissions tests predict performance in the university, so the university can select students that are most likely to graduate.

What is test score validity and why is it important?

Validity, in a general sense, is evidence that we have to support intended interpretations of test scores. There are different types of evidence that we can gather to do so. Predictive validity refers to evidence that the test predicts things that it should predict. If we have quantitative data to support such conclusions, it makes the test more defensible and can improve the efficiency of its use. For example, if a university admissions test does a great job of predicting success at university, then universities will want to use it to select students that are more likely to succeed.

Examples of Predictive Validity

Predictive validity evidence can be gathered for a variety of assessment types.

- Pre-employment: Since the entire purpose of a pre-employment test is to positively predict good things like job performance or negatively predict bad things like employee theft or short tenure, a ton of effort goes into developing tests to function in this way, and then documenting that they do.

- University Admissions: Like pre-employment testing, the entire purpose of university admissions exams is predictive. They should positively correlate with good things (first-year GPA, four-year graduation rate) and negatively correlate with bad outcomes like academic probation or dropping out.

- Prep Exams: Preparatory or practice tests are designed to predict performance on their target test. For example, if a prep test is designed to mimic the Scholastic Aptitude Test (SAT), then one way to validate it is to gather the examinees' SAT scores later, after they take the real exam, and correlate them with their prep test scores.

- Certification & Licensure: The primary purpose of credentialing exams is not to predict job performance, but to ensure that the candidate has mastered the material necessary to practice their profession. Therefore, predictive validity is less important than content-related validity evidence, such as blueprints based on a job analysis. However, some credentialing organizations do research on the “value of certification,” linking it to improved job performance, reduced clinical errors, and often external third variables such as greater salary.

- Medical/Psychological: Some assessments are used in clinical situations to anticipate later outcomes, and predictive validity evidence is needed to support that use. For instance, there might be an assessment of knee pain used during initial treatment (physical therapy, injections) whose scores correlate with the later need for surgery. The same assessment might then be used after the surgery to track rehabilitation.

Predictive Validity in Pre-employment Testing

The case of pre-employment testing is perhaps the most common use of this type of validity evidence. A recent meta-analysis (Sackett, Zhang, Berry, & Lievens, 2022) compared the predictive validity of the various types of pre-employment tests and other selection procedures (e.g., structured interviews). This was a modern update to the classic article by Schmidt & Hunter (1998). While in the past the consensus has been that cognitive ability tests provide the best predictive power in the widest range of situations, the new article suggests otherwise. It recommends the use of structured interviews and job knowledge tests, which are more targeted towards the role in question, so it is not surprising that they perform well. This in turn suggests that you should not buy pre-fab ability tests and use them in a shotgun approach with the assumption of validity generalization, but instead leverage an online testing platform like FastTest that allows you to build high-quality exams that are more specific to your organization.

Why do we need predictive validity?

There are a number of reasons that you might need predictive validity evidence for an exam. They almost always involve cases where the test is used to make important decisions about people.

- Smarter decision-making: Predictive validity provides valuable insights for decision-makers. It helps recruiters identify the most suitable candidates, educators tailor their teaching methods to enhance student learning, and universities admit the best students.

- Legal defensibility: If a test is being used for pre-employment purposes, it is legally required in the USA to either show that the test is obviously job-related (e.g., knowledge of Excel for a bookkeeping job) or that you have hard data demonstrating predictive validity. Otherwise, you are open to a lawsuit.

- Financial benefits: Often, the reason for needing improved decisions is financial. It is costly for large companies to recruit and train personnel, so it’s entirely possible that spending $100,000 per year on pre-employment tests could save millions of dollars in the long run.

- Benefits to the examinee: Sometimes, there is directly a benefit to the examinee. This is often the case with medical assessments.

How to implement predictive validity

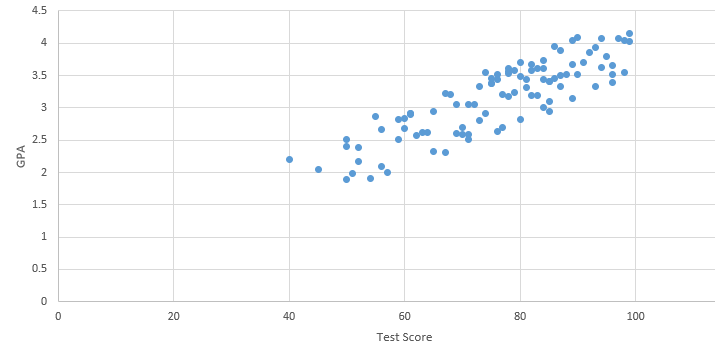

The simplest case is that of regression and correlation. How well does the test score correlate with the criterion variable? The figure shows an oversimplified example of predicting university GPA from scores on an admissions test. Here, the correlation is 0.858 and the regression is GPA = 0.34*SCORE + 0.533. Of course, in real life, you would not see predictive power this strong, as there are many other factors that influence GPA.
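As a minimal sketch of that calculation, the Python below computes a Pearson correlation and a least-squares regression line for a small dataset. The scores and GPAs are made up for illustration and are not the data behind the figure, and the function names `pearson_r` and `fit_line` are just labels chosen here.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def fit_line(x, y):
    """Least-squares slope and intercept for y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical admissions test scores and later GPAs (illustrative only).
scores = [2, 3, 4, 5, 6, 7, 8, 9]
gpas = [1.4, 1.9, 1.7, 2.4, 2.3, 3.1, 3.0, 3.6]

r = pearson_r(scores, gpas)
slope, intercept = fit_line(scores, gpas)
print(f"r = {r:.3f}; GPA = {slope:.3f}*SCORE + {intercept:.3f}")
```

In practice you would likely use a statistics package for this, which also provides significance tests and confidence intervals.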

Advanced Issues

It is usually not a simple situation of two straightforward variables, such as one test and one criterion variable. Often, there are multiple predictor variables (quantitative reasoning test, MS Excel knowledge test, interview, rating of the candidate’s resume), and moreover there are often multiple criterion variables (job performance ratings, job tenure, counterproductive work behavior). When you use multiple predictors and a second or third predictor adds some bit of predictive power over that of the first variable, this is known as incremental validity.
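One common way to quantify incremental validity is the gain in R² when a second predictor is added to a regression that already contains the first. The sketch below assumes exactly two predictors, so that R² can be computed from the three pairwise correlations; the data and variable names are hypothetical.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def r_squared_two_predictors(r_y1, r_y2, r_12):
    """R^2 of a two-predictor linear regression, from pairwise correlations.

    Requires that the two predictors are not perfectly correlated.
    """
    return (r_y1 ** 2 + r_y2 ** 2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12 ** 2)

def incremental_validity(criterion, first, second):
    """Return (R^2 with first predictor, R^2 with both, gain in R^2)."""
    r_y1 = pearson_r(criterion, first)
    r_y2 = pearson_r(criterion, second)
    r_12 = pearson_r(first, second)
    r2_first = r_y1 ** 2
    r2_both = r_squared_two_predictors(r_y1, r_y2, r_12)
    return r2_first, r2_both, r2_both - r2_first

# Hypothetical data: a reasoning test, an interview rating, and a later
# job performance rating for eight hires (illustrative only).
reasoning = [52, 61, 48, 70, 66, 58, 75, 63]
interview = [3, 4, 2, 4, 5, 3, 4, 5]
performance = [2.9, 3.4, 2.5, 3.9, 4.1, 3.0, 4.0, 3.8]

r2_first, r2_both, gain = incremental_validity(performance, reasoning, interview)
print(f"R^2 test only: {r2_first:.3f}, both: {r2_both:.3f}, gain: {gain:.3f}")
```

With more than two predictors, or with multiple criterion variables, a full multiple regression in a statistics package is the more practical route.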

You can also implement more complex machine learning models, such as neural networks or support vector machines, if they fit and you have sufficient sample size.

When performing such validation, you also need to be aware of bias. There can be test bias, where the test being used as a predictor is biased against a subgroup. There can also be predictive bias, where two subgroups have the same performance on the test, but one is overpredicted for the criterion and the other is underpredicted. A related rule of thumb in the USA, used to flag adverse impact when subgroups are selected at substantially different rates, is the four-fifths rule.
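The four-fifths rule compares selection rates: a group is flagged if its selection rate is less than 80% of the rate for the group with the highest rate. Note that it looks at who gets selected rather than at regression lines, so it is a screen for adverse impact and not a full analysis of predictive bias. Here is a minimal sketch with hypothetical applicant counts and group labels.

```python
def selection_rate(selected, applicants):
    """Proportion of applicants in a group who were selected."""
    return selected / applicants

def impact_ratios(rates):
    """Each group's selection rate divided by the highest group's rate."""
    top = max(rates.values())
    return {group: rate / top for group, rate in rates.items()}

def flag_four_fifths(rates, threshold=0.8):
    """True for any group whose impact ratio falls below the threshold."""
    return {group: ratio < threshold
            for group, ratio in impact_ratios(rates).items()}

# Hypothetical counts: 48 of 80 applicants selected in one group,
# 12 of 40 in another (illustrative only).
rates = {
    "group_a": selection_rate(48, 80),
    "group_b": selection_rate(12, 40),
}

ratios = impact_ratios(rates)
flags = flag_four_fifths(rates)
for group in rates:
    print(group, round(rates[group], 2), round(ratios[group], 2), flags[group])
```

A flag here is only a prompt for closer investigation, since small samples can produce ratios below 0.8 by chance.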

Summary

Predictive validity is one type of test score validity, referring to evidence that scores from a certain test can predict their intended target variables. The most common application of it is to pre-employment testing, but it is useful in other situations as well. However, validity is an extremely important and wide-ranging topic, so predictive validity is not the only type of validity evidence that you should gather.

Nathan Thompson earned his PhD in Psychometrics from the University of Minnesota, with a focus on computerized adaptive testing. His undergraduate degree was from Luther College with a triple major of Mathematics, Psychology, and Latin. He is primarily interested in the use of AI and software automation to augment and replace the work done by psychometricians, which has provided extensive experience in software design and programming. Dr. Thompson has published over 100 journal articles and conference presentations, but his favorite remains https://scholarworks.umass.edu/pare/vol16/iss1/1/ .