Assessment In The News

What Should Psychometrics Be Doing?

The Goal: Quality Assessment

On March 31, 2017, I read an article in The Industrial-Organizational Psychologist (the journal published by the Society for Industrial and Organizational Psychology) that really resonated with me: Has Industrial-Organizational Psychology Lost

Assess.ai Passes Audit for FISMA / FedRAMP

ASC is proud to announce that we have passed an audit for FISMA / FedRAMP Moderate, demonstrating the high security standards of our online assessment platform! FISMA and FedRAMP are both security protocols

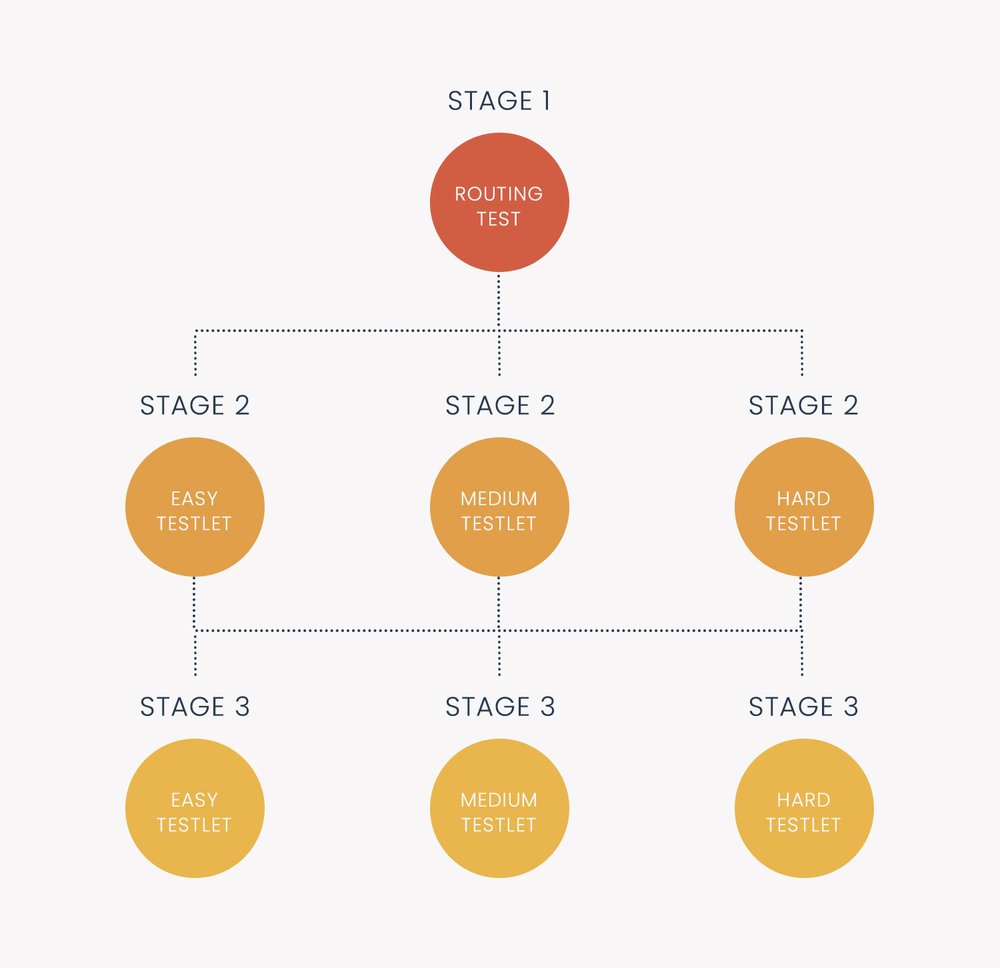

Adaptive Testing SAT: Intro & Free Practice Test

The adaptive SAT (Scholastic Aptitude Test) was announced in January 2022 by the College Board, with the goal of modernizing the test and making it more widely available, migrating the exam from paper-and-pencil to

CalHR Selects Assessment Systems as Vendor for Personnel Selection

The California Department of Human Resources (CalHR, calhr.ca.gov/) has selected Assessment Systems Corporation (ASC, assess.com) as its vendor for an online assessment platform. CalHR is responsible for the personnel selection and hiring of many job roles for

Learn About Tech Innovation With Dr. Nathan Thompson On The EdNorth EdTech Podcast

Nathan Thompson, Ph.D., was recently invited to talk about ASC and the future of educational assessment on the EdNorth EdTech Podcast. EdNorth is an association dedicated to continuing the long history of innovation in educational technology