Test security is an emerging field within the assessment industry. Test fraud is one of the most salient threats to score validity, so methods to combat it are extremely important to all of us. So important, in fact, that an annual conference is devoted to the topic: the Conference on Test Security (COTS).

The 2015 Conference on Test Security

The 2015 edition of the Conference on Test Security was hosted by the Center for Educational Testing and Evaluation at the University of Kansas. Assessment Systems had the privilege of presenting two full sessions: "One Size Does Not Fit All: Making Test Security Configurable and Scalable" and "Let’s Rethink How Technology Can Improve Proctoring." Abstracts for these are below; if you would like to learn more, please contact us. Additionally, we had the opportunity to give a product demonstration of our upcoming data forensics software, SIFT (more on that below).

The Conference on Test Security kicked off with a keynote on the now-famous Atlanta cheating scandal. This scandal was unique in that it was systematic and top-down rather than bottom-up. The follow-up message stressed the difference between assessment and accountability: just because a test is tied to accountability standards does not mean that the test itself is bad, or that all testing is bad.

One of the most commonly presented topics at the conference is data forensics. In fact, the Conference on Test Security used to be called the Conference on Statistical Detection of Test Fraud. But while there has been research on statistical detection of test fraud for more than 50 years, in practice it is a much younger topic and we are still learning a lot. Moreover, there are no good software programs that are publicly available to help organizations implement best practices in data forensics. This is where SIFT comes in.

What is Data Forensics?

In the realm of test security, data forensics refers to analysis of data to find evidence of various types of test fraud. There are a few big types, and the approach to analysis can be quite different. Here are some descriptions, though this is far from a complete treatment of the topic!

Answer-changing: In Atlanta, teachers and administrators would change answers on student bubble sheets after the test was turned in. Detecting this involves quantifying the changes and then analyzing right-to-wrong vs. wrong-to-right changes, among other things. This, of course, is primarily relevant for paper-based tests, but some answer-changing can happen on computer-based tests.
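As a rough illustration of the counting step, here is a minimal Python sketch that classifies each changed answer for one examinee. The function name, the data layout (lists of initial responses, final responses, and the answer key), and the sample data are all assumptions made for this example; real erasure analysis depends on how your scanner or delivery system records changes.

```python
from collections import Counter

def classify_changes(initial, final, key):
    """Count answer changes by type for one examinee (hypothetical helper).

    initial, final: the first and last response recorded for each item.
    key: the correct answer for each item.
    """
    counts = Counter()
    for first, last, correct in zip(initial, final, key):
        if first == last:
            continue  # the answer was not changed
        if first != correct and last == correct:
            counts["wrong_to_right"] += 1
        elif first == correct and last != correct:
            counts["right_to_wrong"] += 1
        else:
            counts["wrong_to_wrong"] += 1
    return counts

# Made-up data for a five-item test
key     = ["A", "C", "B", "D", "A"]
initial = ["B", "C", "D", "D", "C"]
final   = ["A", "C", "B", "A", "B"]
changes = classify_changes(initial, final, key)
print(dict(changes))
```

An unusually high count of wrong-to-right changes, relative to the other two types and to other examinees, is the kind of pattern that would warrant a closer look.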

Preknowledge: If an examinee purchases a copy of the test off the internet from an illegal “brain dump” website, they will get a surprisingly high score while taking less time than expected. This pattern could appear on all items or only a subset.
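One simple way to look for this pattern is to flag examinees whose score is unusually high and whose total time is unusually low compared with the rest of the group. The sketch below does this with z-scores. The function names, the threshold of 1.5, and the sample data are assumptions for illustration only, not an established cutoff.

```python
from statistics import mean, pstdev

def z_scores(values):
    """Standardize values against the group mean and standard deviation."""
    m, s = mean(values), pstdev(values)
    if s == 0:
        return [0.0] * len(values)  # no variation, so nothing stands out
    return [(v - m) / s for v in values]

def flag_preknowledge(ids, scores, times, threshold=1.5):
    """Flag examinees with a high score AND a low total time (hypothetical rule)."""
    score_z = z_scores(scores)
    time_z = z_scores(times)
    return [
        examinee
        for examinee, sz, tz in zip(ids, score_z, time_z)
        if sz > threshold and tz < -threshold
    ]

# Made-up data: percent-correct scores and total minutes
ids    = ["E1", "E2", "E3", "E4", "E5", "E6"]
scores = [60, 62, 58, 61, 59, 95]
times  = [55, 60, 58, 57, 60, 20]
flagged = flag_preknowledge(ids, scores, times)
print(flagged)
```

A flag like this is only a starting point for investigation; a very able examinee can also be both fast and accurate.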

Item harvesting: An examinee is paid to memorize as many items as they can. They might spend 5 minutes each on the first 15 items and not even look at the remainder.
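The pattern described above (a long time on a few items, and the rest never viewed) can be checked with a very simple rule. This sketch assumes you have per-item response times in seconds, with `None` for items that were never viewed; the function name and both cutoffs are hypothetical.

```python
from statistics import median

def looks_like_harvesting(item_times, max_attempted=0.5, min_median_seconds=180):
    """Return True if few items were attempted but each took a long time.

    item_times: seconds spent on each item, or None if it was never viewed.
    The default cutoffs are illustrative assumptions, not recommended values.
    """
    attempted = [t for t in item_times if t is not None and t > 0]
    if not attempted:
        return False
    proportion_attempted = len(attempted) / len(item_times)
    return (proportion_attempted <= max_attempted
            and median(attempted) >= min_median_seconds)

# 5 minutes on each of the first 15 items of a 100-item test, nothing after
harvester = [300] * 15 + [None] * 85
# About 45 seconds on every item
typical = [45] * 100

print(looks_like_harvesting(harvester), looks_like_harvesting(typical))
```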

Collusion: The age-old issue of Student A copying off Student B is collusion, but it can also involve multiple students and even teachers or other people. Statistical analysis looks at unusual patterns in the response data.
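As a very basic example of looking at response patterns, the sketch below counts how many items each pair of examinees answered with the same incorrect option. This is only a descriptive count, not one of the published collusion indices, and the function names and data are made up for illustration.

```python
from itertools import combinations

def matching_incorrect(resp_a, resp_b, key):
    """Count items where both examinees chose the same wrong answer."""
    return sum(1 for a, b, k in zip(resp_a, resp_b, key) if a == b and a != k)

def pairwise_matches(responses, key):
    """Compute the count for every pair of examinees.

    responses: dict mapping examinee ID to a list of responses.
    """
    return {
        (x, y): matching_incorrect(responses[x], responses[y], key)
        for x, y in combinations(sorted(responses), 2)
    }

# Made-up data for a ten-item test
key = list("ABCDABCDAB")
responses = {
    "S1": list("ABCDBCDAAB"),
    "S2": list("ABCDBCDAAB"),  # identical to S1, including four wrong answers
    "S3": list("ABCAABCDCB"),
}
matches = pairwise_matches(responses, key)
print(matches)
```

Formal indices go further by estimating how likely such a match would be by chance, given each examinee's ability and the popularity of each wrong option.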

How can I implement some of this myself?

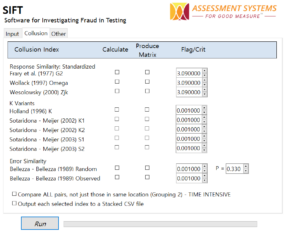

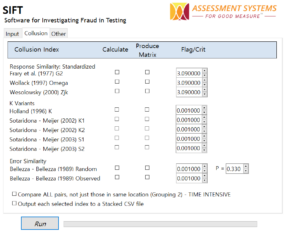

Unfortunately, there is no publicly available software that adequately covers data forensics. Existing software is very limited in the analysis it provides and/or its usability. For example, there are some packages available in the R programming language, but you need to learn to program in R! Therefore, Assessment Systems has developed our own system, called Software for Investigating Test Fraud (SIFT), to meet this market need.

SIFT will provide a wide range of analysis, including a number of collusion indices (see the first six on the left; we will do more!), flagging of possible preknowledge or item harvesting, unusual time patterns, etc. It will also aggregate the analyses up a few levels; for example, flagging test centers or schools that have unusually high numbers of students with unusually high collusion or time pattern flags.
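To show what aggregating flags up a level can look like, here is a minimal Python sketch that computes the proportion of flagged examinees per test center and lists the centers above a cutoff. It is not how SIFT works internally; the function names, the record layout, and the 20% cutoff are assumptions for illustration.

```python
from collections import defaultdict

def flag_rate_by_center(records):
    """Compute the proportion of flagged examinees at each center.

    records: iterable of (center, is_flagged) pairs, one per examinee.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for center, is_flagged in records:
        totals[center] += 1
        if is_flagged:
            flagged[center] += 1
    return {center: flagged[center] / totals[center] for center in totals}

def unusual_centers(records, max_rate=0.2):
    """List centers whose flag rate exceeds a (hypothetical) cutoff."""
    rates = flag_rate_by_center(records)
    return sorted(center for center, rate in rates.items() if rate > max_rate)

# Made-up data: 10 examinees per center
records = (
    [("North", False)] * 9 + [("North", True)]
    + [("South", True)] * 4 + [("South", False)] * 6
)
rates = flag_rate_by_center(records)
centers = unusual_centers(records)
print(rates, centers)
```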

A beta version will be available in December, with a full version available in 2016. If you are interested, please contact us!

Presentation Abstracts

One Size Does Not Fit All: Making Test Security Configurable and Scalable

Development of an organization’s test security plan involves many choices, an important aspect of which is the test development, publishing, and delivery process. Much of this process is now browser-based for many organizations. While there are risks involved with this approach, it provides much more flexibility and control for organizations, plus additional advantages such as immediate republishing. This is especially useful because different programs/tests within an organization might vary widely. It is therefore ideal to have an assessment platform that maximizes the configurability of security.

This presentation will provide a model to evaluate security risks, determine relevant tactics, and design your delivery solution by configuring test publishing/delivery options around these tactics to ensure test integrity. Key configurations include:

- Regular browser vs. lockdown browser

- No proctor, webcam proctor, or live proctor

- Login processes such as student codes, proctor codes, and ID verification

- Delivery approach: linear, LOFT, CAT

- Practical constraints like setting delivery windows, time limits, and allowing review

- Complete event tracking during the exam

- Data forensics within the system.

In addition, we invite attendees to discuss technological approaches they have taken to addressing test security risks, and how they fit into the general model.

Let’s Rethink How Technology Can Improve Proctoring

Technology has revolutionized much of assessment. However, a large proportion of proctoring is still done the same way it was 30 years ago. How can we best leverage technology to improve test security by improving the proctoring of an assessment? Much of this discussion revolves around remote proctoring (RP), but there are other aspects. For example, consider a candidate focusing on memorizing 10 items: can this be better addressed by real-time monitoring of irregular response times with RP than by a single in-person proctor on the other side of the room? Or by LOFT/CAT delivery?

This presentation discusses the security risks and validity threats that proctors are intended to address and how technology might address them instead. Some of the axes of comparison include:

- Confirming ID of examinee

- Provision of instructions

- Confirmation of clean test area with only allowed materials

- Monitoring of examinee actions during test time

- Maintaining standardized test environment

- Protecting test content

- Monitoring irregular time patterns

In addition, we can consider how we can augment the message of deterrence with tactics like data forensics, strong agreements, possibility of immediate test shutdown, and more secure delivery methods like LOFT.
