Credentialing

Certification Management System: Streamline Candidate Credentialing

A Certification Management System (CMS), or Credential Management System, plays a pivotal role in streamlining the key processes surrounding the certification or credentialing of people, that is, attesting that they have certain knowledge or skills in a

What is a Microcredential? How are microcredentials modernizing workforce development?

Microcredentials are short, focused, and targeted educational or assessment-based certificate programs that offer learners a way to acquire specific skills or knowledge in a particular field. In today’s fast-paced and rapidly evolving job market, traditional

Testing and Certification Platform: The Foundation for Credentialing

A testing and certification platform provides an efficient, scalable, and secure way to develop credentialing exams, deliver them, and automate the business side of credentialing. It helps to ensure competency of

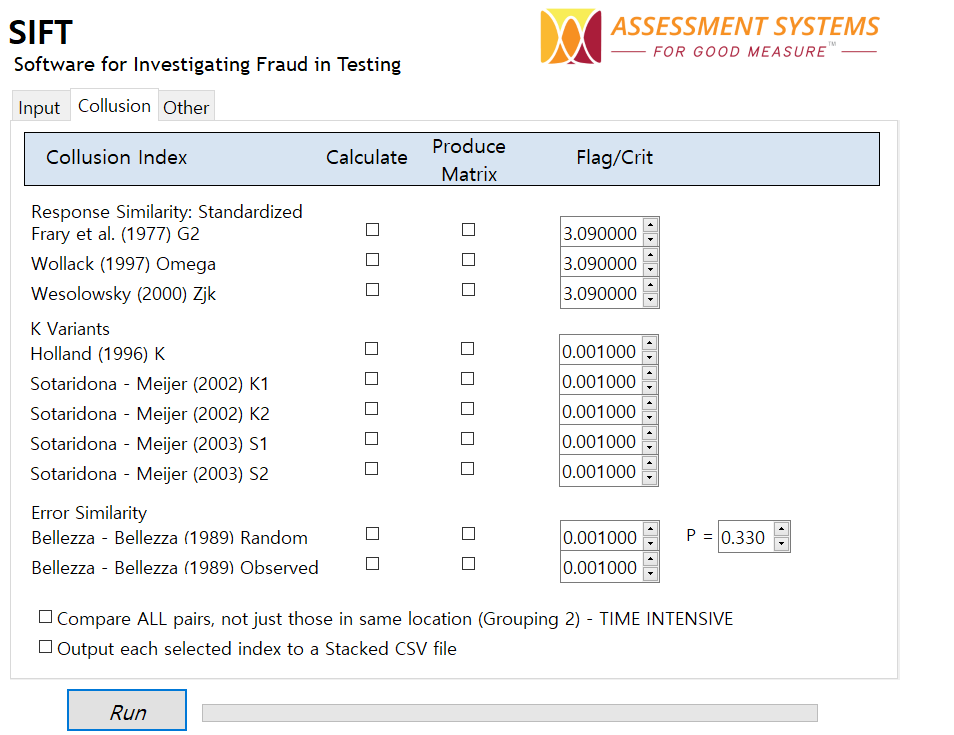

SIFT: A new tool for detection of test fraud

Test fraud is an extremely common occurrence. We’ve all seen articles about examinee cheating. However, there are very few defensible tools to help detect it. I once saw a webinar from an online testing
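
SIFT's own indices are not described in this excerpt, so the sketch below is not SIFT's method. It illustrates one simple collusion statistic often discussed in test-security work: the count of exact errors in common (identical wrong answers) for each pair of examinees. The answer key, responses, and flag threshold are hypothetical, and in practice a raw count would be compared against what chance alone would produce before anyone is flagged.

```python
from itertools import combinations


def exact_errors_in_common(responses, key):
    """Return {(a, b): n} where n counts items both examinees got wrong with the same option."""
    results = {}
    for a, b in combinations(sorted(responses), 2):
        ra, rb = responses[a], responses[b]
        # Count positions where both chose the same option and that option is not the key
        results[(a, b)] = sum(1 for x, y, k in zip(ra, rb, key) if x == y and x != k)
    return results


# Hypothetical 6-item answer key and three examinees
key = "ABCDAB"
responses = {
    "E1": "ABDDCB",
    "E2": "ABDDCB",
    "E3": "ABCDAA",
}

eeic = exact_errors_in_common(responses, key)
# Illustrative threshold only; a real screen would use a statistical null model
flagged = [pair for pair, n in eeic.items() if n >= 2]
print(eeic)
print(flagged)
```

Here E1 and E2 share two identical wrong answers, while neither shares an error with E3.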

What is an Assessment-Based Certificate?

An assessment-based certificate (ABC) is a type of credential that is smaller and more focused than a certification, license, or degree. ABCs serve a distinct role in education and workforce development by providing a formal

Certification vs. Licensure Exams: Differences and Examples

The distinction between certification and licensure concerns the type of organization that runs a credentialing exam (usually a nonprofit versus a government agency) and whether the credential is legally required. These terms are used quite frequently to refer to credentialing examinations

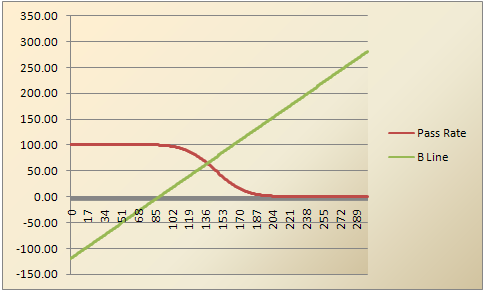

Addressing Pre-Knowledge in Exam Cheating

In the realm of academic dishonesty and high-stakes exams such as certification, pre-knowledge is an important concern for test security and validity. Understanding what pre-knowledge entails and its implications in exam cheating can

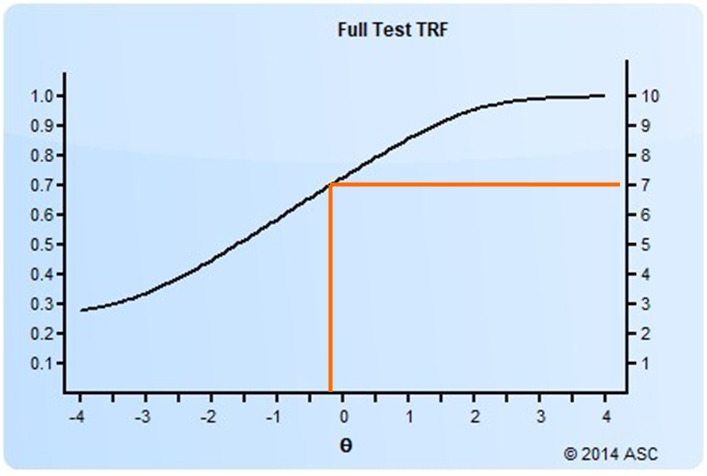

Setting a Cutscore with Item Response Theory

Setting a cutscore on a test scored with item response theory (IRT) requires some psychometric knowledge. This post will get you started. How do I set a cutscore with item response theory? There are two
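
One widely used approach, sketched here under assumptions, is to take a raw cutscore (for example, from a modified-Angoff study) and find the theta at which the test characteristic curve (TCC) equals it. The 3PL model, the scaling constant D = 1.7, the search range, and the item parameters below are illustrative assumptions, not values from any real exam.

```python
import math


def p_3pl(theta, a, b, c, D=1.7):
    """3PL probability of a correct response at ability theta."""
    return c + (1 - c) / (1 + math.exp(-D * a * (theta - b)))


def tcc(theta, items):
    """Test characteristic curve: expected raw score at theta."""
    return sum(p_3pl(theta, *item) for item in items)


def raw_to_theta(raw_cut, items, lo=-4.0, hi=4.0, tol=1e-6):
    """Invert the TCC by bisection; valid because the TCC increases when every a > 0."""
    if not tcc(lo, items) <= raw_cut <= tcc(hi, items):
        raise ValueError("raw cutscore is outside the TCC range on [lo, hi]")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if tcc(mid, items) < raw_cut:
            lo = mid  # expected score too low, so the cut theta is higher
        else:
            hi = mid
    return (lo + hi) / 2


# Hypothetical (a, b, c) parameters for a 4-item test
items = [(1.0, -1.0, 0.2), (1.2, 0.0, 0.2), (0.8, 0.5, 0.25), (1.5, 1.0, 0.2)]
theta_cut = raw_to_theta(2.5, items)
print(round(theta_cut, 3), round(tcc(theta_cut, items), 3))
```

Bisection is used because it needs only monotonicity of the TCC, not derivatives.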

Modified-Angoff Method Study

A modified-Angoff method study is one of the most common ways to set a defensible cutscore on an exam. Using one means that the pass/fail decisions made with the test are more trustworthy than if
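
In a typical Angoff-style study, each rater estimates, for every item, the probability that a minimally competent candidate answers it correctly; the recommended raw cutscore is the sum of the item means across raters. The sketch below shows only that arithmetic for a single round with hypothetical ratings; the discussion rounds and impact data that "modified" procedures often add are not modeled.

```python
from statistics import mean, stdev

# Hypothetical ratings: rows are raters, columns are items. Each value is the rater's
# estimated probability that a minimally competent candidate answers the item correctly.
ratings = [
    [0.70, 0.60, 0.85, 0.50, 0.90],
    [0.65, 0.55, 0.80, 0.60, 0.85],
    [0.75, 0.65, 0.90, 0.55, 0.95],
]


def angoff_cutscore(ratings):
    """Return (raw cutscore, per-item means, per-rater totals)."""
    item_means = [mean(col) for col in zip(*ratings)]  # average over raters for each item
    rater_totals = [sum(row) for row in ratings]       # each rater's implied cutscore
    return sum(item_means), item_means, rater_totals


cut, item_means, rater_totals = angoff_cutscore(ratings)
n_items = len(ratings[0])
print(f"raw cut = {cut:.2f} of {n_items} ({100 * cut / n_items:.0f}%)")
print("rater totals:", [round(t, 2) for t in rater_totals], "SD:", round(stdev(rater_totals), 3))
```

The spread of rater totals is a quick check on panel agreement before the cutscore is adopted.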

What is Test Scaling?

Scaling is a psychometric term regarding the establishment of a score metric for a test, and it often has two meanings. First, it involves defining the method used to operationally score the test, establishing an underlying
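
Reported scaled scores are often a linear transformation of the underlying metric. As a hypothetical illustration, the function below maps IRT theta to a reporting scale with the cutscore fixed at 500, 100 scaled points per theta unit, and reports clipped to the range 200 to 800; none of these constants comes from the original article.

```python
def make_linear_scale(theta_cut, scaled_cut, scale_per_theta, lo, hi):
    """Return a function mapping theta to an integer reported score on a hypothetical scale."""
    def to_scaled(theta):
        # Anchor the cutscore at scaled_cut, then stretch by scale_per_theta
        raw = scaled_cut + scale_per_theta * (theta - theta_cut)
        # Clip to the reporting range, then round (Python rounds exact halves to even)
        return int(round(min(max(raw, lo), hi)))
    return to_scaled


to_scaled = make_linear_scale(theta_cut=-0.25, scaled_cut=500, scale_per_theta=100, lo=200, hi=800)
for theta in (-3.5, -0.25, 0.0, 1.3):
    print(theta, to_scaled(theta))
```

Anchoring the cutscore to a fixed scaled value means candidates see the same passing score across forms, even when the raw cutscore differs between forms.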

How to develop and roll out a Certification Test

Certification test development refers to the process of building an exam in accordance with accreditation standards such as NCCA, then delivering it securely. It is a critical business need for credentialing organizations and awarding bodies. As

Ace Your Exam: Strategies for Test Preparation

Test preparation for a high-stakes exam can be a daunting task, because so much rides on the result: degrees, certifications, and other significant achievements can accelerate your career and present new opportunities to learn in your chosen field of study.

What are Stackable Credentials?

Stackable credentials refer to the practice of accumulating a series of credentials such as certifications or microcredentials over time. In today’s rapidly evolving job market, staying ahead of the curve is crucial for career success. Employers

Digital Badges for Skills and Credentials

Digital badges (aka e-badges) have emerged in today’s digitalized world as a powerful tool for recognizing and showcasing individuals’ accomplishments in an online format that is more comprehensive, immediate, brandable, and easily verifiable compared to

ANSI ISO/IEC 17024 Accreditation for Certifications

ISO/IEC 17024 is an internationally recognized standard for personnel certification bodies, and ANSI accreditation against it is a recognized mark of compliance. That is, it is a stamp of approval from an independent audit saying that your certification is of good quality.

Ebel Method of Standard Setting

The Ebel method of standard setting is a psychometric approach to establishing a cutscore for tests consisting of multiple-choice questions. It is usually used for high-stakes examinations in the fields of higher education, medical and health
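
As the Ebel method is commonly described, panelists classify each item by relevance and difficulty, agree on the expected proportion correct for a borderline candidate in each cell, and the cutscore is the sum over cells of item count times expected proportion. The sketch below does that arithmetic. The 3×3 grid is a simplification (descriptions of the method often use four relevance levels), and every count and proportion is hypothetical.

```python
# Hypothetical Ebel grid: (relevance, difficulty) -> number of items classified there
item_counts = {
    ("essential", "easy"): 10, ("essential", "medium"): 8, ("essential", "hard"): 2,
    ("important", "easy"): 6, ("important", "medium"): 8, ("important", "hard"): 4,
    ("acceptable", "easy"): 4, ("acceptable", "medium"): 5, ("acceptable", "hard"): 3,
}

# Panel's agreed expected proportion correct for a borderline candidate in each cell
expected_p = {
    ("essential", "easy"): 0.95, ("essential", "medium"): 0.80, ("essential", "hard"): 0.60,
    ("important", "easy"): 0.85, ("important", "medium"): 0.65, ("important", "hard"): 0.45,
    ("acceptable", "easy"): 0.70, ("acceptable", "medium"): 0.50, ("acceptable", "hard"): 0.30,
}


def ebel_cutscore(item_counts, expected_p):
    """Expected raw score of a borderline candidate: sum of count * proportion per cell."""
    return sum(n * expected_p[cell] for cell, n in item_counts.items())


cut = ebel_cutscore(item_counts, expected_p)
total_items = sum(item_counts.values())
print(f"cutscore = {cut:.1f} of {total_items} items ({100 * cut / total_items:.1f}%)")
```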

What is a Cutscore or Passing Point?

A cutscore or passing point (also called a cut-off score or cutoff score) is a score on a test that is used to categorize examinees. The most common example of this is pass/fail, which we
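
Applying one or more cutscores is a simple lookup. The sketch below assumes the common convention that a score exactly at a cutscore falls into the higher category; the category labels and cut values are hypothetical.

```python
from bisect import bisect_right


def classify(score, cuts, labels):
    """Map a score to a category; a score equal to a cut falls in the higher category."""
    if len(labels) != len(cuts) + 1:
        raise ValueError("need exactly one more label than cutscores")
    if list(cuts) != sorted(cuts):
        raise ValueError("cutscores must be in ascending order")
    # bisect_right counts how many cuts are <= score
    return labels[bisect_right(cuts, score)]


# Pass/fail with a single hypothetical cutscore of 70
print(classify(69, [70], ["fail", "pass"]))
print(classify(70, [70], ["fail", "pass"]))

# Several hypothetical cutscores defining four performance levels
levels = ["below basic", "basic", "proficient", "advanced"]
print(classify(75, [60, 75, 90], levels))
```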

Nedelsky Method of Standard Setting

The Nedelsky method is an approach to setting the cutscore of an exam. Originally suggested by Nedelsky (1954), it was an early attempt to apply a quantitative, rigorous procedure to standard setting.
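
In the Nedelsky procedure as usually described, a judge marks the options of each multiple-choice item that a minimally competent candidate could rule out; the candidate is assumed to guess among the rest, so the item's expected score is 1 divided by the number of remaining options (including the key). Summing over items gives a judge's cutscore, and judges are then averaged. The judgments below are hypothetical, and `Fraction` is used only to keep the arithmetic exact.

```python
from fractions import Fraction

# Hypothetical judgments: for each judge and item, the number of options (including the key)
# that a minimally competent candidate could NOT eliminate.
remaining = {
    "judge_1": [2, 3, 1, 4, 2],
    "judge_2": [2, 2, 1, 3, 2],
}


def nedelsky_cutscore(remaining):
    """Return (panel cutscore, per-judge cutscores) as exact fractions."""
    # Each item contributes 1/k: a random guess among k remaining options
    judge_cuts = {j: sum(Fraction(1, k) for k in counts) for j, counts in remaining.items()}
    overall = sum(judge_cuts.values()) / len(judge_cuts)
    return overall, judge_cuts


overall, judge_cuts = nedelsky_cutscore(remaining)
print({j: float(c) for j, c in judge_cuts.items()})
print(f"panel cutscore = {float(overall):.3f} of {len(remaining['judge_1'])} items")
```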