Education

Assessment Development: Using Quality Management Models

Discover how proven healthcare quality management models can improve fairness and reliability in assessments. I spent the first decade of my career in healthcare, specifically in healthcare quality management. Healthcare is ‘high stakes’; medical errors …

AI in Teaching & Learning 2025: Personalized Learning, Smarter Tests, Stronger Guardrails

Why “AI in Education” Matters in 2025. AI in education has moved from experimentation to daily application. Whether you’re a school administrator, instructional designer, or classroom teacher, artificial intelligence offers three transformative benefits: time-saving automation, …

Diagnostic Assessment: The Key to Smarter Learning Strategies

Imagine you are a coach preparing your team for the championship. Would you train them all the same way, or would you assess their strengths and weaknesses first? Just like in sports, effective education starts …

What Are Cognitive Diagnostic Models? (Spanish edition)

Cognitive diagnostic models (CDMs) are a psychometric framework designed to improve how tests are structured and scored. Instead …

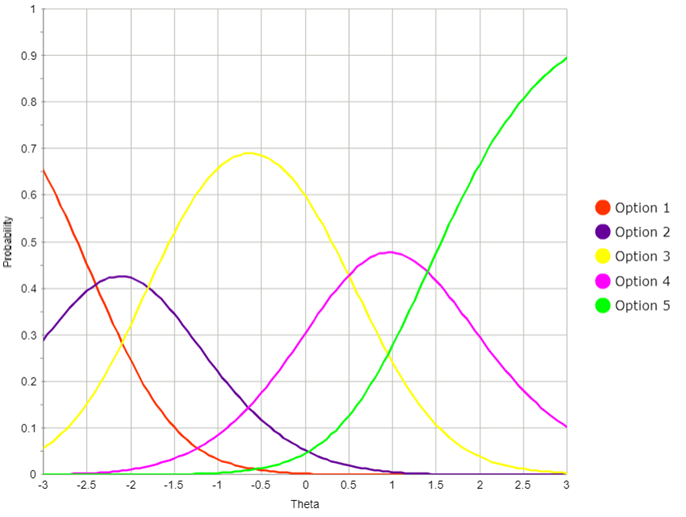

What are Cognitive Diagnostic Models?

Cognitive diagnostic models (CDMs) are a psychometric framework designed to enhance how tests are structured and scored. Instead of providing just an overall test score, CDMs aim to generate a …
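The excerpt stops before naming a specific model, so as a rough illustration only, here is a sketch of one well-known CDM, the DINA ("deterministic inputs, noisy and gate") model, chosen for this example. The Q-matrix, slip, and guess values are hypothetical. It shows the key idea: instead of a single total score, the model yields a probability for each pattern of attribute mastery.

```python
from itertools import product

# Hypothetical Q-matrix: rows are items, columns are attributes (1 = item requires it).
Q = [
    [1, 0],  # item 1 requires attribute A only
    [0, 1],  # item 2 requires attribute B only
    [1, 1],  # item 3 requires both A and B
    [1, 0],  # item 4 requires attribute A only
]
# Hypothetical item parameters: slip = P(wrong | has all required attributes),
# guess = P(right | lacks at least one required attribute).
SLIP = [0.1, 0.1, 0.15, 0.1]
GUESS = [0.2, 0.2, 0.1, 0.2]


def p_correct(profile, item):
    """DINA rule: eta = 1 only if the profile includes every attribute the item requires."""
    eta = all(a >= q for a, q in zip(profile, Q[item]))
    return 1 - SLIP[item] if eta else GUESS[item]


def posterior(responses):
    """Posterior probability of every 2^K mastery profile, assuming a uniform prior."""
    n_attr = len(Q[0])
    profiles = list(product([0, 1], repeat=n_attr))
    likelihoods = []
    for profile in profiles:
        like = 1.0
        for item, x in enumerate(responses):
            p = p_correct(profile, item)
            like *= p if x == 1 else 1 - p
        likelihoods.append(like)
    total = sum(likelihoods)
    return {prof: like / total for prof, like in zip(profiles, likelihoods)}


# Examinee answers the A-only items correctly and misses the items needing B.
post = posterior([1, 0, 0, 1])
best = max(post, key=post.get)
print(best, round(post[best], 3))  # (1, 0) 0.929
```

The output is a diagnostic statement ("has mastered A, not B") with a probability attached, which is the kind of result a plain total score cannot give.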

What Is a Rubric? (Spanish edition)

A rubric is a set of rules that converts unstructured responses on assessments (such as essays) into structured data that can be analyzed psychometrically. It helps educators evaluate work …

What is a rubric?

A rubric is a set of rules for converting unstructured responses on assessments, such as essays, into structured data that can be analyzed psychometrically. It helps educators evaluate qualitative work consistently and fairly.
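A minimal sketch of an analytic rubric as a data structure, assuming a hypothetical three-criterion essay rubric with made-up levels and point values. It shows the conversion described above: a rater's qualitative level choices become a structured record of numeric scores that can be analyzed like any other item data.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Criterion:
    name: str
    levels: Dict[str, int]  # level descriptor -> points awarded


# Hypothetical analytic rubric; criteria, descriptors, and points are illustrative only.
RUBRIC = [
    Criterion("thesis", {"missing": 0, "unclear": 1, "clear": 2}),
    Criterion("evidence", {"none": 0, "some": 1, "strong": 2, "exemplary": 3}),
    Criterion("mechanics", {"many errors": 0, "few errors": 1, "no errors": 2}),
]


def score_response(ratings):
    """Turn one rater's level choices into a structured score record."""
    record = {}
    for criterion in RUBRIC:
        level = ratings[criterion.name]
        if level not in criterion.levels:
            # Reject descriptors the rubric does not define, so data stay consistent.
            raise ValueError(f"Unknown level {level!r} for {criterion.name}")
        record[criterion.name] = criterion.levels[level]
    record["total"] = sum(record[c.name] for c in RUBRIC)
    return record


print(score_response({"thesis": "clear", "evidence": "some", "mechanics": "few errors"}))
# {'thesis': 2, 'evidence': 1, 'mechanics': 1, 'total': 4}
```

Keeping each criterion as its own field, rather than only storing the total, is what allows later psychometric analysis such as inter-rater agreement per criterion.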

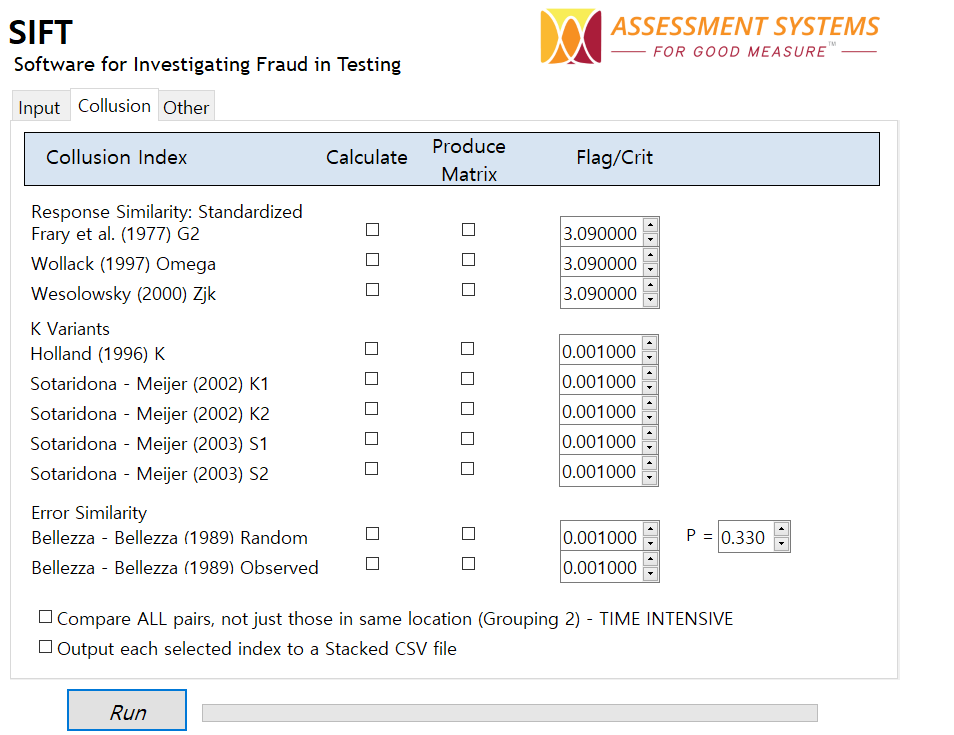

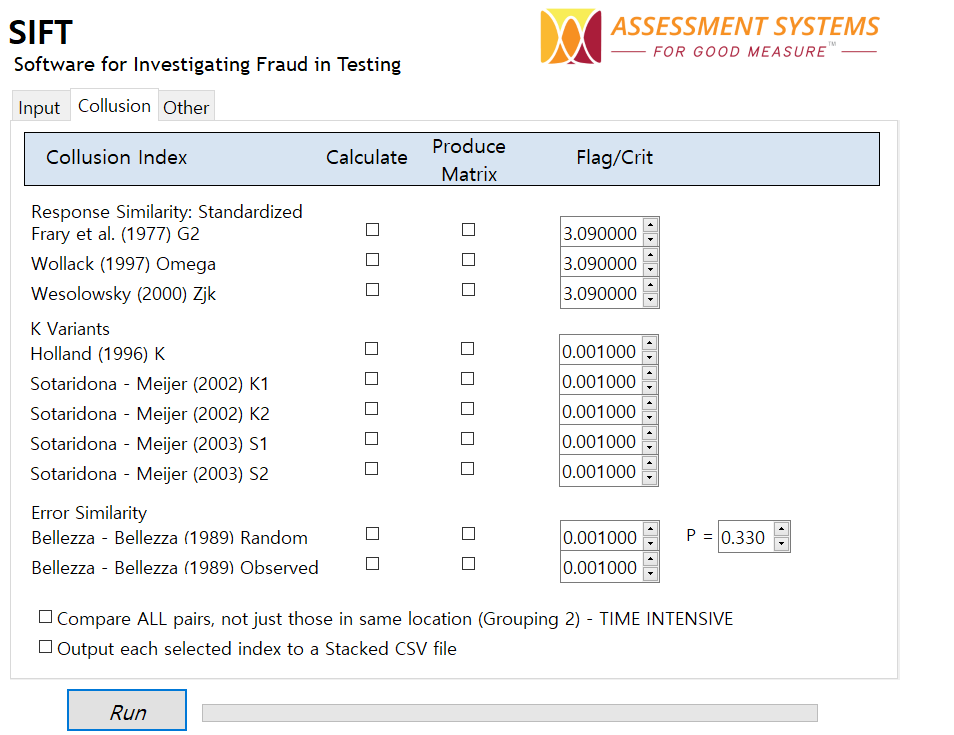

SIFT: A New Tool for Detecting Test Fraud (Spanish edition)

Test fraud is an extremely common phenomenon. We have all seen articles about cheating on exams. However, there are very few defensible tools to help detect it. I once saw a webinar …

SIFT: A new tool for detection of test fraud

Test fraud is an extremely common occurrence. We’ve all seen articles about examinee cheating. However, there are very few defensible tools to help detect it. I once saw a webinar from an online testing …
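The excerpt does not describe how SIFT computes its indices, so the following is not SIFT's method. It is a minimal sketch of one simple collusion signal: counting, for every pair of examinees, the items on which both chose the same incorrect option. The answer key, response strings, and flagging threshold are all hypothetical.

```python
from itertools import combinations


def errors_in_common(resp_a, resp_b, key):
    """Count items where both examinees chose the same incorrect option."""
    return sum(1 for a, b, k in zip(resp_a, resp_b, key) if a == b and a != k)


def flag_pairs(responses, key, threshold):
    """Return (id_a, id_b, count) for pairs whose shared-error count meets the threshold."""
    flagged = []
    for (id_a, ra), (id_b, rb) in combinations(responses.items(), 2):
        eic = errors_in_common(ra, rb, key)
        if eic >= threshold:
            flagged.append((id_a, id_b, eic))
    return flagged


# Hypothetical 10-item multiple-choice key and responses.
KEY = "ABCDABCDAB"
responses = {
    "e1": "ABCDABCDAB",
    "e2": "ACCBABDDCB",
    "e3": "ACCBABDDCA",
    "e4": "BBCDACCDAB",
}
print(flag_pairs(responses, KEY, threshold=3))  # [('e2', 'e3', 4)]
```

A raw count like this ignores how popular each wrong option is, so defensible collusion indices instead compare observed agreement with the agreement expected by chance.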

Digital Literacy Assessment and its Role in Modern Education

Digital literacy assessments are a critical part of modern educational and workforce development initiatives. In today’s fast-paced, technology-driven world, digital literacy is essential in one’s education, occupation, and daily life. Defined …

Speeded Test vs Power Test

The distinction between speeded and power tests is one way of differentiating psychometric or educational assessments. In educational measurement, depending on the assessment goals and time constraints, tests are …
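One practical way to see the difference in real data is to check how many examinees actually reach the end of the test. A minimal sketch, assuming a hypothetical coding of 1 = correct, 0 = incorrect, and None = not reached; the 75% cutoff is an illustrative parameter, not a standard taken from the article.

```python
def items_reached(responses):
    """Number of items reached: position of the last non-missing response.

    A None before the last answered item is treated as an omit, not as not-reached.
    """
    for i in range(len(responses), 0, -1):
        if responses[i - 1] is not None:
            return i
    return 0


def speededness_summary(data, n_items, partial_fraction=0.75):
    """Share of examinees reaching the last item and reaching a given fraction of items."""
    reached = [items_reached(r) for r in data]
    n = len(data)
    return {
        "pct_reached_last": sum(r == n_items for r in reached) / n,
        "pct_reached_75": sum(r >= partial_fraction * n_items for r in reached) / n,
    }


# Hypothetical responses for five examinees on an 8-item test.
data = [
    [1, 0, 1, 1, 0, 1, 1, 1],
    [1, 1, 1, 0, 1, 1, None, None],
    [0, 1, 1, 1, 1, 0, 1, 0],
    [1, 1, 0, 1, 1, 1, 1, None],
    [1, 0, 1, 1, 1, 1, 1, 1],
]
summary = speededness_summary(data, n_items=8)
print(summary)  # {'pct_reached_last': 0.6, 'pct_reached_75': 1.0}
```

On a pure power test nearly everyone should finish, so a low share reaching the last item suggests that working speed, not only ability, is influencing scores.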

Learning Management System (LMS): The Key to Online Learning and Training

A Learning Management System (LMS) is a software platform essential for delivering structured learning material in today’s digital-first world. Its purpose is to deliver educational content to learners for any organization …

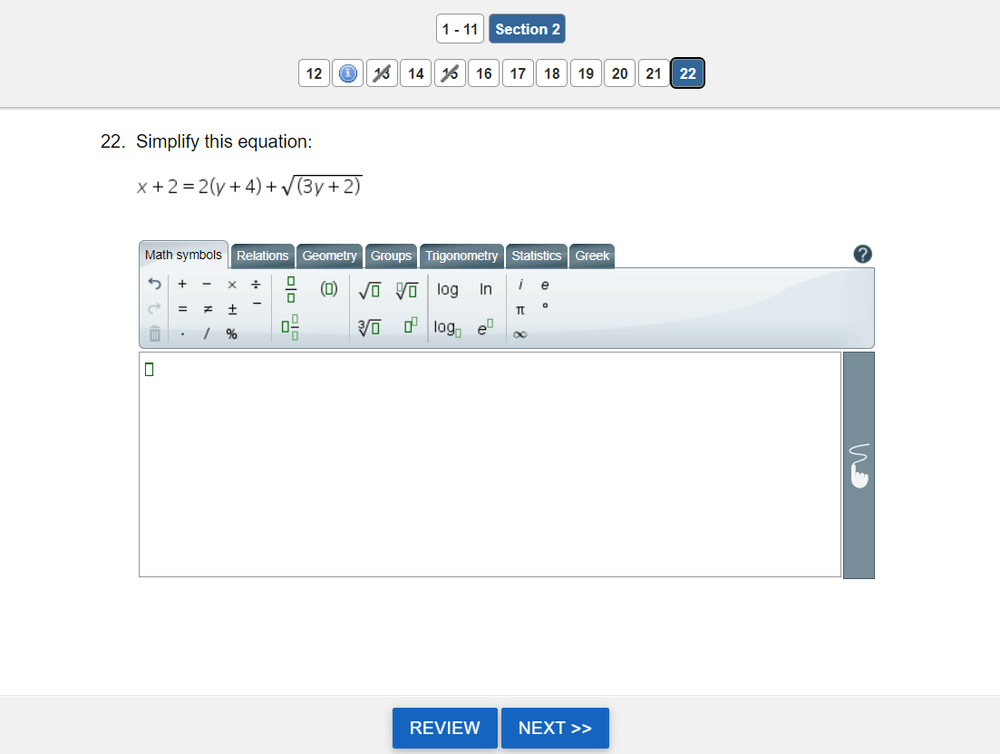

What are technology-enhanced items?

Technology-enhanced items are assessment items (questions) that use technology to improve how examinees interact with a question in a digital assessment, beyond what is possible on paper. Tech-enhanced items can improve examinee engagement (important …

Why PARCC EBSR Items Provide Bad Data

The Partnership for Assessment of Readiness for College and Careers (PARCC) is a consortium of US states working together to develop educational assessments aligned with the Common Core State Standards. This is a daunting task, …

What is Test Scaling?

Scaling is a psychometric term for establishing the score metric of a test, and it often has two meanings. First, it involves defining the method for operationally scoring the test, establishing an underlying …
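A common way to set up a reporting metric is a linear transformation of raw scores. The sketch below assumes a hypothetical target scale with mean 500, standard deviation 100, and reported bounds of 200 to 800; the raw scores are made up. Operational programs may instead scale from IRT ability estimates or use nonlinear conversions, so treat this only as an illustration of the idea.

```python
from statistics import mean, pstdev


def linear_scaling(raw_scores, target_mean=500.0, target_sd=100.0, lo=200, hi=800):
    """Build a raw-to-scale conversion so the reference group gets the target mean and SD.

    All target values and bounds here are hypothetical choices for illustration.
    """
    m, s = mean(raw_scores), pstdev(raw_scores)
    slope = target_sd / s
    intercept = target_mean - slope * m

    def to_scale(raw):
        scaled = round(slope * raw + intercept)
        return max(lo, min(hi, scaled))  # clamp to the reported score range

    return to_scale


raw = [12, 15, 18, 20, 22, 25, 28]  # hypothetical reference-group raw scores
to_scale = linear_scaling(raw)
print([to_scale(x) for x in raw])  # [345, 403, 461, 500, 539, 597, 655]
```

Because the transformation is linear, it preserves the rank order and relative spacing of raw scores; it only changes the numbers reported to examinees.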

Ace Your Exam: Strategies for Test Preparation

Preparing for a high-stakes exam can be a daunting task, because so much rides on it: degrees, certifications, and other significant achievements can accelerate your career and present new opportunities to learn in your chosen field of study.

What is Automated Essay Scoring?

Automated essay scoring (AES) is an important application of machine learning and artificial intelligence to psychometrics and assessment. In fact, it has been around far longer than “machine learning” and “artificial intelligence” have …

Artificial Intelligence (AI) in Education & Assessment: Opportunities and Best Practices

Artificial intelligence (AI) is poised to address some of the challenges education faces today by innovating teaching and learning processes. By applying AI in educational technologies, educators can determine student needs more precisely, keep …