Test security is an increasingly important topic, for several reasons, including globalization, technological advances, and the move toward a gig-based economy driven by credentials. Any organization that sponsors an assessment with stakes attached must be concerned with security, because the greater the stakes, the greater the incentive to cheat. Threats to test security are also threats to validity, and therefore to the very reason the assessment exists.

The core of this protection is a test security plan, which is discussed elsewhere. The first phase of building that plan is an evaluation of your current situation, and here I present a suggested five-step model for doing so.

1. Identify threats to test security that are relevant to your program.

2. Evaluate the possible frequency and impact of each threat.

3. Determine relevant deterrents or preventative measures for each threat.

4. Identify data forensics that might detect issues.

5. Have a plan for how to deal with issues, like a candidate found cheating.

OK, Explain These Five Steps More Deeply

1. Identify threats to test security that are relevant to your program.

Some of the most commonly encountered threats are listed below. Determine which ones might be relevant to your program, and brainstorm additional threats if necessary. If your organization has multiple programs, this list can differ between them.

- Brain dump makers (content theft)

- Brain dump takers (pre-knowledge)

- Examinee copying/collusion

- Outside help at an individual level (e.g., parent or friend via wireless audio)

- Outside help at a group level (e.g., teacher providing answers to class)

2. Evaluate the possible frequency and impact of each threat.

Create a table with three columns. The first lists the threats; the other two are Frequency and Impact, which you rate on a scale such as 1 to 5. See the examples below. Again, if your organization has multiple assessments, these ratings can vary substantially among them: brain dumps might be a big problem for one program but not another. I recommend multiplying or summing the two ratings into a single index, which you might call criticality.
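The rating-and-ranking step can be sketched in a few lines of Python. The threat names come from Step 1, but the frequency and impact ratings are hypothetical placeholders for an imaginary program, and multiplication is only one of the two combination rules mentioned above.

```python
from dataclasses import dataclass


@dataclass
class Threat:
    name: str
    frequency: int  # 1 (rare) to 5 (very common)
    impact: int     # 1 (minor) to 5 (severe)

    @property
    def criticality(self) -> int:
        # Multiply the two ratings; summing them is the other option.
        return self.frequency * self.impact


def rank_threats(threats: list[Threat]) -> list[Threat]:
    """Return threats sorted from most to least critical (ties keep input order)."""
    return sorted(threats, key=lambda t: t.criticality, reverse=True)


# Hypothetical ratings for a single imaginary program.
threats = [
    Threat("Brain dump makers (content theft)", frequency=4, impact=5),
    Threat("Brain dump takers (pre-knowledge)", frequency=4, impact=4),
    Threat("Examinee copying/collusion", frequency=2, impact=3),
    Threat("Outside help, individual", frequency=2, impact=2),
    Threat("Outside help, group", frequency=1, impact=4),
]

for t in rank_threats(threats):
    print(f"{t.criticality:>2}  {t.name}")
```

Sorting by the combined index gives you the order in which to tackle Step 3, much as a job analysis ranks tasks.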

3. Determine relevant proactive measures for each threat.

Start with the most critical threats. Brainstorm policies or actions that could deter each threat, mitigate its effects, or prevent it outright. Consider a cost/benefit analysis for implementing each, then decide, in priority order, which ones to put in place.
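One way to make the cost/benefit step concrete is sketched below. The measures are taken from the examples later in this post, but the cost and benefit ratings, the criticality values, and the "keep it if benefit exceeds cost" rule are all hypothetical assumptions; your own analysis may weigh things quite differently.

```python
from dataclasses import dataclass


@dataclass
class Measure:
    name: str
    threat: str
    cost: float     # hypothetical relative cost, e.g. 1-10
    benefit: float  # hypothetical relative benefit, e.g. 1-10


def prioritize(measures, criticality):
    """Keep measures whose estimated benefit exceeds their cost, ordered first
    by the criticality of the threat they address, then by benefit/cost ratio."""
    worthwhile = [m for m in measures if m.benefit > m.cost]
    return sorted(
        worthwhile,
        key=lambda m: (criticality[m.threat], m.benefit / m.cost),
        reverse=True,
    )


# Hypothetical criticality indices from Step 2 and hypothetical ratings.
criticality = {"Content theft": 20, "Pre-knowledge": 16, "Proxy testers": 6}
measures = [
    Measure("In-person proctoring", "Content theft", cost=8, benefit=9),
    Measure("Lockdown browser", "Content theft", cost=2, benefit=6),
    Measure("DMCA takedown notices", "Pre-knowledge", cost=3, benefit=5),
    Measure("Trojan Horse items", "Pre-knowledge", cost=2, benefit=5),
    Measure("Biometric ID checks", "Proxy testers", cost=9, benefit=4),
]

for m in prioritize(measures, criticality):
    print(f"{m.threat:<14} {m.name}")
```

Here the biometric check is dropped because its estimated cost exceeds its benefit, echoing the "will not spend big bucks on things like biometrics" decision in the first example table.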

4. Identify data forensics that might detect issues.

The adage "An ounce of prevention is worth a pound of cure" is a cliché in the field of test security, but it is a cliché for good reason and is certainly worth minding. Still, some test security threats will affect you no matter how many proactive measures you put into place. For those, you also need to consider which data forensic methods you might use to look for evidence that the threats are occurring. There is a wide range of such analyses; here is a blog post that discusses some of them.
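As a deliberately simple illustration for the copying/collusion threat from Step 1, the sketch below counts, for every pair of examinees, how many items they answered with the same *incorrect* option, and flags pairs at or above a threshold. The answer key, responses, and threshold are invented, and this raw count is not one of the published collusion indices; operational analyses use statistics that account for how often such agreement would occur by chance.

```python
from itertools import combinations


def identical_incorrect(resp_a, resp_b, key):
    """Count items where both examinees chose the same wrong option."""
    return sum(1 for a, b, k in zip(resp_a, resp_b, key) if a == b and a != k)


def flag_pairs(responses, key, threshold):
    """Return (examinee, examinee, count) for pairs meeting the threshold."""
    flagged = []
    for (id_a, ra), (id_b, rb) in combinations(responses.items(), 2):
        count = identical_incorrect(ra, rb, key)
        if count >= threshold:
            flagged.append((id_a, id_b, count))
    return flagged


# Hypothetical 10-item answer key and response strings.
key = "ABCDABCDAB"
responses = {
    "E1": "ABCDABCDAB",  # all correct
    "E2": "ACCDBBCAAB",  # three wrong answers
    "E3": "ACCDBBCAAB",  # same three wrong answers as E2
    "E4": "ABDDABCDCB",  # two wrong answers, different from E2/E3
}

print(flag_pairs(responses, key, threshold=3))
```

Shared wrong answers are more telling than shared right answers, since two well-prepared examinees will naturally agree on the correct options. A flag like this is a starting point for investigation, not proof of cheating.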

5. Have a plan for how to deal with issues, like a candidate found cheating.

This is an essential component of the test security plan. What will you do if you find strong evidence of students copying off each other, or of candidates using a brain dump site?

Note how this methodology is similar to job analysis, which rates job tasks or KSAs on their frequency and criticality/importance, typically multiplies those values, and then ranks the tasks by the result. It is a respected methodology for studying the nature of work, so much so that accreditation requires it as the basis for developing a professional certification exam. More information is available here.

What can I do about these threats to test security?

There are four things you can do to address threats to test security, as was implicitly described above:

1. Prevent – In some situations, you might be able to put measures in place that fully prevent the issue from occurring. Losing paper exam booklets? Move online. Parents yelling answers in the window? Hold the test in a location with no parents allowed.

2. Deter – In most cases, you will not be able to prevent the threat outright, but you can deter it. Deterrents can be up front or after the fact. An up-front deterrent would be a proctor present during the exam. An after-the-fact deterrent would be the threat of a ban from practicing in a profession if you are caught cheating.

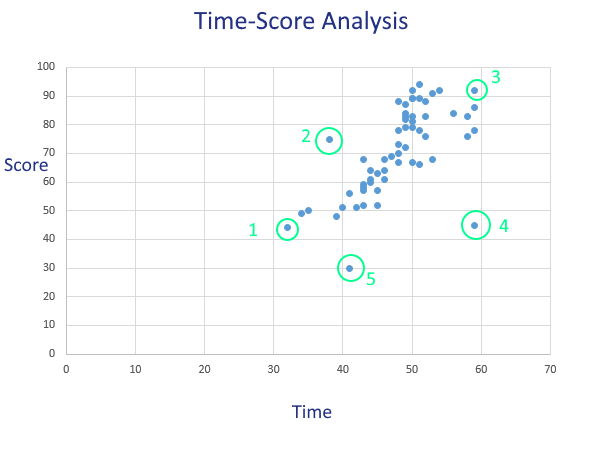

3. Detect – You can't control all aspects of delivery. Fortunately, there is a wide range of data forensic approaches you can use to detect anomalies. Not every anomaly points to a security breach, though: low item response times could indicate pre-knowledge, or simply a student who doesn't care.

4. Mitigate – Put procedures into place that reduce the effect of the threat. Examinees stealing your items? You can frequently rotate test forms. Examinees might still steal but at least items are only out for 3 months instead of 5 years, for example.

The first two pieces are essential components of standardized testing. The word "standardized" in that phrase does not refer to educational standards; it refers to making each person's interaction with the test as uniform as possible, so that we remove as many outside variables that could affect test scores as we can.
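The Detect point about response times can be illustrated with a sketch that flags examinees whose total testing time is unusually low relative to the group, using a z-score cutoff. The examinee IDs, times, and the -2.0 cutoff are hypothetical assumptions, and, as noted above, a flag is only a lead for follow-up: a fast examinee may have pre-knowledge or may simply not care.

```python
import statistics


def flag_fast_examinees(total_seconds, z_cutoff=-2.0):
    """Return {examinee: z-score} for examinees whose total testing time is
    at or below z_cutoff standard deviations from the group mean."""
    times = list(total_seconds.values())
    mean = statistics.mean(times)
    sd = statistics.stdev(times)  # sample standard deviation
    flags = {}
    for examinee, seconds in total_seconds.items():
        z = (seconds - mean) / sd
        if z <= z_cutoff:
            flags[examinee] = round(z, 2)
    return flags


# Hypothetical total testing times, in seconds, for nine examinees.
total_seconds = {
    "E1": 3000, "E2": 3100, "E3": 2900, "E4": 3050, "E5": 2950,
    "E6": 3000, "E7": 3100, "E8": 2900, "E9": 600,
}

print(flag_fast_examinees(total_seconds))
```

Because a single extreme value inflates both the mean and the standard deviation, a robust alternative such as the median and median absolute deviation may flag outliers more reliably. Examining per-item times rather than totals can also show *which* items a candidate seemed to know in advance.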

Examples

This first example is for an international certification. Such exams are very high stakes and therefore require many levels of security.

| Threat | Risk (1-5) | Notes | Result |
|---|---|---|---|
| Content theft | 5 | Huge risk of theft; expensive to republish | Need all the help we can get. Thieves can make real money by stealing our content. We will have in-person proctoring in high-security centers and also use a lockdown browser. All data will be analyzed with SIFT. |
| Pre-knowledge | 5 | Lots of brain dump sites | We definitely need safeguards to deter use of brain dump sites. We search the web to find sites and issue DMCA takedown notices. We analyze all candidate data to compare to brain dumps. We also use Trojan Horse items. |
| Proxy testers | 3 | Our test is too esoteric | We need basic procedures in place to ensure identity, but will not spend big bucks on things like biometrics. |
| Proctor influence | 3 | Proctors couldn't help much, but they could steal content | Ensure that all proctors are vetted by a third party such as our delivery vendor. |

Now, let's assume that the same organization also delivers a practice exam for this certification. The practice exam has much lower stakes and therefore needs much less security.

| Threat | Risk (1-5) | Notes | Result |
|---|---|---|---|
| Content theft | 2 | You don't want someone to steal the items and sell them, but it is not as big a deal as the Cert; cheap to republish | Need some deterrence, but in-person proctoring is not worth the investment. Let's use a lockdown browser. |
| Pre-knowledge | 1 | No reason to do this; actually hurts candidate | No measures |
| Proxy testers | 1 | Why would you pay someone else to take your practice test? Actually hurts candidate. | No measures |
| Proctor influence | 1 | N/A | No measures |

It’s an arms race!

Because test security is an ongoing arms race, you will need to periodically re-evaluate using this methodology, just like certifications are required to re-perform a job analysis study every few years because professions can change over time. New threats may present themselves while older ones fall by the wayside.

Of course, the approach discussed here is not a panacea, but it is certainly better than haphazardly putting measures in place. One of my favorite quotes is “If you aim at nothing, that’s exactly what you will hit.” If you have some goal and plan in mind, you have a much greater chance of success in minimizing threats to test security than if your organization simply puts the same measures in place for all programs without comparison or evaluation.

Interested in test security as a more general topic? Attend the Conference on Test Security.