Technology-enhanced items are assessment items (questions) that use technology to improve the interaction of a test question in digital assessment, over and above what is possible with paper. Tech-enhanced items can improve examinee engagement (important with K-12 assessment), assess complex concepts with higher fidelity, improve precision/reliability, and enhance face validity/sellability.

To some extent, the last word is the key one; tech-enhanced items simply look sexier and therefore make an assessment platform easier to sell, even if they don’t actually improve assessment. I’d argue that there are also technology-enabled items, which are distinct, as discussed below.

What is the goal of technology-enhanced items?

The goal is to improve assessment by increasing things like reliability/precision, validity, and fidelity. However, a number of technology-enhanced items (TEIs) are actually designed more for sales purposes than psychometric purposes. So, how do we know if TEIs improve assessment? That, of course, is an empirical question that is best answered with an experiment. But let me suggest one metric to address this question: how far does the item go beyond just reformulating a traditional item format to use current user-interface technology? I would define the reformulation of a traditional format as a fake TEI, while going beyond it would define a true TEI.

An alternative nomenclature might be to call the reformulations technology-enhanced items and the true uses of technology technology-enabled items (Almond et al., 2010; Bryant, 2017), as the latter would not be possible without technology.

A great example of this is the relationship between a traditional multiple response item and certain types of drag and drop items. There are a number of different ways that drag and drop items can be created, but for now, let’s use the example of a format that asks the examinee to drag text statements into a box.

Examples of this are K-12 assessment items from PARCC that ask the student to read a passage, then ask questions about it.

The item is scored with integers from 0 to K where K is the number of correct statements; the integers are often then used to implement the generalized partial credit model for final scoring. This would be true regardless of whether the item was presented as multiple response vs. drag and drop. The multiple response item, of course, could just as easily be delivered via paper and pencil. Converting it to drag and drop enhances the item with technology, but the interaction of the student with the item, psychometrically, remains the same.
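To make the equivalence concrete, here is a minimal Python sketch of the 0-to-K scoring described above. The function name `score_item`, the statement labels, and the rule of simply counting correct statements with no penalty for incorrect ones are all assumptions for illustration; real programs may cap the number of selections or use a different rubric.

```python
def score_item(selected, correct):
    """Return an integer score from 0 to K, where K = len(correct).

    Assumption: one point per correct statement chosen, no penalty
    for incorrect statements. This rubric is hypothetical.
    """
    return len(set(selected) & set(correct))


# Hypothetical key: statements A, C, and E are correct, so K = 3.
correct_statements = {"A", "C", "E"}

# Multiple response: the examinee ticks checkboxes A, B, C.
multiple_response = ["A", "B", "C"]

# Drag and drop: the examinee drags statements C, A, B into the box.
drag_and_drop = ["C", "A", "B"]

mr_score = score_item(multiple_response, correct_statements)
dd_score = score_item(drag_and_drop, correct_statements)

print(mr_score, dd_score)
```

Both formats reduce to the same set of chosen statements, so the scoring function cannot tell them apart. That is the sense in which the interaction is psychometrically unchanged.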

Some True TEIs, or Technology Enabled Items

Of course, the past decade or so has witnessed stronger innovation in item formats. Gamified assessments change how the interaction of person and item is approached, though this is arguably not as relevant for high-stakes assessment due to concerns of validity. There are also simulation items. For example, a test for a construction crane operator might provide an interface with crane controls and ask the examinee to complete a task. Even at the K-12 level there can be such items, such as the simulation of a science experiment where the student is given various test tubes or other instruments on the screen.

Both of these approaches are extremely powerful but have a major disadvantage: cost. They are typically custom-designed. In the case of the crane operator exam or even the science experiment, you would need to hire software developers to create this simulation. There are now some simulation-development ecosystems that make this process more efficient, but the items still involve custom authoring and custom scoring algorithms.

To address this shortcoming, there is a new generation of self-authored item types that are true TEIs. By “self-authored” I mean that a science teacher would be able to create these items themselves, just like they would a multiple choice item. The amount of technology leveraged is somewhere between a multiple choice item and a custom-designed simulation, providing a compromise of reduced cost but still increasing the engagement for the examinee. A major advantage of this approach is that the items do not need custom scoring algorithms, and instead are typically scored via point integers, which enables the use of polytomous item response theory.
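As one illustration of what polytomous item response theory does with integer scores, here is a minimal sketch of the generalized partial credit model mentioned earlier. It computes the probability of each score category from 0 to K for a single examinee. The function name and all parameter values (`theta`, `a`, `thresholds`) are hypothetical; in practice the item parameters would be estimated from response data with calibration software.

```python
import math


def gpcm_probabilities(theta, a, thresholds):
    """Category probabilities P(X = k | theta) for k = 0..K under the GPCM.

    theta:      examinee ability
    a:          item discrimination
    thresholds: step parameters b_1..b_K (K = highest possible score)
    """
    # Cumulative logits: z_0 = 0, z_k = sum over v = 1..k of a * (theta - b_v)
    z = [0.0]
    for b in thresholds:
        z.append(z[-1] + a * (theta - b))

    # Subtract the maximum before exponentiating for numerical stability.
    z_max = max(z)
    exp_z = [math.exp(value - z_max) for value in z]
    total = sum(exp_z)
    return [e / total for e in exp_z]


# Hypothetical item scored 0-3, taken by an examinee with theta = 0.5.
probs = gpcm_probabilities(theta=0.5, a=1.2, thresholds=[-1.0, 0.0, 1.0])
expected_score = sum(k * p for k, p in enumerate(probs))

for k, p in enumerate(probs):
    print(f"P(score = {k}) = {p:.3f}")
print(f"Expected score = {expected_score:.3f}")
```

Because the model only needs the integer score, the same function applies whether the points came from a multiple response item, a drag and drop item, or a self-authored TEI.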

Are we at least moving forward? Not always!

There is always pushback against technology, and in this topic the counterexample is the gridded item type. It goes in the reverse direction of innovation, because it doesn't take a traditional format and reformulate it for current UI. Instead, it ignores the capabilities of current UI (in fact, UI for the past 20+ years) and is therefore a step backward. With that item type, students are presented with a bubble sheet from a 1960s-style paper exam on a computer screen, and asked to fill in the bubbles by clicking on them rather than using a pencil on paper.

Another example is the evidence-based selected response (EBSR) item type from the artist formerly known as PARCC. It was a new item type intended to assess deeper understanding, but it did not use any tech-enhancement or -enablement, instead asking two traditional questions in a linked manner. As any psychometrician can tell you, this approach ignored basic assumptions of psychometrics, so you can guess the quality of measurement that it put out.

How can I implement TEIs?

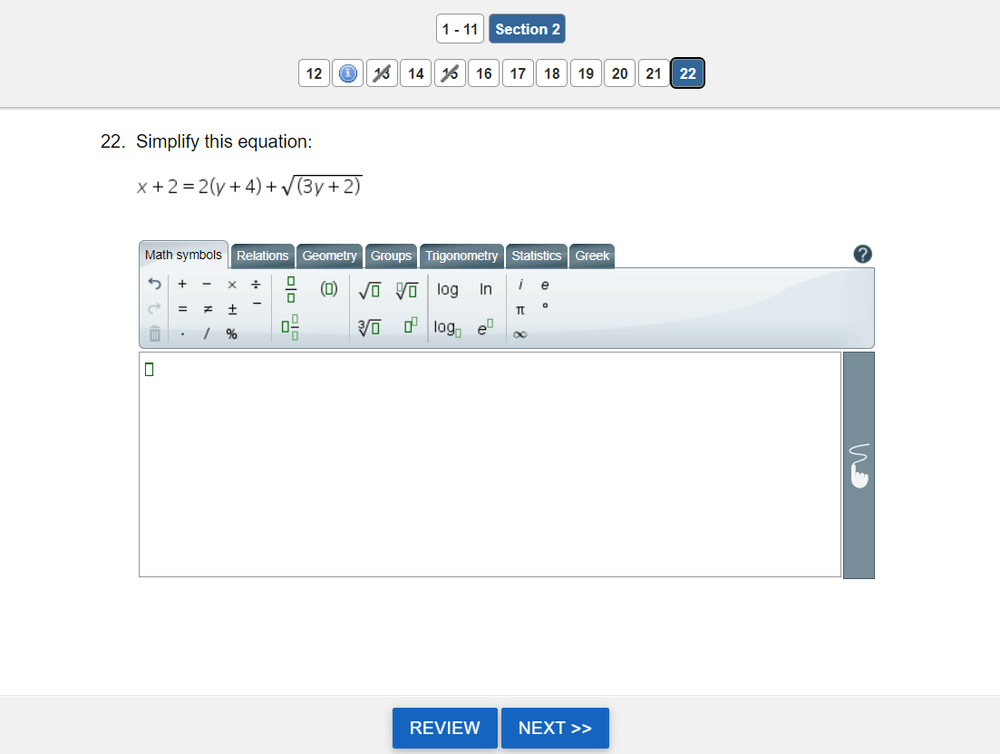

It takes very little software development expertise to develop a platform that supports multiple choice items. An item like the graphing one above, though, takes substantial investment. So there are relatively few platforms that can support these, especially with best practices like workflow item review or item response theory.

Nathan Thompson earned his PhD in Psychometrics from the University of Minnesota, with a focus on computerized adaptive testing. His undergraduate degree was from Luther College with a triple major of Mathematics, Psychology, and Latin. He is primarily interested in the use of AI and software automation to augment and replace the work done by psychometricians, which has provided extensive experience in software design and programming. Dr. Thompson has published over 100 journal articles and conference presentations, but his favorite remains https://scholarworks.umass.edu/pare/vol16/iss1/1/ .