Distractor analysis is the process of evaluating how the incorrect answers perform relative to the correct answer for multiple-choice items on a test. It is a key step in psychometric analysis, where item and test performance are evaluated as part of documenting test reliability and validity.

What is a distractor?

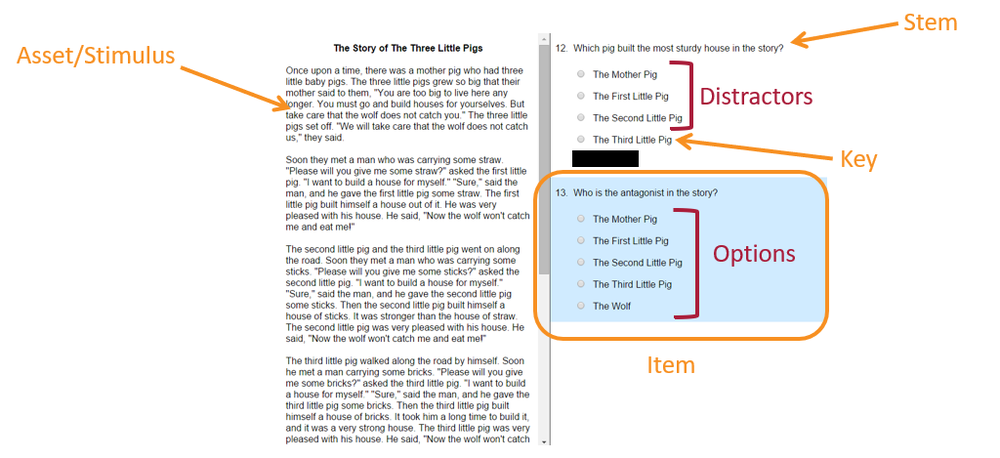

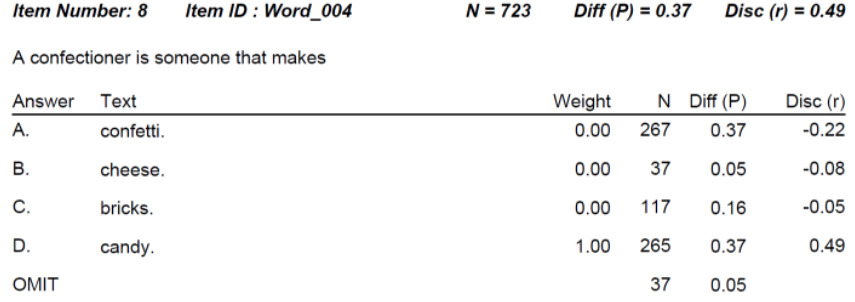

An item distractor, also known as a foil or a trap, is an incorrect option for a selected-response item on an assessment. A multiple-choice question offers several answer options: one is the key (the correct answer), and the rest are distractors (wrong answers). Distractors should not be just any wrong answers; they have to be answers an examinee could plausibly choose after making a mistake while looking for the right option. In short, distractors are feasible answers that an examinee might select because of a misjudgment or partial knowledge/understanding. A great example is later in this article with the word “confectioner.”

What makes a good item distractor?

One word: plausibility. We need the item distractor to attract examinees. If it is so irrelevant that no one considers it, then it does not do any good to include it in the item. Consider the following item.

What is the capital of the United States of America?

A. Los Angeles

B. New York

C. Washington, D.C.

D. Mexico City

The last option is quite implausible: not only is it outside the USA, but it mentions another country in the name, so no student is likely to select it. This then becomes a three-horse race, and students have a 1 in 3 chance of guessing correctly. This certainly makes the item easier. How much do distractors matter? Well, how much is the difficulty affected by this new set?

What is the capital of the United States of America?

A. Paris

B. Rome

C. Washington, D.C.

D. Mexico City
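The effect on guessing can be put in numbers. Here is a minimal sketch, assuming an examinee who does not know the answer rules out every implausible option and then guesses uniformly among the rest. That is a simplification, and the function name is ours.

```python
def guess_probability(n_options: int, n_implausible: int = 0) -> float:
    """Chance that a blind guess is correct after implausible options are ruled out.

    Assumes uniform guessing among the remaining options (a simplification).
    """
    remaining = n_options - n_implausible
    if remaining < 1:
        raise ValueError("at least the key must remain")
    return 1.0 / remaining


# Four options, none ruled out; one ruled out; all three distractors ruled out.
for ruled_out in (0, 1, 3):
    print(ruled_out, guess_probability(4, ruled_out))
```

If all three distractors in the second version of the item are ruled out, the guess is a sure thing, and the item no longer measures much of anything.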

In addition, the distractor needs to have negative discrimination. That is, while we want the correct answer to attract the more capable examinees, we want the distractors to attract the lower-ability examinees. If you have a distractor that you thought was incorrect, and it turns out to attract all the top students, you need to take a long, hard look at that question! To calculate discrimination statistics on distractors, you will need software such as Iteman.

What makes a bad item distractor?

Obviously, implausibility and positive discrimination are frequent offenders. But if you think more deeply about plausibility, the key is actually plausibility without being arguably correct. This can be a fine line to walk, and is a common source of problems for items. You might have a medical item that presents a scenario and asks for a likely diagnosis; perhaps one of the distractors is so unlikely as to be essentially implausible, but it might actually be possible for a small subset of patients under certain conditions. If the author and item reviewers did not catch this, the examinees probably will, and this will be evident in the statistics. This is one of the reasons it is important to do psychometric analysis of test results, including distractor analysis to evaluate the effectiveness of incorrect options in multiple-choice questions. In fact, accreditation standards often require you to go through this process at least once a year.

Why do we need a distractor analysis?

After a test form is delivered to examinees, distractor analysis should be carried out to make sure that all answer options work well, and that the item is performing well and defensibly. For example, it is expected that around 40-95% of students pick the correct answer, and that each distractor is chosen by fewer examinees than the key, with the remaining choices spread approximately equally across the distractors. Distractor analysis is usually done with classical test theory, even if item response theory is used for scoring, equating, and other tasks.
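These expectations can be turned into a quick screening check. The following is a minimal sketch, not a standard procedure: the function name is ours, the 0.40 and 0.95 bounds are taken from the rule of thumb above, and the option proportions in the example are made up.

```python
def screen_item(proportions: dict[str, float], key: str,
                p_min: float = 0.40, p_max: float = 0.95) -> list[str]:
    """Return a list of warnings for one item, given the proportion choosing each option."""
    flags = []
    p_key = proportions[key]
    # The proportion choosing the key should fall in the expected range.
    if not (p_min <= p_key <= p_max):
        flags.append(f"key {key}: P={p_key:.2f} outside [{p_min:.2f}, {p_max:.2f}]")
    # No distractor should be as popular as the key.
    for option, p in proportions.items():
        if option != key and p >= p_key:
            flags.append(f"distractor {option}: P={p:.2f} is not below the key")
    return flags


# Hypothetical proportions for two items.
print(screen_item({"A": 0.10, "B": 0.70, "C": 0.10, "D": 0.10}, key="B"))
print(screen_item({"A": 0.05, "B": 0.45, "C": 0.40, "D": 0.10}, key="C"))
```

A flag is only a prompt to review the item; the proportions alone cannot tell you whether the key is wrong or the item is simply hard.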

How to do a distractor analysis

There are three main aspects:

- Option frequencies/proportions

- Option point-biserial

- Quantile plot

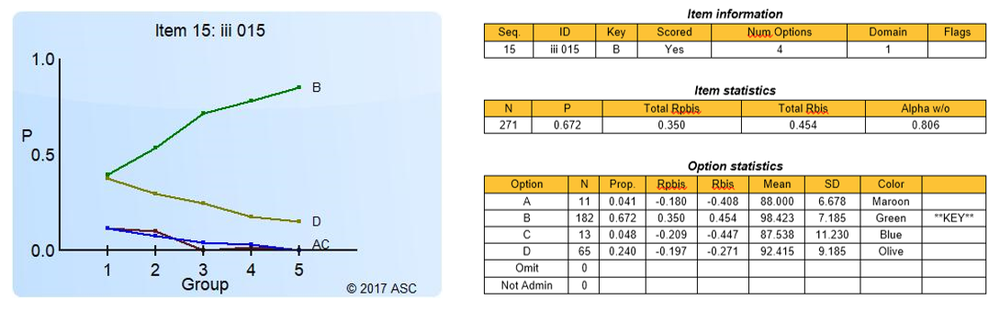

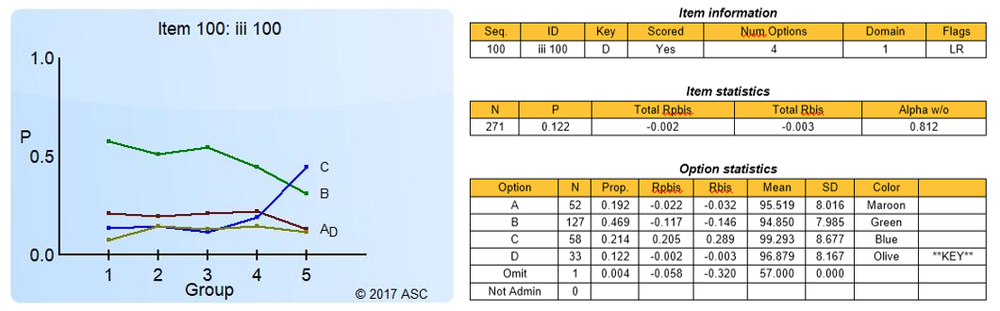

The option frequencies/proportions simply refer to how many examinees selected each answer. Usually this is expressed as a proportion and labeled as “P.” Did 70% choose the correct answer while the remaining 30% were evenly distributed amongst the 3 distractors? Great. But if only 40% chose the correct answer and 45% chose one of the distractors, you might have a problem on your hands. Perhaps the answer specified as the Key was not actually correct.

The point-biserials (Rpbis) will help you evaluate if this is the case. The point-biserial is an item-total correlation, meaning that we correlate scores on the item with the total score on the test, which is a proxy index of examinee ability. For an individual option, the "score" is simply whether or not the examinee selected that option. If 0.0, there is no relationship, which means the item is not correlated with ability, and therefore probably not doing any good. If negative, it means that the lower-ability students are selecting it more often; if positive, it means that the higher-ability students are selecting it more often. We want the correct answer to have a positive value and the distractors to have a negative value. This is one of the most important points in determining if the item is performing well.

In addition, there is a third approach, which is visual, called the quantile plot. It is very useful for diagnosing how an item is working and how it might be improved. This splits the sample up into blocks ordered by performance, such as 5 groups where Group 1 is 0-20th percentile, Group 2 is 21-40th, etc. We expect the highest-scoring group to have a high proportion of examinees selecting the correct answer and a low proportion selecting the distractors, and vice versa for the lowest-scoring group. You can see how this aligns with the concept of point-biserial. An example of this is below.

Note that the P and point-biserial for the correct answer serve as “the” statistics for the item as a whole. The P for the item is called the item difficulty or facility statistic.
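All three statistics can be computed in a few lines. The following is a minimal sketch in Python with NumPy, not how Iteman or any other package does it. The function name and the data are hypothetical. It assumes one item, with each examinee's selected option and total test score, and it uses the uncorrected total score (the item itself is included in the total).

```python
import numpy as np


def distractor_analysis(responses, total_scores, options="ABCD", n_groups=5):
    """Per-option proportion (P), point-biserial (Rpbis), and proportion by score group."""
    responses = np.asarray(responses)
    total_scores = np.asarray(total_scores, dtype=float)
    n = len(total_scores)

    # Rank examinees by total score and cut the ranking into n_groups blocks
    # (group 0 = lowest scorers). Requires n >= n_groups.
    order = np.argsort(total_scores, kind="stable")
    group = np.empty(n, dtype=int)
    group[order] = np.arange(n) * n_groups // n

    stats = {}
    for option in options:
        chose = (responses == option).astype(float)  # 1 if this option was selected
        if chose.std() == 0 or total_scores.std() == 0:
            rpbis = float("nan")  # correlation is undefined without variation
        else:
            # Point-biserial = Pearson correlation of a 0/1 variable with the total score.
            rpbis = float(np.corrcoef(chose, total_scores)[0, 1])
        by_group = [float(chose[group == g].mean()) for g in range(n_groups)]
        stats[option] = {"P": float(chose.mean()), "Rpbis": rpbis, "by_group": by_group}
    return stats


# Made-up data for ten examinees on one item whose key is B.
responses = ["A", "C", "A", "B", "C", "B", "B", "B", "B", "B"]
totals = [2, 3, 4, 5, 6, 7, 8, 9, 10, 11]

stats = distractor_analysis(responses, totals)
for option, s in stats.items():
    print(f"{option}: P={s['P']:.2f}  Rpbis={s['Rpbis']:.2f}  by group={s['by_group']}")
```

The `by_group` values are the points you would plot for each option in a quantile plot: for a well-behaved item the key's values rise from the lowest group to the highest, while each distractor's values fall. With real data you would want far more than ten examinees, and many analysts prefer a corrected total that excludes the item being analyzed.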

Examples of a distractor analysis

Here is an example of a good item. The P is medium (67% correct) and the Rpbis is strongly positive for the correct answer while strongly negative for the incorrect answers. This translates to a clean quantile plot where the curve for the correct answer (B) goes up while the curves for the incorrect answers go down. An ideal situation.

Now contrast that with the following item. Here, only 12% of examinees got it correct, and the Rpbis for the keyed answer was negative. Answer C had 21% and a nicely positive Rpbis, as well as a quantile curve that goes up. This item should be reviewed to see if C is actually correct, or perhaps B, which had the most responses. Most likely, this item will need a total rewrite!
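A pattern like this is easy to screen for automatically. Here is a minimal sketch, with a function name of our own choosing and made-up Rpbis values (not the values from the item above): it reports whether the keyed answer discriminates negatively and lists any distractors that discriminate positively.

```python
def review_key(rpbis: dict[str, float], key: str) -> dict:
    """Flag a possibly miskeyed item from the option point-biserials."""
    return {
        # A negative Rpbis on the key means lower-ability examinees choose it more often.
        "key_negative": rpbis[key] < 0,
        # Distractors with positive Rpbis attract higher-ability examinees.
        "candidates": [opt for opt, r in rpbis.items() if opt != key and r > 0],
    }


# Hypothetical point-biserials for an item keyed as A.
print(review_key({"A": -0.15, "B": -0.05, "C": 0.30, "D": -0.10}, key="A"))
```

The output only identifies options worth a second look by a subject-matter expert; the statistics cannot decide on their own which answer is actually correct.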

Note that an item can be extremely difficult but still perform well. Here is an example where the distractor analysis supports continued use of the item. The distractor is just extremely attractive to lower-ability students; they think that a confectioner makes confetti, since those two words look the closest. Look how strongly positive the Rpbis is for the correct answer, and how strongly negative it is for that distractor. This is a good result!

Nathan Thompson earned his PhD in Psychometrics from the University of Minnesota, with a focus on computerized adaptive testing. His undergraduate degree was from Luther College with a triple major of Mathematics, Psychology, and Latin. He is primarily interested in the use of AI and software automation to augment and replace the work done by psychometricians, which has provided extensive experience in software design and programming. Dr. Thompson has published over 100 journal articles and conference presentations, but his favorite remains https://scholarworks.umass.edu/pare/vol16/iss1/1/ .