What are enemy items?

Enemy items is a psychometric term for two test questions (items) that should not appear together. For linear tests, this means they should not be on the same test form. For linear on the fly testing (LOFT) and computerized adaptive testing (CAT), where each examinee receives a different set of items, it means no single examinee should see both. There are several reasons why two items might be considered enemies:

- Too similar: the text of the two items is almost the same

- One gives away the answer to the other

- The items are on the same topic/answer, even if the text is different.

How do we find enemy items?

There are two ways (as there often are): manual and automated.

Manual means that humans read the items and deliberately mark pairs of them as enemies. For example, a reviewer checking new items submitted by 5 authors might find two that cover the same concept. The reviewer would mark them as enemies.
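A manual enemy designation is essentially a pairwise, symmetric relation between item IDs. The sketch below shows one way a reviewer's decisions might be stored. It assumes items are identified by string IDs; the class, its method names, and the item IDs are hypothetical illustrations, not FastTest's API.

```python
from collections import defaultdict


class EnemyRegistry:
    """Hypothetical store of reviewer-marked enemy pairs (a symmetric relation)."""

    def __init__(self):
        # Map each item ID to the set of item IDs it is an enemy of.
        self._enemies = defaultdict(set)

    def mark_enemies(self, item_a, item_b):
        if item_a == item_b:
            raise ValueError("an item cannot be its own enemy")
        # Record both directions so a lookup works from either item.
        self._enemies[item_a].add(item_b)
        self._enemies[item_b].add(item_a)

    def enemies_of(self, item_id):
        # Return a copy so callers cannot modify the registry by accident.
        return set(self._enemies.get(item_id, set()))

    def are_enemies(self, item_a, item_b):
        return item_b in self._enemies.get(item_a, set())


registry = EnemyRegistry()
# A reviewer finds that items from two different authors cover the same concept.
registry.mark_enemies("AUTH1-017", "AUTH4-052")
print(registry.are_enemies("AUTH4-052", "AUTH1-017"))  # True
```

The sketch keeps the relation strictly pairwise. If A is an enemy of B and B is an enemy of C, it does not conclude that A and C are enemies; whether to treat enemies as groups instead is a policy decision.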

Automated means using a machine learning algorithm, such as one that applies natural language processing (NLP) to every item in a pool and then uses distance or similarity metrics to quantify how similar each pair of items is. This approach can miss some cases, such as two items that cover the same topic but use fairly different text. It is also difficult to apply when items contain formulas, multimedia files, or other elements that NLP cannot analyze.
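A minimal sketch of the automated approach follows. It uses a simple bag-of-words cosine similarity in place of a full NLP model; a production system would more likely use TF-IDF weighting, stop-word removal, or sentence embeddings. The 0.8 threshold and the sample items are arbitrary assumptions, and flagged pairs are treated as candidates for human review rather than confirmed enemies.

```python
import math
import re
from collections import Counter
from itertools import combinations


def tokenize(text):
    # Lowercase and keep alphanumeric word tokens only.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(text_a, text_b):
    # Represent each item's text as a term-frequency vector (bag of words).
    a, b = Counter(tokenize(text_a)), Counter(tokenize(text_b))
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    norm = norm_a * norm_b
    return dot / norm if norm else 0.0


def flag_enemy_candidates(items, threshold=0.8):
    """Return (id_a, id_b, score) for every item pair at or above the threshold."""
    flagged = []
    for (id_a, text_a), (id_b, text_b) in combinations(items.items(), 2):
        score = cosine_similarity(text_a, text_b)
        if score >= threshold:
            flagged.append((id_a, id_b, round(score, 3)))
    # Most similar pairs first, so reviewers see the strongest candidates first.
    return sorted(flagged, key=lambda pair: pair[2], reverse=True)


pool = {
    "Q1": "What is the capital city of France?",
    "Q2": "Which city is the capital of France?",
    "Q3": "What is the boiling point of water at sea level?",
}
print(flag_enemy_candidates(pool))  # [('Q1', 'Q2', 0.857)]
```

Only Q1 and Q2 are flagged, because 6 of their 7 words overlap. A pair of items on the same topic with very different wording would score low here, and formulas or images are invisible to this method entirely.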

Why are enemy items a problem?

This violates the assumption of local independence: an examinee’s response to one item should not be affected by other items. It also puts the examinee in double jeopardy. If they don’t know that topic, they will get two questions wrong, not one. There are other potential issues as well, as discussed in this article.

What does this mean for test development?

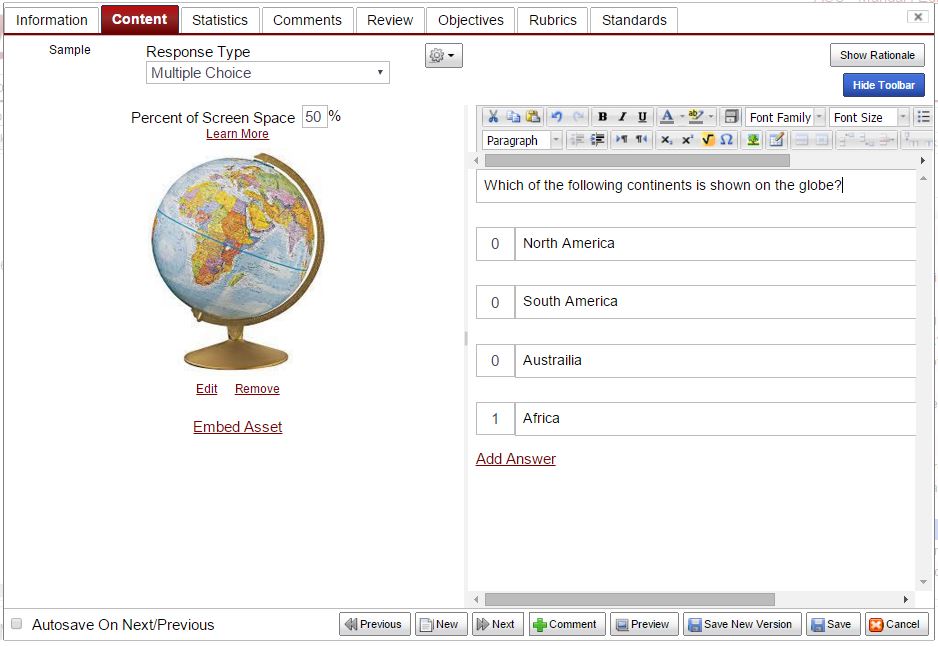

We want to identify enemy items and ensure that they don’t get used together. Your item banking and assessment platform should have functionality to track which items are enemies. You can sign up for a free account in FastTest to see an example.
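Enemy information might be enforced at test assembly or delivery time in the following way. This minimal sketch assumes enemies are stored as a dict mapping each item ID to a set of enemy IDs (a hypothetical format). One function checks a fixed linear form for conflicts. The other filters the pool during LOFT or CAT item selection so that no enemy of an already-administered item can be chosen. Function names are illustrative, not a real platform's API.

```python
from itertools import combinations


def form_conflicts(form, enemies):
    """Return enemy pairs that appear together on a linear form."""
    return [
        (a, b)
        for a, b in combinations(form, 2)
        if b in enemies.get(a, set()) or a in enemies.get(b, set())
    ]


def eligible_items(pool, administered, enemies):
    """Items still selectable in LOFT/CAT: unused and not an enemy of any used item."""
    used = set(administered)
    blocked = set(used)
    for item in used:
        blocked |= enemies.get(item, set())
    # Also block items that list a used item as an enemy, in case the
    # enemy data was recorded in only one direction.
    blocked |= {i for i in pool if enemies.get(i, set()) & used}
    return [i for i in pool if i not in blocked]


enemies = {"Q1": {"Q2"}, "Q2": {"Q1"}, "Q5": {"Q3"}}
print(form_conflicts(["Q1", "Q2", "Q4"], enemies))  # [('Q1', 'Q2')]
print(eligible_items(["Q1", "Q2", "Q3", "Q4", "Q5"], ["Q1", "Q3"], enemies))  # ['Q4']
```

In the CAT case, Q5 is excluded even though only Q5's entry records the Q3 conflict. Checking both directions makes the filter robust to incomplete enemy data.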

Nathan Thompson, PhD
