Response Time Effort

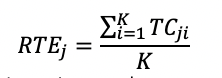

Wise and Kong (2005) defined an index, based on response time, to flag examinees who are not putting forth minimal effort. It is called the response time effort (RTE) index. Let K be the number of items in the test. The RTE for each examinee j is

$$\mathrm{RTE}_j = \frac{1}{K}\sum_{i=1}^{K} TC_{ji}$$

where $TC_{ji}$ is 1 if the response time on item i exceeds some minimum cutpoint, and 0 if it does not.
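The definition above (RTE as the share of the K items whose TC value is 1) can be sketched in a few lines of Python. This is a minimal illustration, not SIFT's implementation. It assumes response times in seconds are stored as one row per examinee and one column per item, and that the cutpoint is either a single value for every item or one value per item. The function name `response_time_effort` is hypothetical, and the response times in the usage example are invented.

```python
from typing import Sequence, Union


def response_time_effort(
    times: Sequence[Sequence[float]],
    cutpoint: Union[float, Sequence[float]],
) -> list[float]:
    """Return the RTE index for each examinee (one row of `times` per examinee).

    times[j][i] is examinee j's response time (seconds) on item i.
    cutpoint is one threshold for all items, or a sequence with one per item.
    """
    rte = []
    for row in times:
        k = len(row)  # K, the number of items
        if k == 0:
            raise ValueError("each examinee needs at least one response time")
        cuts = [cutpoint] * k if isinstance(cutpoint, (int, float)) else list(cutpoint)
        if len(cuts) != k:
            raise ValueError("need one cutpoint per item")
        # TC_ji = 1 when the response time exceeds the cutpoint, else 0
        tc = [1 if t > c else 0 for t, c in zip(row, cuts)]
        rte.append(sum(tc) / k)
    return rte


# Invented response times (seconds) for two examinees on four items
times = [
    [45.0, 38.2, 51.7, 40.1],  # steady, engaged pace
    [45.0, 3.1, 50.2, 2.4],    # two very fast responses
]
print(response_time_effort(times, cutpoint=20))  # [1.0, 0.5]
```

A response exactly at the cutpoint counts as 0 here, because the definition says the time must *exceed* the cutpoint.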

How do I interpret Response Time Effort?

RTE is therefore the proportion of items on which the examinee spent more time than the specified cutpoint, so it ranges from 0 to 1. You, as the researcher, need to decide what that cutpoint is: 10 seconds, 30 seconds… what makes sense for your exam? RTE is then interpreted as an index of examinee engagement. Values near 1 mean the examinee spent at least the minimum plausible time on nearly every item, while lower values mean more items were rushed.

If you think that each item should take at least 20 seconds to answer (perhaps with an average of 45 seconds), and Examinee X took less than 20 seconds on half the items, then their RTE is 0.5. Clearly they were flying through and not giving the effort that they should. Examinees could be flagged like this for removal from calibration data. You could even use this in real time and put a message on the screen: "Hey, stop slacking, and answer the questions!"
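The two uses described above, flagging examinees for removal from calibration data and warning them in real time, could look roughly like the following sketch. It recomputes RTE with a single cutpoint so the block runs on its own. The function names, the 0.90 flag threshold (`min_rte`) and the `min_items` guard are all hypothetical choices for illustration, not values from Wise and Kong (2005) or SIFT. Pick thresholds that make sense for your exam. The response times are invented, with Examinee X fast on half the items as in the 20-second example.

```python
from typing import Sequence


def rte(row: Sequence[float], cutpoint: float) -> float:
    """Proportion of items whose response time exceeds the cutpoint."""
    if not row:
        raise ValueError("need at least one response time")
    return sum(t > cutpoint for t in row) / len(row)


def flag_low_effort(
    times: dict[str, Sequence[float]], cutpoint: float, min_rte: float = 0.90
) -> list[str]:
    """IDs of examinees whose RTE is below min_rte (hypothetical threshold)."""
    return [eid for eid, row in times.items() if rte(row, cutpoint) < min_rte]


def realtime_warning(
    times_so_far: Sequence[float],
    cutpoint: float,
    min_rte: float = 0.90,
    min_items: int = 5,
) -> bool:
    """True once at least min_items are answered and the running RTE is below min_rte."""
    return len(times_so_far) >= min_items and rte(times_so_far, cutpoint) < min_rte


# Invented response times (seconds), 20-second cutpoint
times = {
    "A": [41, 52, 38, 47, 60, 33],  # engaged throughout
    "X": [45, 4, 50, 3, 39, 2],     # under 20 seconds on half the items
}
print(flag_low_effort(times, cutpoint=20))             # ['X']
print(realtime_warning([45, 4, 50, 3, 39], cutpoint=20))  # True
```

The `min_items` guard is one way to avoid nagging an examinee after a single quick answer. The running RTE is only checked once a few items have been answered.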

How do I implement RTE?

Want to calculate Response Time Effort on your data? Download the free software SIFT. SIFT provides comprehensive psychometric forensics, flagging examinees with potential issues such as poor motivation, stealing content, or copying amongst examinees.

Nathan Thompson, PhD