Bloom’s Taxonomy and Cognitive Levels in Assessment: A Key to Effective Testing

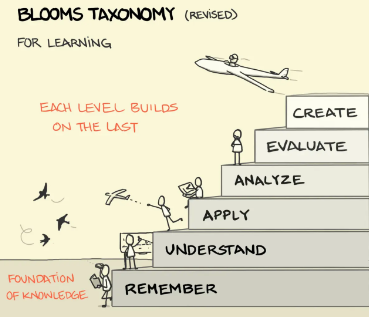

Developing effective assessments is a cornerstone of educational practice, essential for measuring student achievement and informing instructional decisions. Bloom’s Taxonomy, a hierarchical classification of cognitive levels ranging from lower- to higher-order thinking, provides a valuable framework for test development, and using it effectively can improve the validity of assessments.

Why Use Bloom’s Taxonomy in Assessment?

By integrating Bloom’s Taxonomy into processes such as test blueprint development and item design, educators can create assessments that not only evaluate basic knowledge but also foster critical thinking, problem solving, and the application of concepts in new contexts (generalization).

Using Bloom’s Taxonomy in a test blueprint involves aligning each learning objective with its corresponding cognitive level (remembering, understanding, applying, analyzing, evaluating, or creating). A test blueprint serves as a strategic plan that outlines the distribution of test items across content areas and cognitive skills. By mapping each learning objective to a specific level of Bloom’s Taxonomy, educators can build a balanced assessment that reflects the intended curriculum emphasis. This alignment helps ensure that the test measures a range of abilities, from factual recall (remembering) to complex analysis, evaluation, and synthesis (analyzing through creating).
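The arithmetic behind such a distribution can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed procedure: the 40-item test length, the emphasis weight assigned to each level, and the helper name `allocate_items` are all hypothetical. The sketch turns proportional weights into whole-number item counts with the largest-remainder method, so the counts always add up to the intended test length.

```python
from math import floor

# Hypothetical emphasis weights per Bloom level (assumed for illustration; they sum to 1.0).
TARGET_WEIGHTS = {
    "Remembering": 0.15,
    "Understanding": 0.20,
    "Applying": 0.25,
    "Analyzing": 0.20,
    "Evaluating": 0.15,
    "Creating": 0.05,
}


def allocate_items(weights: dict[str, float], total_items: int) -> dict[str, int]:
    """Split total_items across levels in proportion to weights (largest-remainder method)."""
    weight_sum = sum(weights.values())
    # Exact (fractional) number of items each level would receive.
    exact = {level: total_items * w / weight_sum for level, w in weights.items()}
    # Start from the whole-number part of each share.
    counts = {level: floor(share) for level, share in exact.items()}
    # Give the leftover items to the levels with the largest fractional remainders.
    leftover = total_items - sum(counts.values())
    by_remainder = sorted(exact, key=lambda level: exact[level] - counts[level], reverse=True)
    for level in by_remainder[:leftover]:
        counts[level] += 1
    return counts


if __name__ == "__main__":
    plan = allocate_items(TARGET_WEIGHTS, total_items=40)
    for level, n in plan.items():
        print(f"{level:<14}{n:>3}")
    print(f"{'Total':<14}{sum(plan.values()):>3}")
```

Rounding each share independently can leave a test one item short or long; handing out the leftover items by largest remainder avoids that while keeping every count within one item of its exact proportional share.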

In item design, Bloom’s Taxonomy guides the creation of test questions that target specific cognitive levels. For example, questions aimed at the “understanding” level might ask students to paraphrase a given passage, while those at the “applying” level could present real-world scenarios that require using the learned principles (e.g., calculating the perimeter of a rectangle to determine how many meters of fencing to buy). Higher-order questions at the “analyzing,” “evaluating,” or “creating” levels challenge students to distinguish between arguments, critique methodologies, or design original solutions. This deliberate crafting of items ensures that assessments are not disproportionately focused on lower-order skills and instead promote deeper cognitive engagement.

Moreover, incorporating Bloom’s Taxonomy into test development can enhance the validity and reliability of assessments and help identify specific areas where students may struggle, allowing for targeted instructional interventions. By supporting a comprehensive evaluation of both foundational knowledge and advanced thinking skills, Bloom’s Taxonomy contributes to more meaningful assessments that support student growth and achievement, in classroom testing as well as in certification and other types of assessment.

Bloom’s Taxonomy is an important tool for developing valid educational assessments because it helps target content at the appropriate level of complexity for the intended population. In psychometrics and assessment, understanding cognitive levels is essential for creating effective exams that accurately measure a candidate’s knowledge, skills, and abilities. Cognitive levels are typically arranged in a hierarchy that reflects how deeply an individual understands and processes information. This concept is foundational in education and testing, including professional certification exams, where assessing not just what candidates know but how they apply it is critical.

One of the most widely recognized frameworks for cognitive levels is Bloom’s Taxonomy, developed in the 1950s by educational psychologist Benjamin Bloom and his colleagues. It classifies cognitive abilities into six levels, ranging from basic recall of facts to the more complex evaluation and creation of new knowledge; the level names used in this article (remembering through creating) come from the 2001 revision by Anderson and Krathwohl. For exam creators, this taxonomy is valuable because it helps design assessments that challenge different levels of thinking.

Here’s an overview of the cognitive levels in Bloom’s Taxonomy, with examples relevant to assessment design.

1. Remembering

This is the most basic level of cognition and involves recalling facts or information. In an exam, this could mean asking candidates to recall specific definitions or procedures.

- Example: “Define the term ‘psychometrics.’”

- Exam Insight: While important, questions that assess only the ability to remember facts may not provide a full picture of a candidate’s competence. They’re often used in conjunction with higher-level questions to ensure foundational knowledge.

2. Understanding

The next level involves comprehension—being able to explain ideas or concepts. Rather than simply recalling information, the test-taker demonstrates an understanding of what the information means.

- Example: “Explain the difference between formative and summative assessments.”

- Exam Insight: Understanding questions help gauge whether a candidate can interpret concepts correctly, which is essential for fields like psychometrics, where understanding testing methods and principles is key.

3. Applying

Application involves using information in new situations. This level goes beyond understanding by asking candidates to apply their knowledge in a practical context.

- Example: “Given a set of psychometric data, identify the most appropriate statistical method to analyze test reliability.”

- Exam Insight: This level of cognition is crucial in high-stakes exams, especially in certification contexts where candidates must demonstrate their ability to apply theoretical knowledge to real-world scenarios.

4. Analyzing

At this level, candidates are expected to break down information into components and explore relationships among the parts. Analysis questions often require deeper thinking and problem-solving skills.

- Example: “Analyze the factors that could lead to bias in a psychometric assessment.”

- Exam Insight: Analytical skills are critical for assessing a candidate’s ability to think critically about complex issues, which is essential in roles like test development or evaluation in assessment ecosystems.

5. Evaluating

Evaluation involves making judgments about the value of ideas or materials based on criteria and standards. This might include comparing different solutions or assessing the effectiveness of a particular approach.

- Example: “Evaluate the effectiveness of different psychometric models for ensuring the fairness of certification exams.”

- Exam Insight: Evaluation questions are typically found in advanced assessments, where candidates are expected to critique processes and propose improvements. This level is vital for ensuring that individuals in leadership roles can make informed decisions about the tools they use.

6. Creating

The highest cognitive level is creation, where candidates generate new ideas, products, or solutions based on their knowledge and analysis. This level requires innovative thinking and often asks for the synthesis of information.

- Example: “Design an assessment framework that incorporates both traditional and modern psychometric theories.”

- Exam Insight: Creating-level questions are rare in most standardized tests but may be used in specialized certifications where innovation and leadership are critical. This level of cognition assesses whether the candidate can go beyond existing knowledge and contribute to the field in new and meaningful ways.

Bloom’s Taxonomy and Cognitive Levels in High-Stakes Exams

When designing high-stakes exams—such as those used for professional certifications or employment tests—it’s important to strike a balance between assessing lower and higher cognitive levels. While remembering and understanding provide insight into the candidate’s foundational knowledge, analyzing and evaluating help gauge their ability to think critically and apply knowledge in practical scenarios.

For example, a psychometric exam for certifying test developers might include questions across all cognitive levels:

- Remembering: Questions that assess recall of psychometric principles, such as basic definitions and processes.

- Understanding: Questions that ask for an explanation of item response theory.

- Applying: Scenarios where candidates must apply these theories to improve test design.

- Analyzing: Situations where candidates analyze a poorly performing test item.

- Evaluating: Questions that ask candidates to critique the use of certain assessment methods.

- Creating: Tasks where candidates design new assessment tools.
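The analyzing-level task in this list, examining a poorly performing test item, usually starts from classical item statistics. The following Python sketch shows one minimal way to compute them under stated assumptions: responses are scored 0/1, the six-candidate data set is invented for illustration, the function names are hypothetical, and the 0.2 review cutoff is an assumed rule of thumb rather than a fixed standard. For each item it reports difficulty (the proportion of candidates answering correctly) and the corrected item-total correlation, that is, the correlation between the item and the total score on the remaining items.

```python
from math import sqrt


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation; returns 0.0 if either variable has no variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / sqrt(sxx * syy)


def item_analysis(responses: list[list[int]]) -> list[tuple[float, float]]:
    """Return (difficulty, corrected item-total correlation) for each item.

    responses[p][i] is 1 if candidate p answered item i correctly, else 0.
    """
    n_items = len(responses[0])
    totals = [sum(row) for row in responses]
    results = []
    for i in range(n_items):
        item = [row[i] for row in responses]
        difficulty = sum(item) / len(item)            # proportion correct (p-value)
        rest = [t - s for t, s in zip(totals, item)]  # total score excluding this item
        results.append((difficulty, pearson(item, rest)))
    return results


# Invented responses: 6 candidates x 4 items.
RESPONSES = [
    [1, 1, 1, 0],
    [1, 1, 1, 1],
    [1, 0, 1, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 1],
    [1, 0, 0, 1],
]

if __name__ == "__main__":
    for i, (p, r) in enumerate(item_analysis(RESPONSES), start=1):
        flag = "  <- review" if r < 0.2 else ""  # 0.2 cutoff is an assumed rule of thumb
        print(f"Item {i}: difficulty={p:.2f}, item-rest r={r:+.2f}{flag}")
```

In the invented data, item 4 is answered correctly more often by candidates who score lower on the rest of the test, so its item-rest correlation is negative: the kind of pattern a candidate at this level would be expected to spot and explain.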

Bloom’s Taxonomy and Learning Psychometrics: An Applied Example of Test Blueprint Development

Developing a test blueprint with Bloom’s Taxonomy helps ensure that assessments in psychometrics measure a range of cognitive skills. Here is an example of how you can apply Bloom’s Taxonomy to create a comprehensive test blueprint for a course in psychometrics.

Step 1. Identify content strands and their cognitive demands

Let’s consider the following strands and their corresponding cognitive demand:

| Content Strand | Cognitive Demand |
|---|---|
| Foundations of Psychometrics | Remembering: Recall basic definitions and concepts in psychometrics. Understanding: Explain fundamental principles and their significance. |
| Classical Test Theory | Understanding: Describe components of CTT. Applying: Use CTT formulas to compute test scores. Analyzing: Interpret the implications of test scores and error. |
| Item Response Theory | Understanding: Explain the basics of IRT models. Applying: Apply IRT to analyze test items. Analyzing: Compare IRT with CTT in terms of advantages and limitations. |
| Reliability and Validity | Understanding: Define different types of reliability and validity. Evaluating: Assess the reliability and validity of given tests. Analyzing: Identify factors affecting reliability and validity. |
| Test Development and Standardization | Applying: Develop test items following psychometric principles. Creating: Design a basic blueprint. Evaluating: Critique test items for bias and fairness. |
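To show what the applying- and analyzing-level demands in the Classical Test Theory strand can involve, here is a minimal Python sketch built on two standard CTT results: an observed score is modeled as a true score plus error (X = T + E), and the standard error of measurement is SEM = SD × √(1 − r_xx), where r_xx is the test's reliability. The function names and all numbers (an SD of 10, a reliability of 0.91, an observed score of 75) are hypothetical, and the ±1.96 SEM band assumes approximately normal measurement error.

```python
from math import sqrt


def standard_error_of_measurement(sd: float, reliability: float) -> float:
    """CTT standard error of measurement: SEM = SD * sqrt(1 - reliability)."""
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must be between 0 and 1")
    return sd * sqrt(1.0 - reliability)


def score_band(observed: float, sem: float, z: float = 1.96) -> tuple[float, float]:
    """Approximate band around an observed score (z = 1.96 for ~95%, assuming normal error)."""
    return observed - z * sem, observed + z * sem


if __name__ == "__main__":
    # Hypothetical test statistics, not taken from the article.
    sem = standard_error_of_measurement(sd=10.0, reliability=0.91)
    low, high = score_band(observed=75.0, sem=sem)
    print(f"SEM = {sem:.2f}; approximate 95% band: {low:.1f} to {high:.1f}")
```

Because the SEM shrinks as reliability rises, an analyzing-level item in this strand might ask candidates to interpret how a change in reliability affects the precision of an individual's score.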

Step 2. Use Bloom’s Taxonomy to elaborate a test blueprint

Using Bloom’s Taxonomy, we can create a test blueprint that aligns the content strands with appropriate cognitive levels.

The resulting test blueprint could look like this:

| Content Strand | Bloom’s Level | Learning Objective | # Items | Item Type |
|---|---|---|---|---|
| Foundations of Psychometrics | Remembering | Recall definitions and concepts | 5 | Multiple-choice |
| | Understanding | Explain principles and their significance | 4 | Short-answer |
| Classical Test Theory | Understanding | Describe components of CTT | 3 | True/false with explanation |
| | Applying | Compute test scores using CTT formulas | 5 | Calculation problems |
| | Analyzing | Interpret test scores and error implications | 4 | Data analysis questions |
| Item Response Theory | Understanding | Explain basics of IRT models | 3 | Matching |
| | Applying | Analyze test items using IRT | 4 | Problem solving |
| | Analyzing | Compare IRT and CTT | 3 | Comparative essays |
| Reliability and Validity | Understanding | Define types of reliability and validity | 4 | Fill-in-the-blank |
| | Analyzing | Identify factors affecting reliability and validity | 4 | Case studies |
| | Evaluating | Assess the reliability and validity of tests | 5 | Critical evaluations |
| Test Development and Standardization | Applying | Develop test items using psychometric principles | 4 | Item-writing exercises |
| | Creating | Design a basic test blueprint | 2 | Project-based tasks |
| | Evaluating | Critique test items for bias and fairness | 3 | Peer review assignments |

By first delineating the content strands and their associated cognitive demands and then applying Bloom’s Taxonomy, educators can develop a test blueprint that is both systematic and effective. This method helps ensure that assessments are comprehensive, balanced, and aligned with educational goals, ultimately improving the measurement of student learning in psychometrics.
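A quick way to check that balance is to tally the blueprint by level and by strand. The Python sketch below transcribes the rows of the blueprint table into a list and sums the item counts. The grouping of analyzing, evaluating, and creating as higher-order follows this article's own usage; treating the remaining three levels as lower-order is an assumption, and the function name `summarize` is hypothetical.

```python
from collections import Counter

# Rows from the blueprint table: (content strand, Bloom level, number of items).
BLUEPRINT = [
    ("Foundations of Psychometrics", "Remembering", 5),
    ("Foundations of Psychometrics", "Understanding", 4),
    ("Classical Test Theory", "Understanding", 3),
    ("Classical Test Theory", "Applying", 5),
    ("Classical Test Theory", "Analyzing", 4),
    ("Item Response Theory", "Understanding", 3),
    ("Item Response Theory", "Applying", 4),
    ("Item Response Theory", "Analyzing", 3),
    ("Reliability and Validity", "Understanding", 4),
    ("Reliability and Validity", "Analyzing", 4),
    ("Reliability and Validity", "Evaluating", 5),
    ("Test Development and Standardization", "Applying", 4),
    ("Test Development and Standardization", "Creating", 2),
    ("Test Development and Standardization", "Evaluating", 3),
]

LEVELS = ["Remembering", "Understanding", "Applying", "Analyzing", "Evaluating", "Creating"]
# Assumed split into lower- and higher-order thinking.
LOWER_ORDER = {"Remembering", "Understanding", "Applying"}
HIGHER_ORDER = {"Analyzing", "Evaluating", "Creating"}


def summarize(blueprint: list[tuple[str, str, int]]) -> tuple[Counter, Counter]:
    """Sum item counts per Bloom level and per content strand."""
    by_level: Counter = Counter()
    by_strand: Counter = Counter()
    for strand, level, n_items in blueprint:
        by_level[level] += n_items
        by_strand[strand] += n_items
    return by_level, by_strand


if __name__ == "__main__":
    by_level, by_strand = summarize(BLUEPRINT)
    total = sum(by_level.values())
    print(f"Total items: {total}")
    for level in LEVELS:
        print(f"  {level:<14}{by_level[level]:>3}  ({by_level[level] / total:.0%})")
    for strand, n in by_strand.items():
        print(f"  {strand:<38}{n:>3}")
    lower = sum(by_level[lv] for lv in LOWER_ORDER)
    higher = sum(by_level[lv] for lv in HIGHER_ORDER)
    print(f"Lower-order items: {lower}; higher-order items: {higher}")
```

For this blueprint the tally comes to 53 items, 21 of which target analyzing, evaluating, or creating, which gives a quick check on whether higher-order thinking receives the intended share.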

Benefits of this approach include:

- Comprehensive Coverage: Ensures all important content areas and cognitive skills are assessed.

- Balanced Difficulty: Provides a range of item difficulties to discriminate between different levels of student performance.

- Enhanced Validity: Aligns assessment with learning objectives, improving content validity.

- Promotes Higher-Order Thinking: Encourages students to engage in complex cognitive processes.

Conclusion: Why Cognitive Levels Matter in Assessments

Cognitive levels play a crucial role in assessment design. By incorporating questions that target different levels of cognition, exams can provide a more complete picture of a candidate’s abilities.

By aligning exam content with cognitive levels, you ensure that your assessments measure not just rote memorization but the full spectrum of cognitive abilities, from basic understanding to advanced problem-solving and creativity. This creates a more meaningful and comprehensive evaluation process for candidates and employers alike.

References:

- Bloom, B. S. (1956). Taxonomy of Educational Objectives: The Classification of Educational Goals. New York: Longmans, Green.

- Anderson, L. W., & Krathwohl, D. R. (2001). A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives. New York: Longman.

Author: Fernando Austria Corrales, PhD

Published October 30, 2024.