Test Development

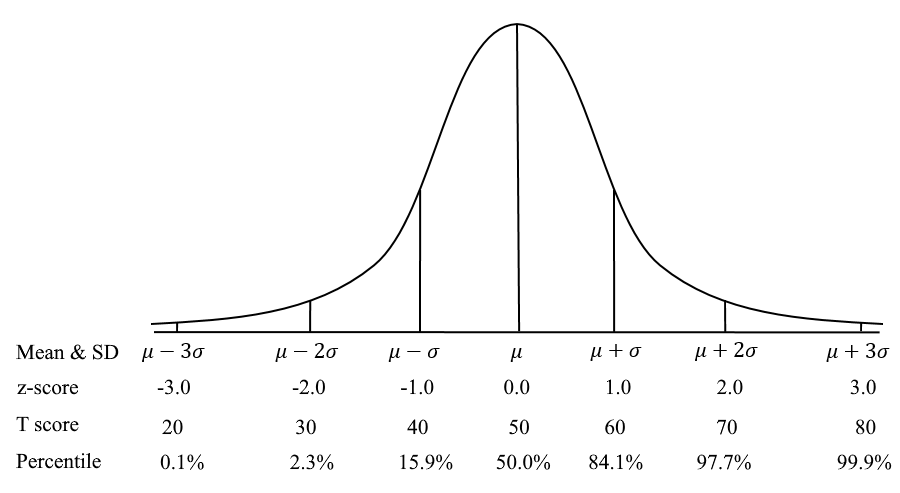

What is a T score in Assessment?

A T Score is a conversion of scores on a test to a standardized scale with a mean of 50 and standard deviation of 10. This is a common example of a scaled score in
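As a rough illustration of that conversion, the sketch below standardizes raw scores to z-scores and rescales them with T = 50 + 10z. The function name `t_scores`, the sample data, and the choice of the population standard deviation of the group itself as the reference are assumptions made for this example; operational programs often use a separate norm group.

```python
from statistics import mean, pstdev

def t_scores(raw_scores):
    """Convert raw scores to T scores (mean 50, SD 10) within this group."""
    m = mean(raw_scores)
    sd = pstdev(raw_scores)  # assumption: population SD of the same group
    if sd == 0:
        raise ValueError("All scores are identical; T scores are undefined.")
    # z = (x - mean) / SD, then T = 50 + 10 * z
    return [50 + 10 * (x - m) / sd for x in raw_scores]

raw = [10, 20, 30, 40, 50]  # hypothetical raw scores
scaled = t_scores(raw)
```

With these hypothetical data, the middle examinee sits exactly at the group mean and therefore receives a T score of 50.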

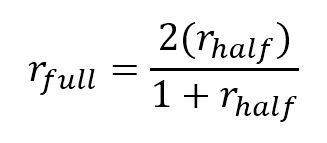

What is the Spearman-Brown formula?

The Spearman-Brown formula, also known as the Spearman-Brown Prophecy Formula or Correction, is a method used in evaluating test reliability. It is based on the idea that split-half reliability has better assumptions than coefficient alpha
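For readers who want to see the arithmetic, here is a minimal sketch of the general Spearman-Brown formula, predicted reliability = k·r / (1 + (k − 1)·r), where r is the observed reliability and k is the factor by which test length changes. The function and parameter names are assumptions for this example; k = 2 corresponds to stepping up a split-half correlation to full test length.

```python
def spearman_brown(reliability, length_factor=2.0):
    """Predict reliability after changing test length by `length_factor`."""
    denominator = 1 + (length_factor - 1) * reliability
    if denominator == 0:
        raise ValueError("Formula is undefined for these inputs.")
    return length_factor * reliability / denominator

# Hypothetical split-half correlation of 0.60 stepped up to full length
full_length = spearman_brown(0.60)
```

A length factor of 1 leaves the reliability unchanged, which is a quick sanity check on any implementation.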

Item Writing: Tips for Authoring Test Questions

Item writing (aka item authoring) is a science as well as an art, and if you have done it, you know just how challenging it can be! You are experts at what you do, and

Validity Threats and Psychometric Forensics

Validity threats are issues with a test or assessment that hinder the interpretation and use of scores, such as cheating, inappropriate use of scores, unfair preparation, or non-standardized delivery. It is important to establish a

Item Review Workflow for Exam Development

Item review is the process of putting newly written test questions through a rigorous peer review to ensure that they are high quality and meet industry standards. What is an item review workflow? Developing

Job Task Analysis Study: Why essential for Certification?

Job Task Analysis (JTA) is a formal process for defining what is being done in a profession, especially what is most important or frequent, and then using this information to make data-driven decisions. It is

Question and Test Interoperability (QTI)

Question and Test Interoperability® (QTI®) is a set of standards around the format of import/export files for test questions in educational assessment and HR/credentialing exams. This facilitates the movement of questions from one software platform

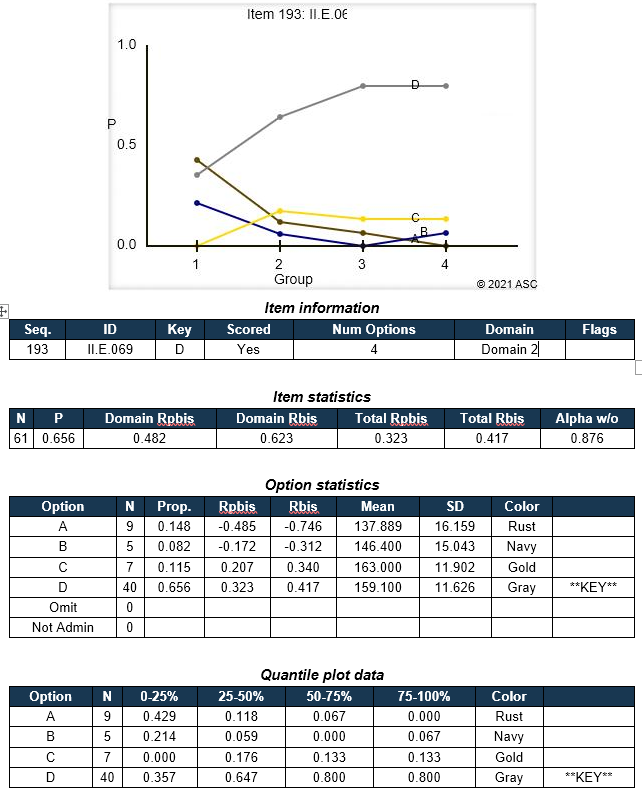

Classical Test Theory: Item Statistics

Classical Test Theory (CTT) is a psychometric approach to analyzing, improving, scoring, and validating assessments. It is based on relatively simple concepts, such as averages, proportions, and correlations. One of the most frequently used aspects

Classical Test Theory vs. Item Response Theory

Classical Test Theory and Item Response Theory (CTT & IRT) are the two primary psychometric paradigms. That is, they are mathematical approaches to how tests are analyzed and scored. They differ quite substantially in substance

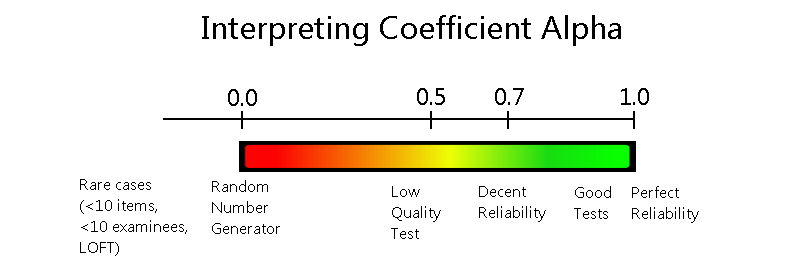

Coefficient Alpha Reliability Index

Coefficient alpha reliability, sometimes called Cronbach’s alpha, is a statistical index that is used to evaluate the internal consistency or reliability of an assessment. That is, it quantifies how consistent we can expect scores to
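A minimal sketch of the standard computation, alpha = k/(k − 1) · (1 − Σ item variances / total-score variance), is shown below. The function name, the row-per-examinee data layout, and the tiny response matrix are assumptions for illustration only. Population variance is used throughout; sample variance gives the same result as long as it is used consistently, because the correction factor cancels in the ratio.

```python
from statistics import pvariance

def coefficient_alpha(responses):
    """Coefficient alpha for a list of examinee rows, one score per item."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("At least two items are required.")
    # Variance of each item (column) across examinees
    item_vars = [pvariance([row[i] for row in responses]) for i in range(k)]
    # Variance of the total (sum) score across examinees
    total_var = pvariance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("Total scores have zero variance.")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# Hypothetical data: 4 examinees, 3 dichotomous items that agree perfectly
data = [[1, 1, 1], [0, 0, 0], [1, 1, 1], [0, 0, 0]]
alpha = coefficient_alpha(data)
```

Because the three hypothetical items agree perfectly, alpha comes out at its maximum of 1; real data will fall below that.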

“Dichotomous” Vs “Polytomous” in IRT?

What is the difference between the terms dichotomous and polytomous in psychometrics? Well, these terms represent two subcategories within item response theory (IRT), which is the dominant psychometric paradigm for constructing, scoring, and analyzing assessments.

Meta-analysis in Assessment

Meta-analysis is a research process of collating data from multiple independent but similar scientific studies in order to identify common trends and findings by means of statistical methods. To put it simply, it is a

Test validation: How to determine if a test score is supported?

Test validation is the process of verifying, based on solid evidence, whether the specific requirements of the test development stages are fulfilled. In particular, test validation is an ongoing process of developing an argument

Automated Item Generation

Automated item generation (AIG) is a paradigm for developing assessment items (test questions), utilizing principles of artificial intelligence and automation. As the name suggests, it tries to automate some or all of the effort involved

Borderline group method standard setting

The borderline group method of standard setting is one of the most common approaches to establishing a cutscore for an exam. In comparison with the item-centered standard setting methods such as modified-Angoff, Nedelsky, and Ebel,

Ebel Method of Standard Setting

The Ebel method of standard setting is a psychometric approach to establish a cutscore for tests consisting of multiple-choice questions. It is usually used for high-stakes examinations in the fields of higher education, medical and health

Item Parameter Drift

Item parameter drift (IPD) refers to the phenomenon in which the parameter values of a given test item change over multiple testing occasions within the item response theory (IRT) framework. This phenomenon is often relevant

Distractor Analysis for Test Items

Distractor analysis refers to the process of evaluating the performance of incorrect answers vs the correct answer for multiple choice items on a test. It is a key step in the psychometric analysis process to