One of the most clichéd phrases associated with assessment is “teaching to the test.” I’ve always hated this phrase, because it is only used in a derogatory manner, almost always by people who do not understand the basics of assessment and psychometrics. I recently saw it mentioned in this article on PISA, and that was one time too many, especially since it was used in an oblique, vague, and unreferenced manner.

So, I’m going to come out and say something very unpopular: in most cases, TEACHING TO THE TEST IS A GOOD THING.

Why teaching to the test is usually a good thing

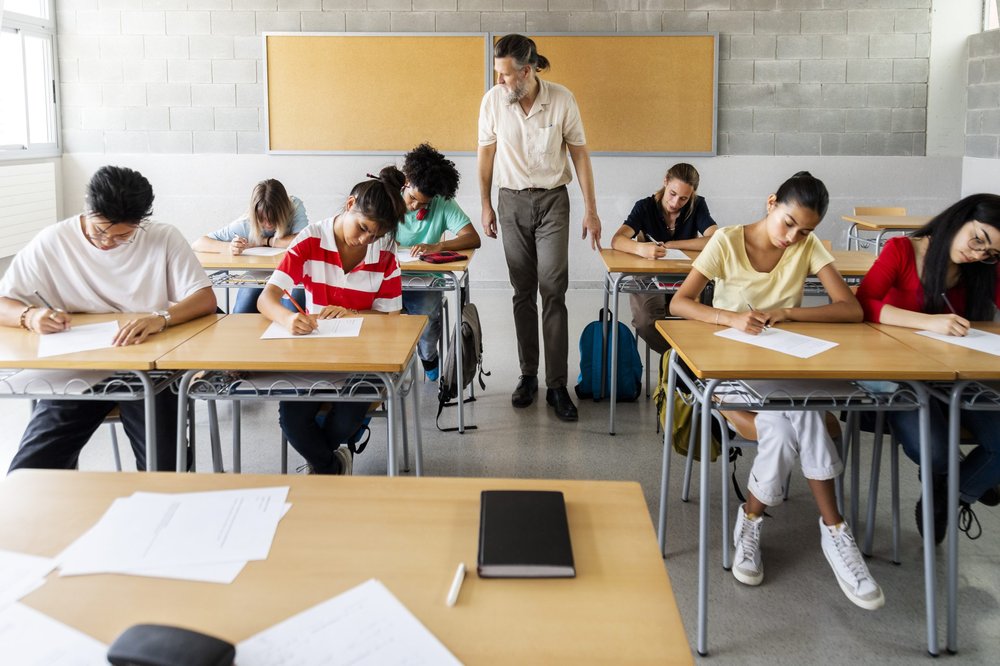

If the test reflects the curriculum – which any good test will – then someone who is teaching to the test will be teaching to the curriculum. Which, of course, is the entire goal of teaching. The phrase “teaching to the test” is used in an insulting sense, especially because the alliteration is resounding and sellable, but it’s really not a bad thing in most cases. If a curriculum says that 4th graders should learn how to add and divide fractions, and the test evaluates this, what is the problem? Especially if it uses modern methodology like adaptive testing or tech-enhanced items to make the process more engaging and instructional, rather than oversimplifying to a text-only multiple choice question on paper bubble sheets?

In the world of credentialing assessment, this is an extremely important link. Credentialing tests start with a job analysis study, which surveys professionals to determine what they consider to be the most important and frequently used skills in the job. This data is then transformed into test blueprints. Instructors for the profession, as well as aspiring students who are studying to pass the test, then focus on what is in the blueprints. And the blueprints, of course, still contain the skills that are most important and frequently used in the job!
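To make the link from job analysis to blueprint concrete, here is a minimal sketch. It assumes one simple weighting scheme, mean importance multiplied by mean frequency, which is only one of several approaches a real study might use. The domain names, the ratings, and the `blueprint_counts` function are all hypothetical and invented for illustration.

```python
# Hypothetical job-analysis results: mean survey ratings on a 1-5 scale.
# Domain names and numbers are invented for illustration only.
survey = {
    "Safety procedures": {"importance": 4.5, "frequency": 4.0},
    "Documentation": {"importance": 3.0, "frequency": 4.0},
    "Equipment maintenance": {"importance": 4.0, "frequency": 2.5},
}


def blueprint_counts(survey, total_items):
    """Allocate test items to domains in proportion to importance x frequency.

    Assumption: the product of the two ratings is used as the domain weight.
    """
    weights = {d: r["importance"] * r["frequency"] for d, r in survey.items()}
    total_weight = sum(weights.values())

    # Ideal (fractional) number of items per domain.
    raw = {d: total_items * w / total_weight for d, w in weights.items()}

    # Round down, then hand leftover items to the largest remainders
    # so the counts always sum to exactly total_items.
    counts = {d: int(v) for d, v in raw.items()}
    leftover = total_items - sum(counts.values())
    by_remainder = sorted(raw, key=lambda d: raw[d] - counts[d], reverse=True)
    for d in by_remainder[:leftover]:
        counts[d] += 1
    return counts


if __name__ == "__main__":
    for domain, n in blueprint_counts(survey, 100).items():
        print(f"{domain}: {n} items")
```

The point of the sketch is simply that the domains rated as most important and most frequently used end up with the most items, so anyone teaching to the blueprint is teaching what the profession itself said matters.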

So what is the problem then?

Now, telling teachers how to teach is more concerning, and more likely to be a bad thing. Finland does well because it gives teachers lots of training and then power to choose how they teach, as noted in the PISA article.

As a counterexample, my high school math department made an edict starting my sophomore year that all teachers had to use the “Chicago Method.” It was pure bunk and based on the idea that students should be doing as much busy work as possible instead of the teachers actually teaching. I think it happened because some salesman convinced the department head to make the switch so that they would buy a thousand brand new textbooks. The method makes some decent points (here’s an article from, coincidentally, when I was a sophomore in high school) but I think we ended up with a bastardization of it, as the edict was primarily:

- Assign students to read the next chapter in class (instead of teaching them!); go sit at your desk.

- Assign students to do at least 30 homework questions overnight, and come back tomorrow with any questions they have.

- Answer any questions, then assign them the next chapter to read. Whatever you do, DO NOT teach them about the topic before they start doing the homework questions. Go sit at your desk.

Isn’t that preposterous? Unsurprisingly, after two years of this, I went from being a leader of the Math Team to someone who explicitly said “I am never taking Math again”. And indeed, I managed to avoid all math during my senior year of high school and first year of college. Thankfully, I had incredible professors in my years at Luther College, leading to me loving math again, earning a math major, and applying to grad school in psychometrics. This shows the effect that might happen with “telling teachers how to teach.” Or in this case, specifically – and bizarrely – to NOT teach.

What about all the bad tests out there?

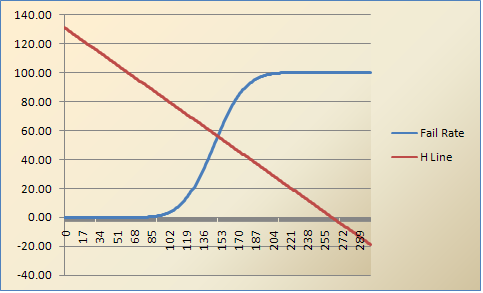

Now, let’s get back to the assumption that a test does reflect a curriculum/blueprints. There are, most certainly, plenty of cases where an assessment is not designed or built well. That’s an entirely different problem, and is an entirely valid concern. I have seen a number of these in my career. This danger is why we have international standards on assessments, like AERA/APA/NCME and NCCA. These provide guidelines on how a test should be built, sort of like how you need to build a house according to building code rather than just throwing up some walls and a roof.

For example, there is nothing that is stopping me from identifying a career that has a lot of people looking to gain an edge over one another to get a better job… then buying a textbook, writing 50 questions in my basement, and throwing it up on a nice-looking website to sell as a professional certification. I might sell it for $395, and if I get just 100 people to sign up, I’ve made $39,500!!!! This violates just about every NCCA guideline, though. If I wanted to get a stamp of approval that my certification was legit – as well as making it legally defensible – I would need to follow the NCCA guidelines.

My point here is that there are definitely bad tests out there, just like there are millions of other bad products in the world. It’s a matter of caveat emptor. But just because you had some cheap furniture in college that broke right away doesn’t mean you swear off all furniture. You stay away from bad furniture.

There’s also the problem of tests being misused, but again, that’s not a problem with the test itself; it means that someone making decisions is uninformed. It could be the best test in the world, with 100% precision, but if it is used for an invalid application then it’s still not a good situation. For example, suppose you took a very well-made exam for high school graduation and started using it for employment decisions with adults. Psychometricians call the relevant concept validity – that we have evidence to support the intended use of the test and interpretations of scores. It is the #1 concern of assessment professionals, so if a test is being misused, it’s probably by someone without a background in assessment.

So where do we go from here?

Put it this way: if an overweight person is trying to become fitter, is success more likely to come from changing diet and exercise habits, or from complaining about their bathroom scale? Complaining vaguely about a high school graduation assessment is not going to improve education; let’s change how we educate our children to prepare them for that assessment, and ensure that the assessment reflects the goals of the education. At the same time, of course, we need to invest in making the assessment as sound and fair as we can – which is exactly why I am in this career.