Digital assessment (DA), also known as e-Assessment or electronic assessment, is the delivery of assessments, tests, surveys, and other measures via digital devices such as computers, tablets, and mobile phones. The primary goal is to develop items, publish tests, deliver tests, and provide meaningful results – as quickly, easily, and validly as possible. The use of computers enables many modern benefits, from adaptive testing (e.g. the adaptive SAT) to tech-enhanced items. To deliver digital assessment, an organization typically implements a cloud-based digital assessment platform.

Such platforms do much more than delivery, though. Typical modules include:

- Item banking – authoring, review, metadata, assets

- Online test delivery – including sophisticated security options

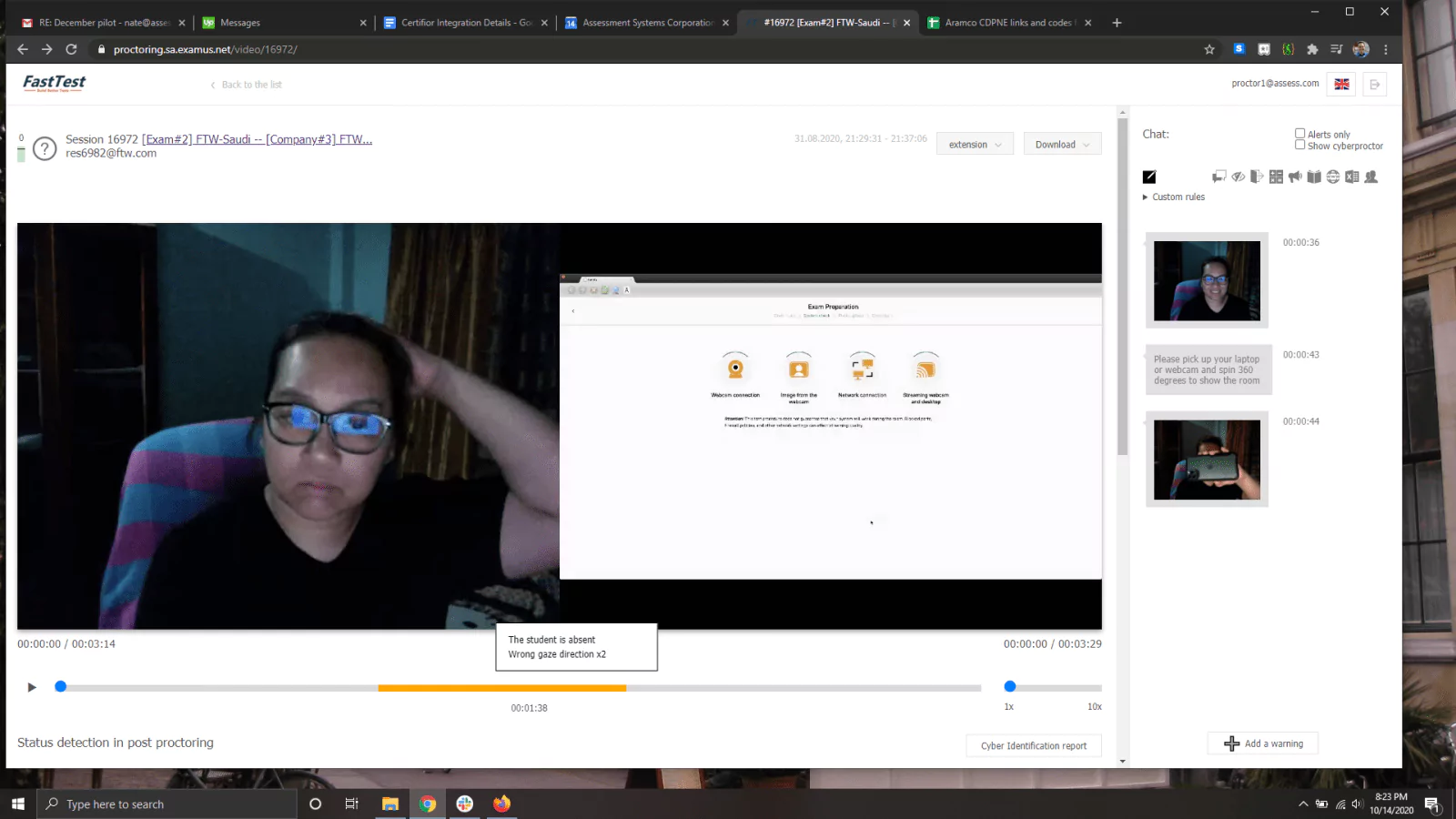

- Remote proctoring – live, AI, recorded, or BYOP

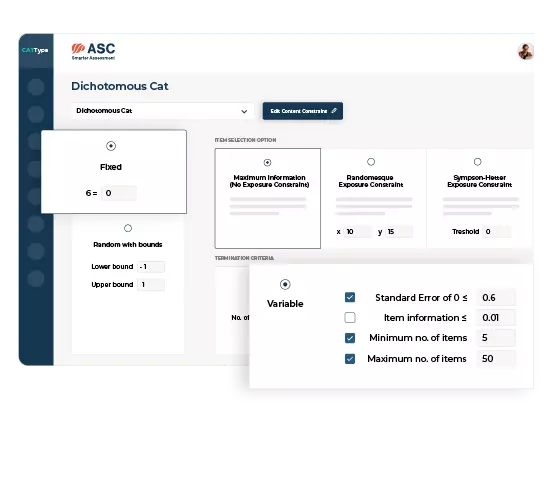

- Adaptive testing – modern psychometrics like IRT

- Essay scoring – manage the marking of thousands of students

- Reporting – Easily access raw data and aggregate reports for insight

Want to see a professional-grade digital assessment platform, or request a free account? Here is more info on our Assess.ai.

Why Digital Assessment / e-Assessment?

Globalization and digital technology are rapidly changing the world of education, human resources, and professional development. Teaching and learning are becoming more learner-centric, and technology provides an opportunity for assessment to be integrated into the learning process with corresponding adjustments. Furthermore, digital technology grants opportunities for teaching and learning to move their focus from content to critical thinking. Teachers are already implementing new strategies in classrooms, and assessment needs to reflect these changes, as well.

Advantages of Digital Assessment

Better Measurement

Digital assessment uses technology to make exams better. This can happen in various ways. One of the most common is tech-enhanced items, which are more interactive and assess a deeper level of knowledge. Digital assessment can also make use of assets such as video or audio. And of course, smarter exams like computerized adaptive testing are only possible on digital devices.

Accessibility

One of the main pros of DA is its ease of use for staff and learners: examiners can easily set up questionnaires, determine grading methods, and send invitations to examinees. In turn, examinees do not always have to be in a classroom setting to take assessments and can do so remotely in a more comfortable environment. In addition, DA gives learners the option of taking practice tests whenever it suits them. It also enables very fast republishing and improvement of tests.

Transparency

DA allows educators to quickly compare the performance of an individual learner against that of a group for analytical and pedagogical reasons. The report-generating capabilities of DA enable educators to identify problem areas in learning at both the individual and group level soon after assessments occur, so they can adapt to learners’ needs, strengths, and weaknesses. As for learners, DA provides them with instant feedback, unlike traditional paper exams.

Profitability

Conducting exams online, especially at scale, is very practical: there is no need to print innumerable question papers, involve all school staff in organizing procedures, assign invigilators, invite hundreds of students to spacious classrooms to take tests, or provide them with answer sheets and supplementary materials. Thus, flexibility of time and venue, together with lower staffing, logistical, and administrative costs, gives electronic assessment a considerable advantage over traditional exam settings.

Eco-friendliness

In this digital era, minimizing the detrimental environmental effects of pen-and-paper exams should be a priority. Cutting down trees for paper can no longer be the norm, given its adverse environmental impact. DA allows organizations and institutions to go paper-free and avoid printing exam papers and other materials. Furthermore, DA takes up less storage space than paper records, since all data can be stored on a single server.

Security

Enhanced privacy for students is another advantage of digital assessment. There is only a small probability of malicious activities, such as cheating and other unlawful practices, that could rig the system and lead to incorrect results. A secure assessment system supported by AI-based proctoring features helps students accept test results without contesting them, which in turn fosters a more positive attitude toward institutions and builds stronger mutual trust between educators and learners. There are many more options as well: lockdown browser, preventing re-entry or re-takes, scheduling windows, proctor passwords, etc.

Autograding

The benefits of DA include automated grading, which is more convenient and time-efficient than standard marking procedures and minimizes human error. Automated scoring compares examinees’ responses against model answers and makes the relevant judgements. The spread of technology in education and the increasing number of learners demand a sophisticated scoring mechanism that eases teachers’ burden, saves time, and ensures fairness of assessment results. For example, digital assessment platforms can include complex modules for essay scoring, or easily implement item response theory and computerized adaptive testing.
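As a rough illustration of comparing responses against model answers, the sketch below scores multiple-choice responses against an answer key. The function name, item IDs, and the rule that an omitted response counts as incorrect are assumptions made for this example, not a description of any particular platform.

```python
from typing import Dict


def score_responses(answer_key: Dict[str, str], responses: Dict[str, str]) -> Dict[str, int]:
    """Return 1 (correct) or 0 (incorrect) per item by comparing to the keyed answer."""
    scores = {}
    for item_id, correct in answer_key.items():
        given = responses.get(item_id)  # assumption: a missing response scores 0
        is_correct = given is not None and given.strip().upper() == correct.upper()
        scores[item_id] = int(is_correct)
    return scores


# Hypothetical data: three items, one examinee who skipped Q3.
answer_key = {"Q1": "B", "Q2": "D", "Q3": "A"}
responses = {"Q1": "b", "Q2": "C"}

item_scores = score_responses(answer_key, responses)
total = sum(item_scores.values())
print(item_scores, total)
```

Real platforms layer much more on top of this, such as partial credit, weighting, and rubric-based essay marking, but the core comparison step looks like the loop above.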

Time-efficiency

Those involved in designing, managing, and evaluating assessments are aware of how tedious these tasks are. Probably the most routine assessment procedure is manual invigilation, which can be avoided by employing proctoring services. Smart exam software, such as FastTest, offers automated item generation, item banking, and test assembly and publishing, saving precious time that would otherwise be spent on repetitive tasks. Examiners need only upload the examinees’ emails or IDs to invite them to an assessment. The best part is the instant exporting of results and delivery of reports to stakeholders.

Public relations and visibility

There is considerably less use of pen and paper in the digital age. Technology has altered human preferences, so these days a large majority of educators rely on computers for communication, presentations, digital design, and various other tasks. Educators have an opportunity to mix question styles on exams, including graphics, to make them more interactive than paper ones. Many educational institutions use learning management systems (LMS) to publish study materials on cloud-based platforms and to enable educators to evaluate and grade with ease. In turn, students benefit from such systems, as they can submit their assignments remotely.

Challenges of Implementing Digital Assessment

Difficulty in grading long-answer questions

DA copes brilliantly with multiple-choice questions; however, grading long-answer questions remains a challenge. This is where e-Assessment intersects with traditional assessment, as subjective answers call for manual grading. Fortunately, technology in the education sector continues to evolve, and even essays can already be marked digitally with the help of AI features on platforms like Assess.ai.

Susceptibility to cheating

Examinees can use AI plugins designed to answer multiple-choice questions in common platforms like Moodle. They can also use LLMs to write their essays and then simply paste them into the answer box. Neither of these is possible with paper exams.

Need to adapt

Implementing something new always brings disruption and demands time to familiarize all stakeholders with it. The transition from traditional assessment to DA will require certain investments to upgrade the system, such as funding and professional development of staff. Some staff and students might even resist this trend and feel isolated without face-to-face interactions. However, this stage is unavoidable, and working through it is a step forward for both educators and learners.

Infrastructural barriers & vulnerability

One of the major cons of DA is that technology is not always reliable, and some locations cannot provide all examinees with stable access to electricity, an internet connection, and other basic system requirements. This is a huge problem in developing nations, and it remains a problem in many areas of well-developed nations. In addition, integrating DA technology can be very costly if the assessment design, both conceptual and aesthetic, is poorly planned. Such barriers hamper DA, which is why authorities should address them before implementing DA.

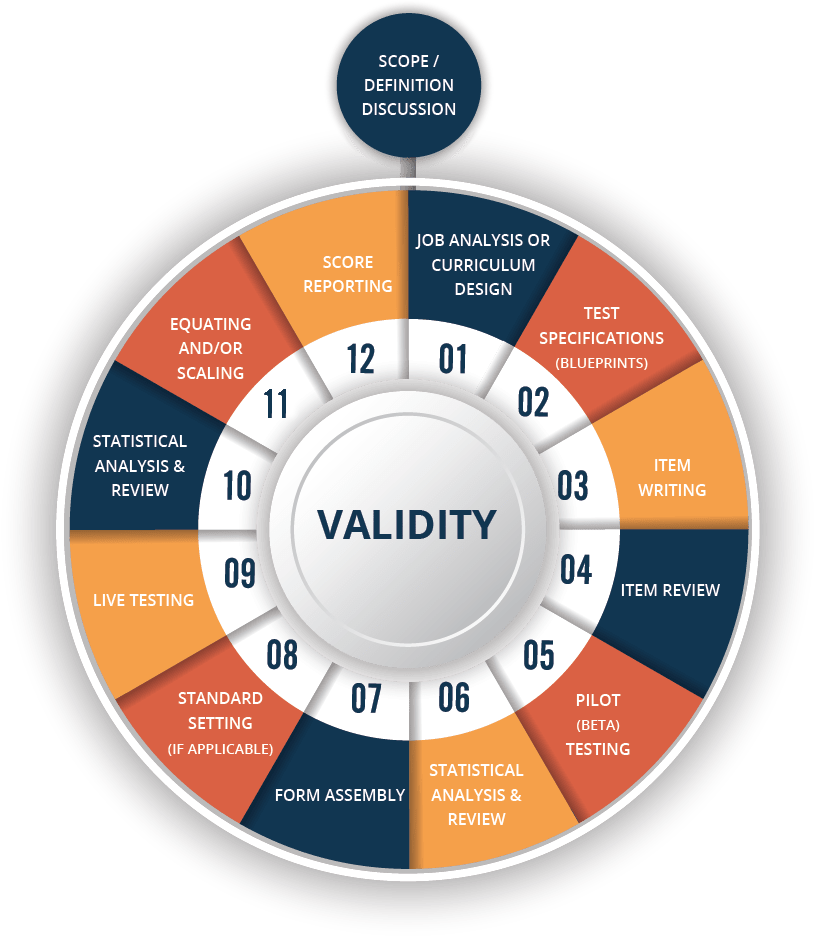

Selecting a Digital Assessment Platform

Digital assessment is a critical component in education and workforce assessment, managing and delivering exams via the internet. It requires a cloud-based platform that is designed specifically to build, deliver, manage, and validate exams that are either large-scale or high-stakes. It is a critical core-business tool for high-stakes professional and educational assessment programs, such as certification, licensure, or university admissions. There are many software products on the market that provide at least some functionality for online testing.

The biggest problem when you start shopping is that there is an incredible range in quality, though there are also other differentiators, such as some being made only to deliver pre-packaged employment skill tests rather than being for general usage. This article provides some tips on how to implement e-assessment more effectively.

Type of e-Assessment tools

So how do you know what level of quality you need in an e-Assessment solution? It mostly depends on the stakes of your test, which governs the need for quality in the test itself, which then drives the need for a quality platform to build and deliver the test. This post helps you identify the types of functionality that set apart “real” online exam platforms, and you can evaluate which components are most critical for you once you go shopping.

This table depicts one way to think about what sort of solution you need.

| | Non-professional level | Professional level |
| --- | --- | --- |
| Not dedicated to assessment | Systems that can do minimal assessment and are inexpensive, such as survey software (LimeSurvey, QuestionPro, etc.) | Related systems like LMS platforms that are high quality (Blackboard, Canvas); these have some assessment functionality but lack professional functionality like IRT, adaptive testing, and true item banking |
| Dedicated to assessment | Systems designed for assessment but without professional functionality; anybody can make a simple platform for MCQ exams etc. | Powerful systems designed for high-stakes exams, with professional functionality like IRT/CAT |

What is a professional assessment platform, anyway?

A true e-Assessment system is much more than an exam module in a learning management system (LMS) or an inexpensive quiz/survey maker. A real online exam platform is designed for professionals, that is, people whose entire job is to make assessments. A good comparison is a customer relationship management (CRM) system. That is a platform designed for use by people whose job is to manage customers, whether that means serving existing customers or managing the sales process. While it is entirely possible to use a spreadsheet to manage such things at a small scale, organizations operating at any sort of scale will use a true CRM like Salesforce or Zoho. You wouldn’t hire a team of professional sales experts and then have them waste hours each day in a spreadsheet; you would give them Salesforce to make them much more effective.

The same is true for online testing and assessment. If you are a teacher making math quizzes, then Microsoft Word might be sufficient. But there are many organizations that are doing a professional level of assessment, with dedicated staff. Some examples, by no means an exhaustive list:

- Professional credentialing: Certification and licensure exams that a person passes to work in a profession, such as chiropractors

- Pre-Employment: Evaluating job applicants to make sure they have relevant skills, ability, and experience

- Universities: Not for classroom assessments, but rather for topics like placement exams of all incoming students, or for nationwide admissions exams

- K-12 benchmark: If you are a government that tests all 8th graders at the end of the year, or a company that delivers millions of formative assessments

Goal 1: Item banking that makes your team more efficient

True item banking: The platform should treat items as reusable objects that exist with persistent IDs and metadata. Learn more about item banking.

Configurability: The platform should allow you to configure how items are scored and presented, such as font size, answer layout, and weighting. There is also often the option to connect to a certification management system.

Multimedia management: Audio, video, and images should be stored in their own banks, with their own metadata fields, as reusable objects. If an image is in 7 questions, you should not have to upload 7 times… you upload once and the system tracks which items use it.

Statistics and other metadata: All items should have many fields that are essential metadata: author name, date created, tests which use the item, content area, Bloom’s taxonomy, classical statistics, IRT parameters, and much more.

Custom fields: You should be able to create any new metadata fields that you like.
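To make the ideas of persistent IDs, reusable media, and custom metadata fields more concrete, here is a minimal data-model sketch. The class names, field names, and the `items_using_asset` helper are all hypothetical and chosen only for illustration; a production item bank would store these in a database and track far more metadata.

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Asset:
    """A media file stored once in the bank and referenced by ID."""
    filename: str
    asset_id: str = field(default_factory=lambda: uuid.uuid4().hex)


@dataclass
class Item:
    """A reusable item with a persistent ID and metadata fields."""
    stem: str
    content_area: str
    author: str
    asset_ids: List[str] = field(default_factory=list)
    custom_fields: Dict[str, str] = field(default_factory=dict)
    item_id: str = field(default_factory=lambda: uuid.uuid4().hex)


def items_using_asset(items: List[Item], asset_id: str) -> List[str]:
    """Return the IDs of all items that reference the given asset."""
    return [it.item_id for it in items if asset_id in it.asset_ids]


# One image uploaded once, then reused by two items.
diagram = Asset("triangle.png")
items = [
    Item("Find the area of the triangle.", "Geometry", "author_a", [diagram.asset_id]),
    Item("Find the perimeter of the triangle.", "Geometry", "author_b",
         [diagram.asset_id], custom_fields={"curriculum_code": "G-7.2"}),
    Item("Solve 2x + 3 = 11.", "Algebra", "author_a"),
]
users = items_using_asset(items, diagram.asset_id)
print(len(users))
```

Because items reference the asset by ID rather than embedding a copy, replacing the image file updates every item that uses it.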

Item review workflow: Professionally built items will go through a review process, like Psychometric Review, English Editing, and Content Review. The platform should manage this, allowing you to assign items to people with due dates and email notifications.

Standard Setting: The exam platform should include functionality to help you do standard setting like the modified-Angoff approach.
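The arithmetic at the heart of an Angoff-style study is simple: each judge estimates, for every item, the probability that a minimally competent candidate answers it correctly, and the recommended cut score is the sum of the per-item averages. The sketch below shows only that aggregation step, with invented ratings; the "modified" procedure typically adds rounds of discussion and re-rating, which are not modeled here.

```python
from statistics import mean

# Hypothetical ratings: judge -> estimated probability of a correct answer per item.
ratings = {
    "judge_1": [0.60, 0.80, 0.50, 0.70],
    "judge_2": [0.70, 0.90, 0.40, 0.60],
    "judge_3": [0.50, 0.70, 0.60, 0.80],
}


def angoff_cut_score(ratings_by_judge):
    """Sum of per-item mean ratings, i.e. the expected raw score of a borderline candidate."""
    per_item = zip(*ratings_by_judge.values())  # regroup ratings by item
    item_means = [mean(item_ratings) for item_ratings in per_item]
    return sum(item_means)


cut = angoff_cut_score(ratings)
n_items = len(next(iter(ratings.values())))
print(f"Cut score: {cut:.2f} of {n_items} points ({100 * cut / n_items:.0f}%)")
```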

Automated item generation: There should be functionality for automated item generation.

Powerful test assembly: When you publish a test, there should be many options, including sections, navigation limits, paper vs online, scoring algorithms, instructional screens, score reports, etc. You should also have aids in psychometric aspects, such as a Test Information Function.

Equation Editor: Many math exams need a professional equation editor to write the items, embedded in the item authoring.

Goal 2: Professional-grade exam delivery

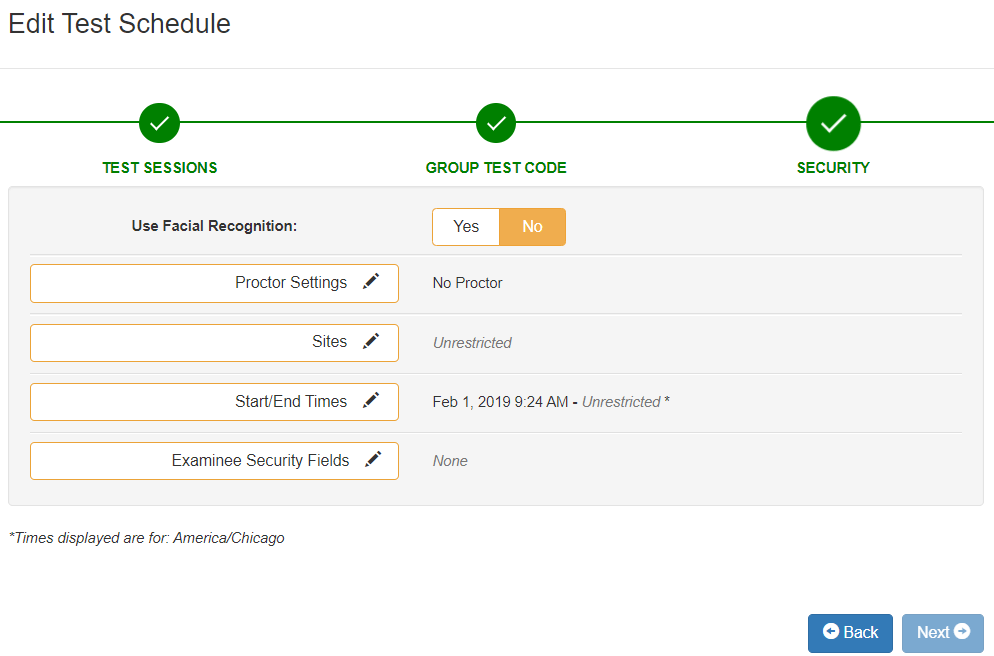

Scheduling options: Date ranges for availability, retake rules, alternate forms, passwords, etc. These are essential for maintaining the integrity of high stakes tests.

Item response theory: Item response theory (IRT) is the modern psychometric paradigm used by organizations dedicated to stronger assessment. It is far superior to the oversimplified classical approach based on proportions and correlations.
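For readers new to IRT, the sketch below shows the widely used three-parameter logistic (3PL) model, in which the probability of a correct response depends on the examinee's ability (theta) and the item's discrimination (a), difficulty (b), and pseudo-guessing (c) parameters. It also computes item information and sums it into a Test Information Function of the kind mentioned under test assembly. The function names and the item parameters are illustrative assumptions, and the logistic metric is used without the 1.7 scaling constant.

```python
import math


def prob_correct(theta, a, b, c=0.0):
    """3PL probability of a correct response (reduces to 2PL when c = 0)."""
    return c + (1.0 - c) / (1.0 + math.exp(-a * (theta - b)))


def item_information(theta, a, b, c=0.0):
    """Fisher information of one item at ability theta under the 3PL model."""
    p = prob_correct(theta, a, b, c)
    return a ** 2 * ((p - c) / (1.0 - c)) ** 2 * (1.0 - p) / p


def total_information(theta, items):
    """Test Information Function: sum of item information at theta."""
    return sum(item_information(theta, a, b, c) for a, b, c in items)


# Hypothetical item parameters as (a, b, c) tuples.
form = [(1.0, -1.0, 0.2), (1.4, 0.0, 0.2), (0.8, 1.0, 0.25)]
for theta in (-2.0, 0.0, 2.0):
    print(theta, round(total_information(theta, form), 3))
```

A test assembler can compare these information values across candidate forms to see where on the ability scale each form measures most precisely.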

Linear on the fly testing (LOFT): Suppose you have a pool of 200 questions, and you want every student to get 50 randomly picked, but balanced so that there are 10 items from each of 5 content areas. This is known as linear-on-the-fly testing, and can greatly enhance the security and validity of the test.
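The example in the paragraph above (a pool of 200 items, 50 per examinee, 10 from each of 5 content areas) amounts to stratified random sampling. Here is a minimal sketch; the function name, the item ID scheme, and the even split of 40 items per area are assumptions for illustration.

```python
import random
from collections import defaultdict


def assemble_loft_form(pool, per_area, rng):
    """Draw `per_area` random items from each content area, then shuffle the form.

    `pool` is a list of (item_id, content_area) pairs. rng.sample raises
    ValueError if an area holds fewer than `per_area` items.
    """
    by_area = defaultdict(list)
    for item_id, area in pool:
        by_area[area].append(item_id)

    form = []
    for area in sorted(by_area):
        form.extend(rng.sample(by_area[area], per_area))
    rng.shuffle(form)  # mix content areas so they do not appear in blocks
    return form


# Hypothetical pool: 5 content areas with 40 items each (200 items in total).
areas = ["A", "B", "C", "D", "E"]
pool = [(f"{area}{i:02d}", area) for area in areas for i in range(40)]

form = assemble_loft_form(pool, per_area=10, rng=random.Random(42))
print(len(form), form[:5])
```

Each examinee would get a fresh random generator (or seed), so two examinees sitting side by side are unlikely to see the same set of items in the same order.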

Computerized adaptive testing: This uses AI and machine learning to customize the test uniquely to every examinee. Adaptive testing is much more secure, more accurate, more engaging, and can reduce test length by 50-90%.
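One common textbook formulation of adaptive testing, sketched below, repeatedly picks the unused item with the most information at the current ability estimate, scores the response, and re-estimates ability. This sketch assumes a 2PL IRT model, expected a posteriori (EAP) estimation on a fixed grid with a standard normal prior, and a hypothetical `answer_fn` callback that returns 1 or 0 for the administered item. It is not a description of how any specific platform implements CAT.

```python
import math

GRID = [g / 10.0 for g in range(-40, 41)]  # ability grid from -4.0 to 4.0


def p_correct(theta, a, b):
    """2PL probability of a correct response."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def information(theta, a, b):
    """2PL item information at theta."""
    p = p_correct(theta, a, b)
    return a * a * p * (1.0 - p)


def eap_estimate(administered):
    """Posterior mean and SD of theta given [((a, b), score), ...]."""
    weights = []
    for theta in GRID:
        w = math.exp(-0.5 * theta * theta)  # unnormalized standard normal prior
        for (a, b), u in administered:
            p = p_correct(theta, a, b)
            w *= p if u == 1 else (1.0 - p)
        weights.append(w)
    total = sum(weights)
    est = sum(t * w for t, w in zip(GRID, weights)) / total
    var = sum((t - est) ** 2 * w for t, w in zip(GRID, weights)) / total
    return est, math.sqrt(var)


def run_cat(bank, answer_fn, max_items=10, se_target=0.3):
    """Administer items adaptively until max_items or the SE target is reached."""
    theta, se = 0.0, float("inf")
    remaining = set(range(len(bank)))
    administered, order = [], []
    while remaining and len(order) < max_items and se > se_target:
        # Pick the unused item that is most informative at the current estimate.
        nxt = max(sorted(remaining), key=lambda i: information(theta, *bank[i]))
        remaining.remove(nxt)
        administered.append((bank[nxt], answer_fn(nxt)))
        order.append(nxt)
        theta, se = eap_estimate(administered)
    return theta, se, order


# Hypothetical bank: 17 items with a = 1.2 and difficulties from -2.0 to 2.0.
bank = [(1.2, -2.0 + 0.25 * k) for k in range(17)]

theta_hi, se_hi, order_hi = run_cat(bank, answer_fn=lambda i: 1)  # all correct
theta_lo, se_lo, order_lo = run_cat(bank, answer_fn=lambda i: 0)  # all incorrect
print(round(theta_hi, 2), round(theta_lo, 2), order_hi)
```

Operational CAT engines add content balancing, item exposure control, and more careful stopping rules on top of this loop.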

Tech-enhanced item types: Drag and drop, audio/video, hotspot, fill-in-the-blank, etc.

Scalability: Because most “real” exams will be delivered to thousands, tens of thousands, or even hundreds of thousands of examinees, the online exam platform needs to be able to scale up.

Online essay marking: The e-Assessment platform should have a module to score open-response items. Preferably with advanced options, like having multiple markers or anonymity.

Goal 3: Maintaining test integrity and security during e-Assessment

Delivery security options: There should be choices for how to create/disseminate passcodes, set time/date windows, disallow movement back to previous sections, etc.

Lockdown browser: An option to deliver with software that locks the computer while the examinee is in the test.

Remote proctoring: There should be an option for remote (online) proctoring. This can be AI, record and review, or live.

Live proctoring: There should be functionality that facilitates live human proctoring, such as in computer labs at a university. The system might have Proctor Codes or a site management module.

User roles and content access: There should be various roles for users, as well as options to limit them by content. For example, limiting a Math teacher doing reviews to do nothing but review Math items.

Rescoring: If items are compromised or challenged, you need functionality to easily remove them from scoring for an exam and rescore all candidates.
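Rescoring can be pictured as re-running the scoring step with the challenged items left out. The sketch below assumes number-correct scoring and uses invented candidate IDs, item IDs, and a hypothetical `rescore` function; a real program would also need to revisit the cut score and any scaled scores after items are dropped.

```python
def rescore(response_matrix, answer_key, excluded_items):
    """Return ({candidate: raw score}, max score) with excluded items left unscored."""
    scored_items = [item for item in answer_key if item not in excluded_items]
    results = {}
    for candidate, responses in response_matrix.items():
        results[candidate] = sum(
            1 for item in scored_items if responses.get(item) == answer_key[item]
        )
    return results, len(scored_items)


# Hypothetical exam data.
answer_key = {"Q1": "A", "Q2": "C", "Q3": "B", "Q4": "D"}
response_matrix = {
    "cand_1": {"Q1": "A", "Q2": "C", "Q3": "B", "Q4": "A"},
    "cand_2": {"Q1": "A", "Q2": "B", "Q3": "B", "Q4": "D"},
}

original, original_max = rescore(response_matrix, answer_key, excluded_items=set())
revised, revised_max = rescore(response_matrix, answer_key, excluded_items={"Q3"})
print(original, original_max)
print(revised, revised_max)
```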

Live dashboard: You should be able to see who is in the online exam, stop them if needed, and restart or re-register if needed.

Goal 4: Powerful reporting and exporting

Support for QTI: You should be able to import and export items with QTI, as well as common formats like Word or Excel.

Psychometric analytics & data visualization: You should be able to see reports on reliability, standard error of measurement, point-biserial item discriminations, and all the other statistics that a psychometrician needs. Sophisticated users will need things like item response theory.
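The classical statistics named above can be computed directly from a scored response matrix. The sketch below uses a small invented matrix (rows are examinees, columns are dichotomously scored items) and computes coefficient alpha as the reliability estimate, the standard error of measurement, and uncorrected point-biserial correlations between each item and the total score. Function names are illustrative, and population variances are used throughout.

```python
from math import sqrt
from statistics import mean, pvariance

# Hypothetical scored responses: 6 examinees x 4 items (1 = correct, 0 = incorrect).
matrix = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
    [1, 1, 1, 1],
]


def cronbach_alpha(scores):
    """Coefficient alpha (equivalent to KR-20 for 0/1 items)."""
    k = len(scores[0])
    item_vars = [pvariance(col) for col in zip(*scores)]
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def pearson(x, y):
    """Pearson correlation; with a 0/1 variable this is the point-biserial."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


totals = [sum(row) for row in matrix]
alpha = cronbach_alpha(matrix)
sem = sqrt(pvariance(totals)) * sqrt(1 - alpha)  # SD of totals * sqrt(1 - reliability)
point_biserials = [pearson(list(col), totals) for col in zip(*matrix)]

print(round(alpha, 3), round(sem, 3), [round(r, 3) for r in point_biserials])
```

In practice, analysts often use the corrected item-total correlation, which removes the item from the total before correlating, and a platform would report these statistics alongside IRT parameters.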

Exporting of detailed raw files: You should be able to easily export the examinee response matrix, item times, item comments, scores, and all other result data.

API connections: You should have options to set up APIs to other platforms, like an LMS or CRM.

General Considerations

Ease-of-Use: As Albert Einstein is often quoted as saying, “Everything should be made as simple as possible, but no simpler.” The best e-Assessment software is one that offers sophisticated solutions in a way that anyone can use. Power users should be able to leverage technology like adaptive testing, while there should also be simpler roles for item writers or reviewers.

Integrations: Your platform should integrate with learning management systems, job applicant tracking systems, certification management systems, or whatever other business operations software is important to you.

Support and Training: Does the platform have a detailed manual? Bank of tutorial videos? Email support from product experts? Training webinars?

OK, now how do I find a Digital Assessment platform that fits my needs?

If you are out shopping, ask about the aspects in the list above, and be sure to check vendors’ websites for documentation on them. There is a huge range out there, from free survey software up to multi-million dollar platforms.


Conclusion

To sum up, implementing DA has its merits and demerits, as outlined above. Even though technology simplifies and enhances many processes for institutions and stakeholders, it still has some limitations. Nevertheless, many of the drawbacks can be mitigated by choosing the right methodology and examination software. We cannot ignore the need to transition from the traditional form of assessment to the digital one, since the benefits of DA far outweigh its drawbacks and costs. Of course, it is up to you to choose whether to keep using hard-copy assessments or go for the online option. However, we believe that in the digital era, what is needed is to plan wisely and choose an easy-to-use and robust examination platform with AI-based anti-cheating measures, such as Assess.ai, to secure credible outcomes.

About the Author

Laila Issayeva earned her BA in Mathematics and Computer Science at Aktobe State University and Master’s in Education at Nazarbayev University. She has experience as a math teacher, school leader, and as a project manager for the implementation of nationwide math assessments for Kazakhstan. She is currently pursuing a PhD in psychometrics.